AI And The Illusion Of Learning: Building A Framework For Responsible AI

Understanding the Limitations of Current AI Models

Current AI models, particularly those based on machine learning and deep learning, operate under significant limitations. One crucial aspect is the difference between correlation and causation. AI systems excel at identifying correlations within vast datasets, but they often struggle to establish genuine causal relationships. This means an AI might accurately predict an outcome based on observed correlations, but without understanding the underlying reasons why.
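The gap between correlation and causation can be illustrated with a small simulation. The sketch below uses entirely made-up data in which a hidden factor (temperature) drives two other variables that have no effect on each other. The variable names, noise levels, and the `residuals` helper are assumptions chosen for illustration only.

```python
import random

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def simulate(n=5000, seed=0):
    """Hypothetical data: temperature drives both variables; neither affects the other."""
    rng = random.Random(seed)
    temperature = [rng.gauss(20, 8) for _ in range(n)]
    ice_cream = [t + rng.gauss(0, 4) for t in temperature]
    sunburns = [t + rng.gauss(0, 4) for t in temperature]
    return temperature, ice_cream, sunburns

def residuals(ys, xs):
    """Remove the linear effect of xs from ys using a least-squares fit."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = (
        sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
        / sum((x - mean_x) ** 2 for x in xs)
    )
    return [y - mean_y - slope * (x - mean_x) for x, y in zip(xs, ys)]

temperature, ice_cream, sunburns = simulate()
raw = pearson(ice_cream, sunburns)
adjusted = pearson(
    residuals(ice_cream, temperature), residuals(sunburns, temperature)
)
print(f"raw correlation: {raw:.2f}")
print(f"after controlling for temperature: {adjusted:.2f}")
```

The raw correlation comes out strongly positive, yet it falls to roughly zero once the shared cause is accounted for. A model trained only on the two downstream variables could predict one from the other well without capturing why they move together.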

This reliance on massive datasets introduces another critical challenge: bias amplification. AI models learn from the data they are trained on, and if that data reflects existing societal biases (gender, racial, socioeconomic), the AI system can perpetuate and even amplify those biases unless they are measured and mitigated.

- Examples of biased datasets and their consequences: Facial recognition systems showing higher error rates for people with darker skin tones; loan-application algorithms discriminating against certain demographic groups.

- How biases lead to unfair or discriminatory outcomes: Biased AI in criminal justice can lead to wrongful convictions; biased hiring tools can perpetuate existing inequalities in the workplace.
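One common way to make such disparities measurable is to compare outcome rates across groups. The sketch below computes per-group approval rates and their ratio for a set of invented loan decisions. The group names, the counts, and the function names are hypothetical, and this is only one of many possible fairness metrics.

```python
from collections import defaultdict

def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest

# Invented decisions: 60 of 100 approved in group_a, 30 of 100 in group_b.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A frequently cited rule of thumb, the "four-fifths rule" from US employment guidance, treats a ratio below 0.8 as a signal worth investigating. A low ratio does not by itself prove discrimination, but it flags where a closer look is needed.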

Furthermore, the "black box" nature of many deep learning models poses a significant hurdle. The complexity of these models makes it difficult to understand how they arrive at their conclusions, hindering transparency and accountability. This is where explainable AI (XAI) becomes crucial.

- Techniques for increasing transparency and interpretability: Developing simpler, more transparent models; using techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) to interpret complex models.

- Examples of XAI methods: Rule extraction, decision trees, visualization techniques that illustrate the model's decision-making process.
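The idea behind SHAP can be shown on a toy model by computing exact Shapley values by brute force. This is not the SHAP library's API; it is a minimal sketch of the underlying attribution scheme, assuming a made-up scoring function (`credit_score`) and an arbitrary baseline input. Practical tools rely on far more efficient estimators, since the exact computation grows exponentially with the number of features.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley attribution of model(instance) - model(baseline) per feature.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n_features, so this only suits tiny illustrative models.
    """
    n = len(instance)
    features = range(n)

    def value(coalition):
        x = [instance[i] if i in coalition else baseline[i] for i in features]
        return model(x)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for size in range(n):
            # Weight of coalitions of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

def credit_score(x):
    """Hypothetical model with one interaction term (income * years_employed)."""
    income, debt, years_employed = x
    return 2.0 * income - 3.0 * debt + 0.5 * income * years_employed

instance = [5.0, 2.0, 4.0]   # the input being explained
baseline = [3.0, 1.0, 0.0]   # assumed reference input
phi = shapley_values(credit_score, instance, baseline)
for name, contribution in zip(["income", "debt", "years_employed"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions sum to the difference between the model's output for the instance and for the baseline, and the effect of the interaction term is split between the two features involved. That additive breakdown is what makes this style of explanation readable for a single prediction.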

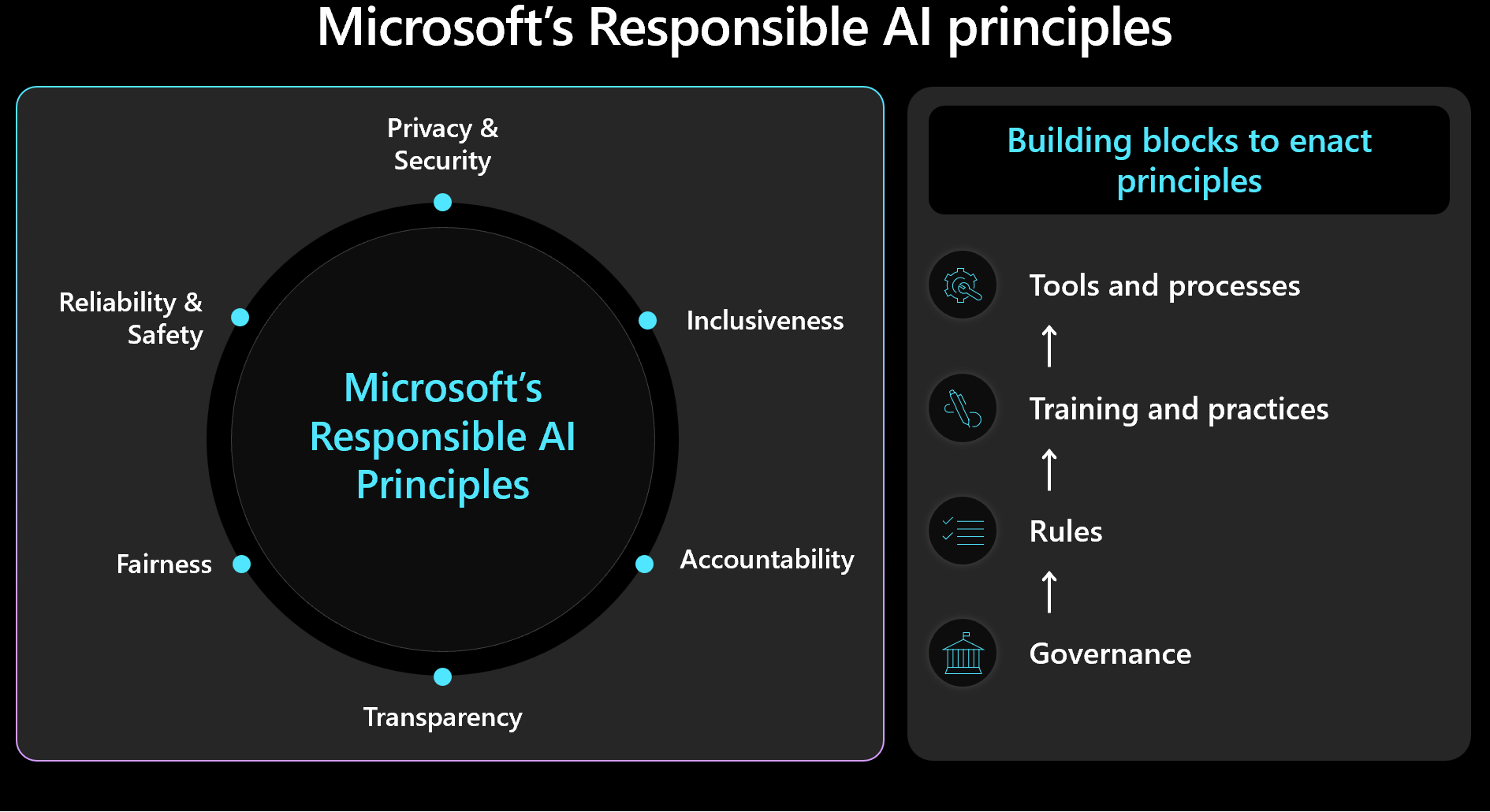

Building Ethical Considerations into the AI Development Lifecycle

Responsible AI necessitates incorporating ethical considerations from the very outset of the development process. This isn't an afterthought; it's foundational. Ethical considerations should guide every stage, from data collection and model design to deployment and ongoing monitoring.

A diverse development team is crucial in mitigating bias and promoting fairness. Different perspectives bring varied experiences and understanding, leading to more robust and equitable AI systems.

- Benefits of diverse perspectives: Identifying potential biases that might be overlooked by a homogeneous team; ensuring the AI system caters to a broader range of users and contexts.

- Best practices for inclusive design processes: Incorporating user feedback from diverse groups; employing bias detection and mitigation tools throughout the development process.

Ongoing monitoring and evaluation are essential to identify and address unintended consequences. AI systems are not static: models are retrained, and the data and users they encounter shift over time, so their impact needs continuous assessment.

- Methods for continuous monitoring and auditing: Regularly reviewing the AI system's performance against predefined ethical guidelines; tracking key metrics related to fairness, accuracy, and impact.

- Addressing and rectifying biases identified after deployment: Implementing mechanisms for feedback and reporting; retraining the model with more representative data; adjusting the system's parameters to minimize bias.
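Continuous monitoring of this kind can be sketched as a periodic audit that compares a fairness-related metric across groups and flags batches that drift past a tolerance. The example below uses synthetic prediction logs. The record format, the `max_gap` threshold of 0.10, and all function names are assumptions for illustration, not a recommended standard.

```python
def group_accuracy(records):
    """records: iterable of (group, prediction, label). Returns accuracy per group."""
    correct, total = {}, {}
    for group, pred, label in records:
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(pred == label)
    return {g: correct[g] / total[g] for g in total}

def audit(batches, max_gap=0.10):
    """Return (batch_index, gap) for each batch whose accuracy gap exceeds max_gap."""
    flagged = []
    for index, records in enumerate(batches):
        acc = group_accuracy(records)
        gap = max(acc.values()) - min(acc.values())
        if gap > max_gap:
            flagged.append((index, round(gap, 3)))
    return flagged

def make_batch(acc_a, acc_b, n=100):
    """Build synthetic records with the given per-group accuracy (label is always 1)."""
    records = []
    for group, acc in (("group_a", acc_a), ("group_b", acc_b)):
        hits = round(acc * n)
        records += [(group, 1, 1)] * hits + [(group, 0, 1)] * (n - hits)
    return records

# Three synthetic monitoring periods; the gap between groups widens over time.
batches = [
    make_batch(0.90, 0.88),
    make_batch(0.91, 0.85),
    make_batch(0.92, 0.74),
]
flagged = audit(batches)
print(flagged)
```

Only the last period exceeds the tolerance here. In practice a flagged batch would feed the feedback and retraining steps listed above, and the metric and threshold would be chosen to match the system's predefined ethical guidelines.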

Promoting Transparency and Accountability in AI

Transparency and accountability are paramount for responsible AI. We need regulations and guidelines to ensure transparency in AI algorithms and decision-making processes. This means making AI models and their underlying data more accessible (while protecting sensitive information) for scrutiny and auditing.

- Existing or proposed regulations related to AI ethics and responsibility: GDPR (General Data Protection Regulation), the EU AI Act, and various national initiatives focusing on AI ethics.

- Importance of data privacy and security: Ensuring data used to train AI models is obtained and used ethically, respecting individual privacy rights and data security best practices.

Mechanisms for accountability are equally important. When AI systems cause harm or make biased decisions, we need clear processes to determine responsibility and redress grievances.

- Potential solutions for addressing AI-related harm: Establishing independent oversight bodies to investigate AI-related incidents; creating mechanisms for redress for individuals harmed by AI systems.

- Establishing clear lines of responsibility: Defining roles and responsibilities for developers, deployers, and users of AI systems; creating clear accountability pathways for addressing AI-related harm.

Fostering Education and Public Engagement on Responsible AI

Public education is crucial for building trust and fostering responsible AI. The public needs to understand the capabilities and limitations of AI systems, along with their ethical implications.

- Increasing public understanding of AI and its ethical implications: Developing educational resources, promoting public discussions and debates, and supporting media literacy initiatives.

- Role of education in promoting responsible AI development: Integrating AI ethics into educational curricula at all levels; fostering critical thinking skills related to AI and its societal impact.

Open dialogue and collaboration are essential. Researchers, developers, policymakers, and the public must work together to shape the future of AI.

- Benefits of multi-stakeholder collaboration: Developing shared ethical guidelines and standards; fostering a sense of shared responsibility for ensuring AI benefits all of humanity.

- Initiatives and organizations promoting responsible AI: The Partnership on AI, OpenAI, and numerous academic and industry groups actively working on responsible AI development.

Conclusion: Moving Towards Responsible AI – A Call to Action

Building a framework for responsible AI development is not merely a technical challenge; it's a societal imperative. The illusion of learning can lead to unintended consequences, perpetuating existing inequalities and causing unforeseen harm. Addressing ethical concerns proactively is vital for harnessing the benefits of AI while mitigating its risks. We must actively engage in discussions, advocate for responsible AI policies, and contribute to building a future where AI benefits all of humanity. Let's work together to ensure AI remains a tool for progress, not a source of peril.

Featured Posts

-

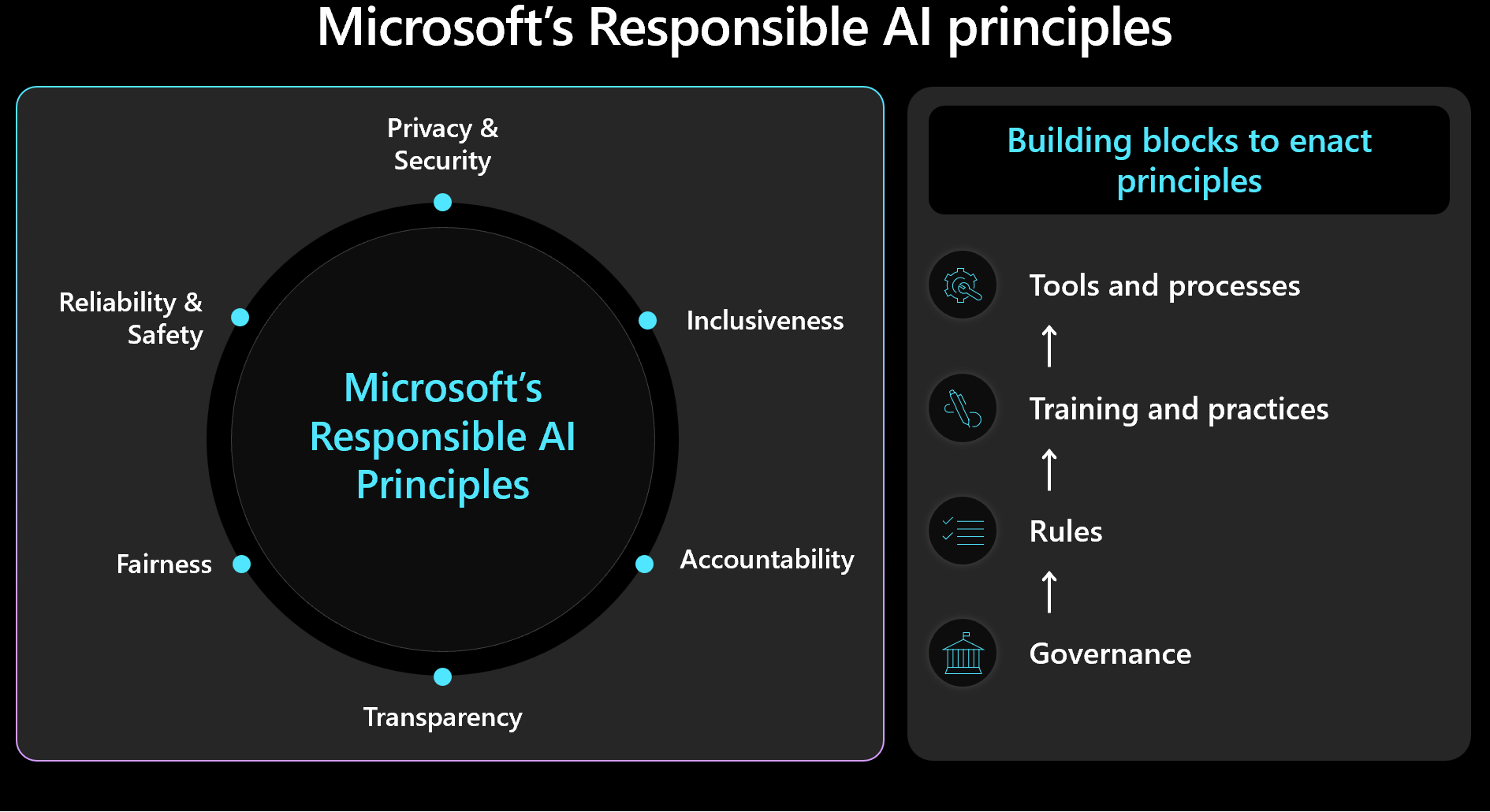

Cleveland Guardians Opening Day Weather Is It Typically This Cold

May 31, 2025

Cleveland Guardians Opening Day Weather Is It Typically This Cold

May 31, 2025 -

Read Cycle News Magazine Issue 12 2025 Cycling Updates

May 31, 2025

Read Cycle News Magazine Issue 12 2025 Cycling Updates

May 31, 2025 -

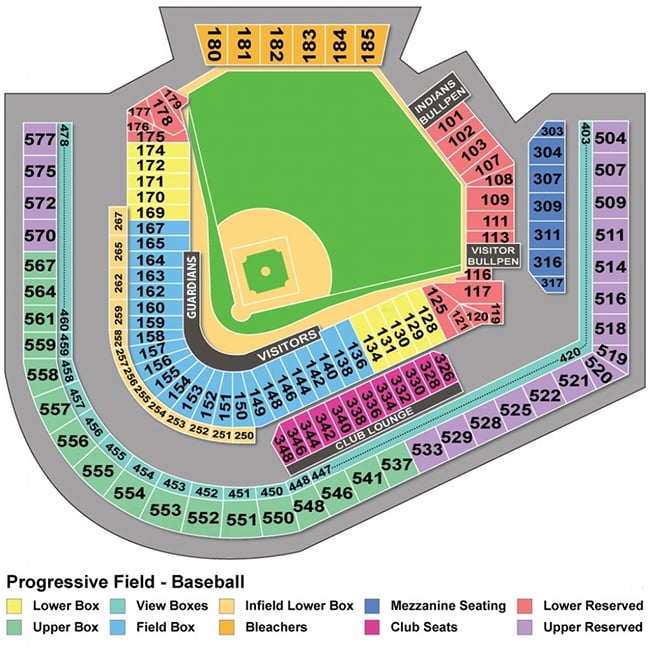

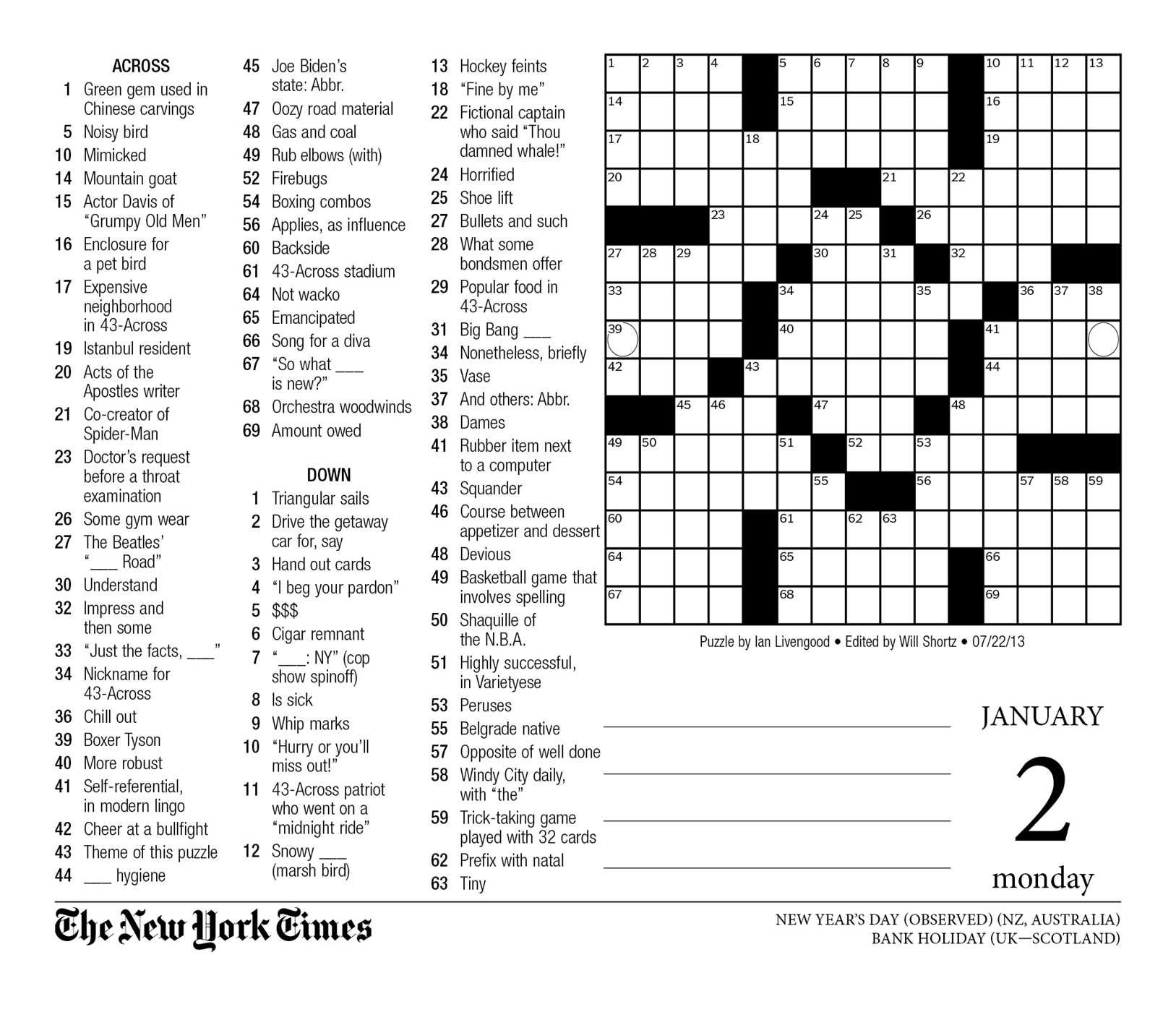

Solve The Nyt Mini Crossword May 7 Answers And Helpful Hints

May 31, 2025

Solve The Nyt Mini Crossword May 7 Answers And Helpful Hints

May 31, 2025 -

Zverevs Indian Wells Run Ends Griekspoor Upsets Top Seed

May 31, 2025

Zverevs Indian Wells Run Ends Griekspoor Upsets Top Seed

May 31, 2025 -

Rudy Giulianis Tribute To Bernie Kerik Remembering A Friend And Public Servant

May 31, 2025

Rudy Giulianis Tribute To Bernie Kerik Remembering A Friend And Public Servant

May 31, 2025