AI And The Limits Of Learning: A Path Towards Responsible Innovation


Data Bias and Its Impact on AI Systems

The Problem of Biased Datasets

AI models learn patterns from their training data, so biases in that data carry through to the model's outputs, where they can perpetuate and even amplify existing societal inequalities. The old rule "garbage in, garbage out" applies with particular force: the quality and representativeness of the data directly limit how fair and accurate a model can be. Biased AI systems can make unfair or discriminatory decisions, undermining trust and raising serious ethical concerns. This makes data bias one of the central challenges in the pursuit of responsible AI.

- Algorithmic bias in loan applications leading to discriminatory lending practices: AI models trained on historical data reflecting existing biases may deny loans to qualified applicants from marginalized communities.

- Facial recognition systems showing higher error rates for certain ethnic groups: This inaccuracy can have serious consequences in law enforcement and security applications, leading to misidentification and wrongful accusations.

- Recruitment AI favoring male candidates over equally qualified female candidates: AI-powered recruitment tools trained on historical hiring data that reflects gender bias may perpetuate this inequality in the workplace.
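
Disparities like these are usually surfaced by comparing a model's outcomes group by group. The sketch below is a minimal illustration, assuming binary labels and predictions and a single group attribute; the function names and the toy loan records are hypothetical and not drawn from any real system or library. It reports each group's selection rate (how often the model approves) and false negative rate (how often it rejects truly qualified applicants), plus the ratio of selection rates between two groups.

```python
from collections import defaultdict


def group_rates(records):
    """Per-group selection rate and false negative rate.

    Each record is a (group, y_true, y_pred) tuple with binary values,
    where 1 means "qualified"/"approved" and 0 means the opposite.
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "positives": 0, "false_neg": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        c["n"] += 1
        c["selected"] += y_pred
        if y_true == 1:
            c["positives"] += 1
            if y_pred == 0:
                c["false_neg"] += 1

    rates = {}
    for group, c in counts.items():
        rates[group] = {
            # Share of the group that the model approves.
            "selection_rate": c["selected"] / c["n"],
            # Share of truly qualified members the model wrongly rejects.
            "false_negative_rate": (
                c["false_neg"] / c["positives"] if c["positives"] else float("nan")
            ),
        }
    return rates


def disparate_impact_ratio(rates, group, reference):
    """Selection rate of `group` divided by that of `reference`."""
    return rates[group]["selection_rate"] / rates[reference]["selection_rate"]


# Hypothetical toy loan decisions: (group, truly_qualified, model_approved).
records = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 1, 1),
    ("B", 1, 0), ("B", 1, 1), ("B", 0, 0), ("B", 1, 0),
]
rates = group_rates(records)
print(rates["B"]["selection_rate"])  # 0.25
print(round(disparate_impact_ratio(rates, "B", "A"), 2))  # 0.33
```

In this toy data both groups contain three qualified applicants, yet the model approves all of them in group A and only one in group B. A ratio this far below 1.0 is a signal to investigate; US employment-selection guidance, for instance, uses a "four-fifths rule" that treats ratios under 0.8 as evidence of possible adverse impact. No single metric settles whether a system is fair, so audits typically examine several.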

Mitigating Bias in AI Development

Strategies for detecting and mitigating bias include careful data curation, algorithmic fairness techniques, and diverse development teams. Building responsible AI requires a proactive approach to fairness and inclusivity throughout the entire AI lifecycle.

- Implementing rigorous data auditing processes: Regularly reviewing and cleaning datasets to identify and correct biases is essential. This includes checking whether some groups are underrepresented and whether labels or outcomes are skewed across groups, then correcting or rebalancing the affected data.

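- Utilizing techniques like adversarial debiasing: In adversarial debiasing, the main model is trained alongside an adversary that tries to predict a protected attribute, such as gender or ethnicity, from the main model's outputs. The main model is penalized whenever the adversary succeeds, which pushes it toward predictions that reveal less about that attribute. Related approaches, such as counterfactual data augmentation, instead add training examples in which only the protected attribute is changed, reducing the model's reliance on it.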

- Ensuring diverse representation in the design and development process: Teams with diverse backgrounds and perspectives are better equipped to identify and address potential biases in AI systems. A multidisciplinary approach, involving ethicists, social scientists, and domain experts, is crucial.
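
The auditing and rebalancing steps above can be made concrete with a short script. As a minimal sketch, assuming a dataset with one group attribute and binary labels, it first reports each group's share of the data and then computes reweighing weights, a preprocessing technique (due to Kamiran and Calders) that gives each (group, label) combination the weight P(group) · P(label) / P(group, label). Note that this is a swapped-in, simpler technique and not adversarial debiasing, which requires jointly training a model and an adversary. All function names and the toy data are hypothetical.

```python
from collections import Counter


def representation_report(groups):
    """Share of the dataset belonging to each group."""
    counts = Counter(groups)
    return {g: counts[g] / len(groups) for g in counts}


def reweighing_weights(groups, labels):
    """Reweighing weights w(g, y) = P(g) * P(y) / P(g, y).

    Combinations that are rarer than independence would predict get
    weights above 1, so in the weighted data the label no longer
    depends on group membership.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (joint_counts[(g, y)] / n)
        for (g, y) in joint_counts
    }


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    pairs = [(g, y) for g, y in zip(groups, labels) if g == group]
    total = sum(weights[p] for p in pairs)
    positive = sum(weights[p] for p in pairs if p[1] == 1)
    return positive / total


# Hypothetical training data: group B is underrepresented and has a
# lower rate of positive labels than group A.
groups = ["A", "A", "A", "A", "A", "A", "B", "B"]
labels = [1, 1, 1, 1, 0, 0, 1, 0]

print(representation_report(groups))  # {'A': 0.75, 'B': 0.25}
weights = reweighing_weights(groups, labels)
print(weighted_positive_rate(groups, labels, weights, "A"))  # 0.625
print(weighted_positive_rate(groups, labels, weights, "B"))  # 0.625
```

Unweighted, the positive-label rate is about 0.67 for group A and 0.5 for group B; after reweighing, both equal the overall rate of 0.625. In practice the weights would be passed as per-sample weights to a learner that supports them. Reweighing leaves the records themselves unchanged, which keeps it easy to audit, but it does nothing about underrepresentation itself: group B is still only a quarter of the data.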

The Black Box Problem and Explainability in AI

Understanding AI Decision-Making

Many complex AI models, particularly deep learning systems, operate as "black boxes": their internal computations are not readily interpretable by people, which makes it difficult to understand how they reach a given decision. This lack of transparency poses significant challenges for accountability, trust, and responsible AI development.

- Lack of transparency hindering trust and accountability: When we cannot understand how an AI system arrives at a decision, it becomes difficult to hold its developers and operators accountable for errors or unfair outcomes. This lack of transparency erodes public trust.

- Difficulty in identifying and correcting errors: Understanding the reasoning behind an AI's decision is crucial for debugging and improving its performance. Without explainability, identifying and correcting errors becomes a much more challenging task.

- Challenges in complying with regulations requiring explainability: AI regulations increasingly require some degree of transparency about automated decisions, making it essential to develop methods to understand and communicate how AI systems reach them.

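Techniques for Improving Explainability

Methods like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) provide insight into individual model predictions. LIME approximates the model around a single prediction with a simple, interpretable surrogate model, while SHAP attributes a prediction to its input features using Shapley values from cooperative game theory. These techniques are important steps toward more transparent and responsible AI systems.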
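
SHAP builds on Shapley values, which average each feature's marginal contribution to a prediction over every possible subset ("coalition") of the other features. For a model with only a few features, they can be computed exactly by brute force. The sketch below is illustrative only and does not use the shap library's API; it assumes that a feature absent from a coalition is replaced by a single baseline value, and the scoring model, inputs, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values for one prediction by enumerating feature subsets.

    A feature "absent" from a coalition is replaced by its baseline value.
    Cost grows as 2**n, so this is only practical for a few features.
    """
    n = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return model(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis


# Hypothetical scoring model with an interaction between features 0 and 2.
def toy_model(z):
    return 2 * z[0] - z[1] + z[0] * z[2]


x = [3, 2, 1]
baseline = [0, 0, 0]
phis = shapley_values(toy_model, x, baseline)
print([round(p, 6) for p in phis])  # [7.5, -2.0, 1.5]
# Efficiency: attributions sum to f(x) - f(baseline) = 7.
print(round(sum(phis), 6))  # 7.0
```

The interaction term contributes 3 to this prediction and is split equally between features 0 and 2, which is why feature 0 receives 6 + 1.5. Practical SHAP implementations avoid the exponential enumeration through sampling-based approximations or model-specific algorithms, and they usually average over a background dataset rather than a single baseline.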

- Developing more interpretable AI models: Researchers are actively developing AI models that are inherently more transparent and easier to understand. This includes favoring simpler model families, such as decision trees, rule lists, and sparse linear models, where they perform adequately, and designing architectures whose internal reasoning is easier to inspect.

- Utilizing visualization techniques to explain model outputs: Visualizations can help communicate complex AI decisions in a more accessible way, making them understandable to a wider audience, including non-technical stakeholders.

- Creating audit trails to track AI decision-making processes: Detailed logs of AI actions and their underlying reasoning can provide valuable insights for auditing, debugging, and ensuring accountability.
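
An audit trail can start as a structured log entry for every decision, recording the model version, inputs, output, and an explanation such as feature attributions. The sketch below is one possible design, not a standard: the class, its field names, and the sample entries are hypothetical, and the hash chaining (each entry stores the SHA-256 hash of the previous one) is an assumed design choice that makes later edits to past entries detectable.

```python
import hashlib
import json
from datetime import datetime, timezone


class DecisionAuditLog:
    """Append-only, hash-chained log of model decisions (hypothetical design)."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _hash(body):
        # Canonical JSON (sorted keys) so the same content always hashes the same.
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, model_version, inputs, output, explanation):
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "explanation": explanation,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = self._hash(entry)
        self.entries.append(entry)
        return entry

    def verify(self):
        """Recompute every hash and check each link in the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._hash(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionAuditLog()
log.record("credit-model-v1", {"income": 3, "debt": 2}, "deny", {"income": 1.5, "debt": -2.0})
log.record("credit-model-v1", {"income": 5, "debt": 1}, "approve", {"income": 3.0, "debt": -1.0})
print(log.verify())  # True

# Editing a past entry breaks its hash and is detected.
log.entries[0]["output"] = "approve"
print(log.verify())  # False
```

A hash chain on its own cannot detect removal of the most recent entries, so a real deployment would also need durable append-only storage and some external record of the latest hash.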

The Ethical Implications of Advanced AI Capabilities

Autonomous Weapons Systems

The development of lethal autonomous weapons systems raises serious ethical concerns about accountability and the potential for unintended consequences. The delegation of life-or-death decisions to machines without human oversight is fraught with ethical dilemmas.

- Concerns regarding the delegation of life-or-death decisions to machines: This raises profound questions about human control, responsibility, and the potential for unpredictable outcomes.

- The risk of escalation in armed conflicts: Autonomous weapons could lower the threshold for initiating armed conflict, increasing the risk of escalation and unintended consequences.

- The need for international regulations and ethical guidelines: A global consensus on the ethical implications of autonomous weapons systems is urgently needed to prevent a dangerous arms race.

Job Displacement and Economic Inequality

Automation driven by AI could lead to significant job displacement, exacerbating existing economic inequalities. Addressing this challenge requires proactive strategies to mitigate negative impacts and ensure a just transition.

- The need for reskilling and upskilling initiatives: Investment in education and training programs is essential to equip workers with the skills needed for the jobs of the future.

- Policies to support workers transitioning to new roles: Government policies and social safety nets can play a vital role in supporting workers displaced by automation.

- Exploring alternative economic models that address automation's impact: This includes exploring concepts like universal basic income and other mechanisms to ensure a more equitable distribution of wealth and opportunity.

Conclusion

Developing responsible AI requires a proactive approach that acknowledges and addresses the limits of what AI systems learn from their data. By mitigating bias, improving explainability, and confronting the ethical questions raised by advanced capabilities such as autonomous weapons and large-scale automation, we can work toward a future in which AI benefits humanity as a whole. That future depends on our commitment to building a responsible, ethical framework for how this technology is developed and deployed. Let's prioritize responsible AI development and work together to ensure that this powerful technology serves everyone's best interests.

Featured Posts

-

A Speedy Review Of Molly Jongs How To Lose Your Mother

May 31, 2025

A Speedy Review Of Molly Jongs How To Lose Your Mother

May 31, 2025 -

Road To The Final Psg And Inter Milans Champions League Journey

May 31, 2025

Road To The Final Psg And Inter Milans Champions League Journey

May 31, 2025 -

Il Neorealismo Italiano Di Arese Borromeo Interpretazioni Di Ladri Di Biciclette

May 31, 2025

Il Neorealismo Italiano Di Arese Borromeo Interpretazioni Di Ladri Di Biciclette

May 31, 2025 -

Complete Glastonbury 2025 Lineup News From San Remo

May 31, 2025

Complete Glastonbury 2025 Lineup News From San Remo

May 31, 2025 -

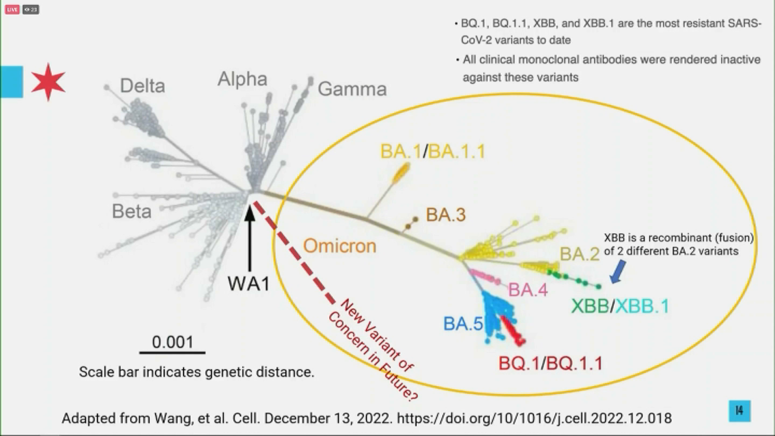

Covid 19 Variant Lp 8 1 A Comprehensive Overview

May 31, 2025

Covid 19 Variant Lp 8 1 A Comprehensive Overview

May 31, 2025