AI's Learning Paradox: Responsible AI Development And Deployment

Understanding the AI Learning Paradox

AI has the potential to transform industries and improve lives. That potential, however, is bound up with a significant risk: the tension between what AI systems can do and the harm they can cause. This tension forms the core of the AI learning paradox. As AI systems become more sophisticated and autonomous, the consequences of their actions, both intended and unintended, become increasingly significant.

- AI systems learn from data, inheriting biases present in that data: AI models are trained on vast datasets, and if these datasets reflect existing societal biases (e.g., gender, racial, or socioeconomic biases), the AI system will likely perpetuate and even amplify those biases in its outputs. This can lead to unfair or discriminatory outcomes.

- Unforeseen consequences can arise from complex AI models: The complexity of many modern AI models, particularly deep learning models, makes it difficult to understand precisely how they arrive at their decisions. This lack of transparency increases the risk of unexpected and potentially harmful consequences.

- Lack of transparency in AI decision-making can erode trust: When individuals cannot understand how an AI system made a particular decision, it's difficult for them to trust that decision, particularly in high-stakes situations such as loan applications, medical diagnoses, or criminal justice. This lack of trust can have significant societal implications.

- The potential for misuse of AI in areas like surveillance and autonomous weapons: The power of AI can be exploited for malicious purposes. The development and deployment of AI-powered surveillance systems raise serious privacy concerns, while the use of AI in autonomous weapons systems presents profound ethical and humanitarian challenges.

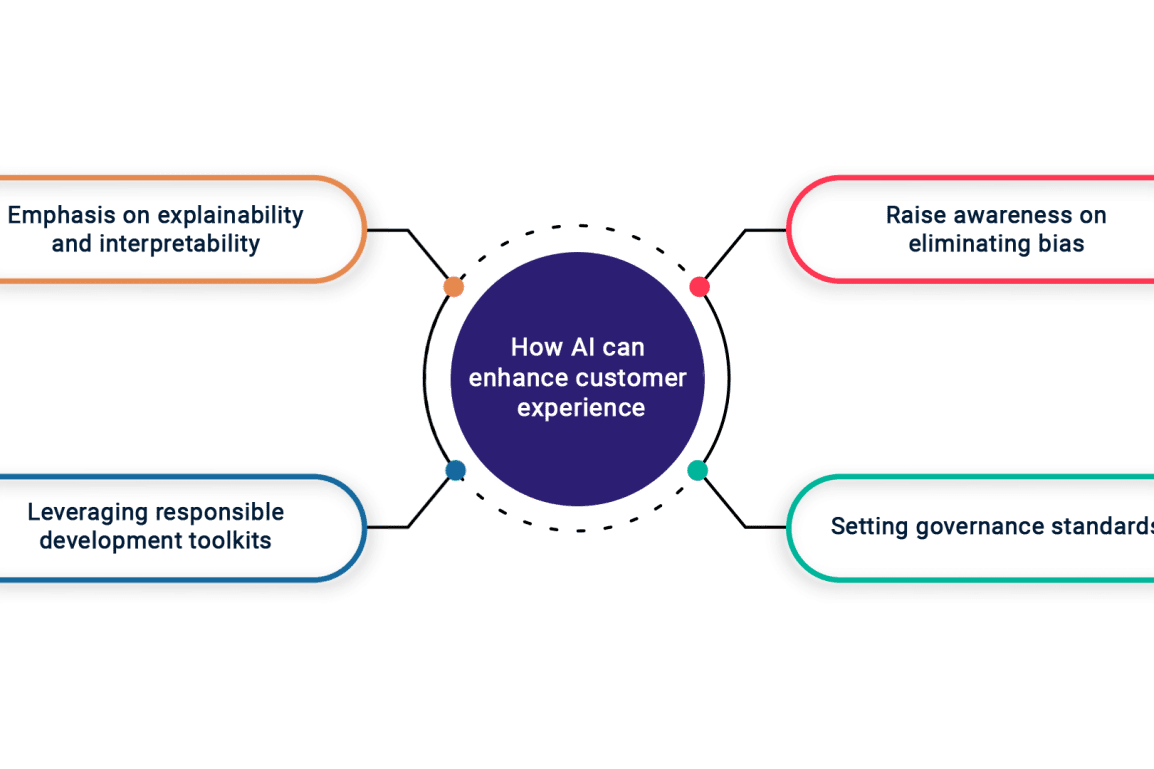

Bias Mitigation in AI Development

Addressing bias in AI is paramount for responsible AI development. This requires a multi-faceted approach that tackles bias at every stage of the AI lifecycle.

- Data augmentation techniques to improve data diversity: Enhancing the training datasets with more representative data can help mitigate bias. This involves actively seeking out and incorporating data from underrepresented groups, and can also include generating transformed or synthetic examples for groups that are scarce in the original data.

- Algorithmic fairness and accountability tools: Developing and employing algorithms specifically designed to identify and mitigate bias in AI models is crucial. These tools can help detect and correct discriminatory outcomes.

- Human-in-the-loop approaches for monitoring and correcting biases: Integrating human oversight into the AI development and deployment process allows for the identification and correction of biases that might otherwise go unnoticed.

- Regular audits and evaluations of AI systems for bias detection: Ongoing monitoring and evaluation are essential to ensure that AI systems remain fair and unbiased over time. Regular audits can reveal emerging biases and allow for corrective action.
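
As one illustration of the fairness tools and regular audits described above, the sketch below compares positive-outcome rates across groups, a check often called demographic parity. It is a minimal example, not a complete audit: the function names, the 0/1 prediction encoding, and the 0.8 flagging threshold (borrowed from the "four-fifths" rule of thumb in US employment-selection guidance) are assumptions for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred  # assumes predictions are encoded as 0 or 1
    return {g: positives[g] / totals[g] for g in totals}


def audit_demographic_parity(predictions, groups, min_ratio=0.8):
    """Hypothetical audit helper: flag the model if the lowest group rate
    falls below min_ratio times the highest group rate."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    lowest = min(rates.values())
    impact_ratio = lowest / highest if highest > 0 else 1.0
    return {
        "rates": rates,
        "parity_difference": highest - lowest,
        "impact_ratio": impact_ratio,
        "flagged": impact_ratio < min_ratio,
    }


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(audit_demographic_parity(preds, groups))
```

For these predictions, group "a" receives a positive outcome 75% of the time and group "b" 25%, giving an impact ratio of about 0.33, which the sketch flags. Demographic parity is only one fairness criterion; others, such as equal error rates across groups, can conflict with it, so choosing which metric to audit is itself a design decision.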

The Importance of Diverse Datasets

The foundation of fair and unbiased AI lies in the data used to train it. Diverse datasets, reflecting the full spectrum of human experiences and perspectives, are essential to prevent skewed outcomes and ensure that AI systems benefit all members of society. Without representative data, AI models will inevitably perpetuate and amplify existing inequalities.
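
One simple way to test whether a training set is representative is to compare each group's share of the data against a reference distribution, such as census figures for the population the system will serve. The sketch below assumes a group label is available for every record; the reference shares, the 5-percentage-point tolerance, and the helper names are hypothetical choices.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Observed share minus reference share for each group in reference_shares."""
    counts = Counter(groups)
    total = len(groups)
    return {
        group: (counts.get(group, 0) / total if total else 0.0) - expected
        for group, expected in reference_shares.items()
    }


def underrepresented(groups, reference_shares, tolerance=0.05):
    """Hypothetical helper: groups whose share falls short by more than tolerance."""
    gaps = representation_gaps(groups, reference_shares)
    return sorted(g for g, gap in gaps.items() if gap < -tolerance)


if __name__ == "__main__":
    records = ["x"] * 70 + ["y"] * 28 + ["z"] * 2
    reference = {"x": 0.5, "y": 0.3, "z": 0.2}  # e.g. census shares (assumed)
    print(underrepresented(records, reference))
```

With 70, 28, and 2 records from groups x, y, and z against reference shares of 50%, 30%, and 20%, only group z falls more than five points short, so it is the only group reported.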

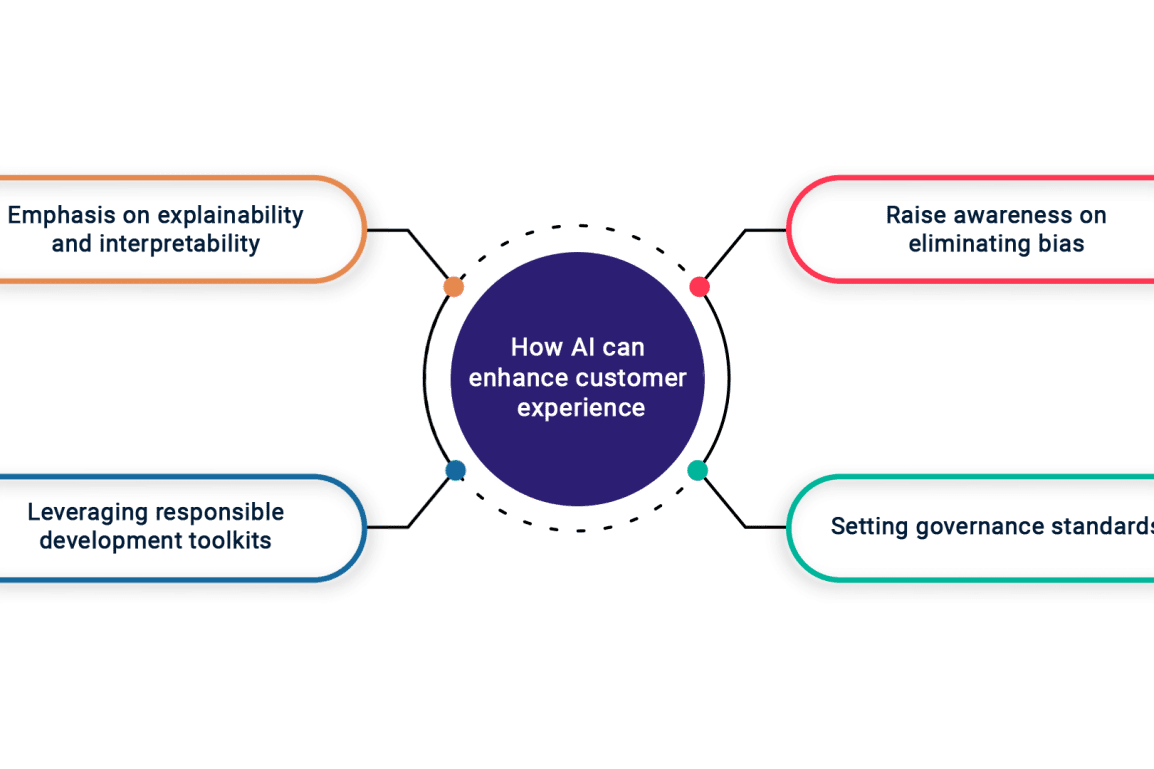

Ensuring Transparency and Explainability

Understanding how AI systems arrive at their conclusions is critical for building trust and accountability. "Black box" AI systems, where the decision-making process is opaque, are inherently problematic.

- Explainable AI (XAI) techniques to improve model interpretability: XAI techniques are being developed to make AI models more transparent and understandable. These techniques aim to provide insights into the reasoning behind an AI system's decisions.

- Development of methods for documenting and communicating AI decision-making processes: Clear documentation of the data, algorithms, and decision-making processes used by AI systems is crucial for accountability and transparency.

- The role of transparency in building public trust and accountability: Openness about how AI systems work is essential for building public confidence and ensuring that these systems are used responsibly.

- The legal and ethical implications of "black box" AI systems: The lack of transparency in "black box" AI systems raises significant legal and ethical concerns, particularly in high-stakes applications.
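
Permutation feature importance is one widely used, model-agnostic XAI technique: shuffle one input feature at a time and measure how much the model's accuracy drops. A large drop suggests the model relies heavily on that feature. The sketch below treats the model as any Python callable that maps a row to a label; the function names and the toy model are hypothetical.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows the model labels correctly."""
    correct = sum(1 for row, y in zip(rows, labels) if model(row) == y)
    return correct / len(labels)


def permutation_importance(model, rows, labels, n_features, n_repeats=5, seed=0):
    """Mean accuracy drop when each feature column is shuffled independently."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in rows]
            rng.shuffle(column)  # break the link between feature j and the labels
            shuffled = []
            for row, value in zip(rows, column):
                new_row = list(row)
                new_row[j] = value
                shuffled.append(new_row)
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return baseline, importances


def toy_model(row):
    """Hypothetical model: predicts 1 when feature 0 exceeds 0.5."""
    return 1 if row[0] > 0.5 else 0


if __name__ == "__main__":
    # Toy data: the label depends only on feature 0; feature 1 is noise.
    rows = [(i / 10, (i * 7) % 10 / 10) for i in range(10)]
    labels = [1 if x > 0.5 else 0 for x, _ in rows]
    print(permutation_importance(toy_model, rows, labels, n_features=2))
```

Because the toy model never reads the second feature, shuffling it leaves accuracy unchanged and its importance is exactly zero. Note that the scores describe what the model relies on, not causal relationships in the world.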

Ethical Frameworks for AI Deployment

Establishing robust ethical frameworks for AI development and deployment is crucial for guiding responsible innovation. These frameworks should address a wide range of ethical considerations.

- The role of ethical review boards in assessing AI projects: Independent ethical review boards can play a vital role in assessing the ethical implications of AI projects before they are deployed.

- The integration of ethical considerations throughout the AI lifecycle: Ethical considerations should be integrated into every stage of the AI lifecycle, from data collection and algorithm design to deployment and monitoring.

- The importance of stakeholder engagement in defining ethical standards: Broad stakeholder engagement, involving diverse groups and perspectives, is crucial for developing ethical standards that reflect societal values.

- The need for international collaboration on AI ethics: Given the global reach of AI, international collaboration is essential to establish consistent and effective ethical guidelines.

Mitigating Risks and Promoting Responsible Innovation

Managing the risks associated with AI deployment is crucial for realizing its benefits safely and responsibly.

- Robust testing and validation procedures before deployment: Rigorous testing and validation are necessary to identify and address potential problems before AI systems are deployed in real-world applications.

- Continuous monitoring and evaluation of deployed AI systems: Ongoing monitoring and evaluation are vital for identifying and responding to emerging risks and unforeseen consequences.

- Mechanisms for addressing errors and malfunctions: Clear procedures should be in place for addressing errors and malfunctions in deployed AI systems to minimize harm and maintain public trust.

- The development of safety protocols for high-stakes AI applications: Specific safety protocols are needed for high-stakes applications of AI, such as autonomous vehicles or medical diagnosis systems, to ensure safety and prevent harm.
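
Continuous monitoring often includes checking whether the data a deployed model sees has drifted away from the data it was validated on. The sketch below computes the population stability index (PSI), a common drift statistic, for one numeric feature. The bin edges, the small floor that avoids log(0), the helper names, and the 0.2 alert threshold are assumptions; thresholds between roughly 0.1 and 0.25 are commonly quoted rules of thumb rather than standards.

```python
import bisect
import math


def bin_shares(values, edges, floor=1e-6):
    """Share of values in each bin defined by sorted edges."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        i = min(max(i, 0), n_bins - 1)  # clamp out-of-range values into the end bins
        counts[i] += 1
    # A small floor keeps empty bins from producing log(0) or division by zero.
    return [max(c / len(values), floor) for c in counts]


def population_stability_index(reference, live, edges):
    """PSI = sum over bins of (live_i - ref_i) * ln(live_i / ref_i)."""
    ref = bin_shares(reference, edges)
    cur = bin_shares(live, edges)
    return sum((c - r) * math.log(c / r) for c, r in zip(cur, ref))


def check_drift(reference, live, edges, alert_threshold=0.2):
    """Hypothetical monitoring hook: return the PSI and whether it exceeds the threshold."""
    psi = population_stability_index(reference, live, edges)
    return psi, psi > alert_threshold


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    validation_scores = [0.05 + 0.1 * i for i in range(10)]
    production_scores = [0.9] * 10  # every live value lands in the top bin
    print(check_drift(validation_scores, production_scores, edges))
```

Every term in the sum is non-negative, so identical distributions give a PSI of zero and any shift increases it. A flagged result does not by itself mean the model is wrong; it is a signal to trigger the error-handling and review procedures listed above.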

Conclusion

The AI learning paradox highlights the critical need for responsible AI development and deployment. By proactively addressing bias, ensuring transparency, establishing ethical frameworks, and mitigating risks, we can harness the immense potential of AI while minimizing its downsides. Ignoring this paradox risks creating AI systems that perpetuate inequalities and undermine public trust. Let's embrace responsible AI development and deployment to unlock the true benefits of this transformative technology and build a more equitable and prosperous future.