Algorithms And Mass Shootings: Examining Corporate Liability

The Role of Social Media Algorithms in Radicalization

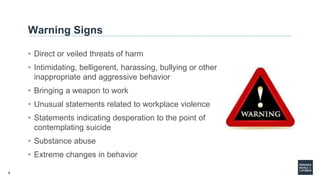

Social media algorithms are designed to maximize engagement and user retention, and in doing so they can inadvertently create environments ripe for radicalization. These algorithms, often opaque in both design and operation, can contribute to the spread of extremist ideologies and to the recruitment of individuals into violent groups.

Echo Chambers and Filter Bubbles

Algorithms curate user feeds, prioritizing content deemed most likely to generate engagement. This can lead to the formation of "echo chambers," where users are primarily exposed to information confirming their existing beliefs, even if those beliefs are extreme or violent. Filter bubbles further restrict exposure to diverse perspectives, reinforcing radicalization.

- Examples: Algorithmic promotion of extremist groups and individuals on platforms like YouTube and Facebook.

- Lack of Content Moderation: Insufficient or inconsistent moderation of hate speech and violent content.

- Spread of Misinformation: Algorithms can amplify false narratives and conspiracy theories that fuel extremist ideologies.

- Amplification of Hate Speech: The algorithms' focus on engagement often inadvertently boosts the visibility of hateful and violent rhetoric, normalizing and even encouraging such behavior.
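The feedback loop described in this section can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a description of how any real platform ranks content: the `Post` fields, the `predicted_engagement` scores, the affinity weights, and the `boost` value are all hypothetical, chosen only to show how ranking purely by expected engagement can keep reinforcing the topics a user already sees.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    topic: str
    predicted_engagement: float  # hypothetical model score in [0, 1]


def rank_feed(posts, affinity, k=3):
    """Return the top-k posts by engagement score weighted by topic affinity."""
    def score(post):
        return post.predicted_engagement * affinity.get(post.topic, 0.1)
    return sorted(posts, key=score, reverse=True)[:k]


def update_affinity(affinity, shown_posts, boost=0.2):
    """Simulate the feedback loop: each exposure raises affinity for that topic."""
    updated = dict(affinity)
    for post in shown_posts:
        updated[post.topic] = updated.get(post.topic, 0.1) + boost
    return updated


# Illustrative data only; topics and scores are made up.
posts = [
    Post("a", "politics", 0.9),
    Post("b", "politics", 0.8),
    Post("c", "science", 0.6),
    Post("d", "sports", 0.5),
    Post("e", "cooking", 0.4),
]
affinity = {"politics": 0.5, "science": 0.5, "sports": 0.5, "cooking": 0.5}

# Run a few rounds: the same topics keep winning and their weights keep growing.
for _ in range(3):
    feed = rank_feed(posts, affinity)
    affinity = update_affinity(affinity, feed)

feed_ids = [post.post_id for post in feed]
```

In this toy run the user starts with equal interest in every topic, yet the sports and cooking posts never reach the feed, and the gap between the favored topic and the others widens each round. Real ranking systems are far more complex, but the sketch shows why an engagement-only objective tends to narrow exposure rather than broaden it.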

Targeted Advertising and Recruitment

Sophisticated algorithms utilize user data to target individuals with specific interests and vulnerabilities. This capability is exploited by extremist groups to reach potential recruits through personalized propaganda campaigns.

- Targeted Advertising Campaigns: Extremist groups utilize targeted advertising to reach individuals expressing interest in relevant keywords or topics.

- Use of Data Analytics: Sophisticated data analytics techniques are employed to identify individuals susceptible to recruitment.

- Ethical Implications: Targeted advertising that promotes violence and extremism is profoundly troubling, and it makes the question of corporate responsibility for enabling such activity paramount.

Liability and Negligence

Determining corporate liability for the role of social media platforms in mass shootings presents significant legal challenges. Existing legal frameworks offer limited guidance in this complex area.

Existing Legal Frameworks

Current legal frameworks struggle to address the issue definitively. In the United States, Section 230 of the Communications Decency Act gives online platforms broad immunity from liability for user-generated content, although this protection is increasingly challenged. The European Union's Digital Services Act (DSA) represents a move towards greater platform responsibility, yet proving direct causation between algorithmic design and a specific violent act remains a significant hurdle.

- Section 230 (USA): Provides significant legal protection to online platforms from liability for user-generated content.

- Digital Services Act (EU): Imposes greater responsibilities on online platforms regarding content moderation and algorithmic transparency.

- Challenges in Proving Causation: Establishing a direct causal link between algorithmic design and a mass shooting is exceptionally difficult.

Arguments for Corporate Responsibility

Despite these challenges, compelling arguments exist for holding social media companies accountable. The foreseeability of harm, the failure to adequately moderate content, and the prioritization of profit over safety all contribute to a strong case for corporate responsibility.

- Foreseeability of Harm: The potential for harm from the spread of extremist ideologies and hate speech on social media is readily foreseeable.

- Failure to Adequately Moderate Content: The persistent failure of platforms to effectively moderate hate speech and violent content points to a significant breach of responsibility.

- Profit Motive Outweighing Safety Concerns: The prioritization of user engagement and profit maximization over public safety raises serious ethical and legal concerns.

- Potential Legal Strategies: Legal strategies could include negligence claims, arguing that platforms failed to take reasonable steps to prevent foreseeable harm.

Proposed Solutions and Policy Recommendations

Addressing the issue requires a multi-pronged approach encompassing improved content moderation, legislative changes, and a fundamental shift in the ethical considerations guiding algorithm design.

Improved Content Moderation

Improved content moderation strategies are crucial. This involves a combination of advanced AI-powered detection systems and robust human oversight, operating under stricter guidelines and increased transparency.

- Stricter Guidelines: Implementation of clearer and more comprehensive guidelines for content moderation.

- Increased Transparency: Greater transparency in the design and operation of algorithms used in content moderation.

- Independent Audits: Regular independent audits of content moderation practices to ensure effectiveness and accountability.

Legislative Changes

Legislative action is vital to hold social media companies accountable. This may involve amendments to existing laws or entirely new legislation specifically targeting online radicalization and the promotion of violence.

- Amendments to Existing Laws: Revisions to laws like Section 230 to clarify platform responsibility.

- New Legislation: The enactment of new legislation specifically addressing online radicalization and its connection to real-world violence.

- International Cooperation: International collaboration is essential to address the global nature of online extremism.

Ethical Considerations in Algorithm Design

Ethical considerations must be central to algorithm design. Algorithms should be designed to minimize harm and promote a safer online environment.

- Bias Detection and Mitigation: Algorithms must be designed and audited to detect and mitigate biases that can contribute to the spread of extremist ideologies.

- Promoting Diverse and Inclusive Content: Algorithms should be designed to promote diverse and inclusive content, countering the formation of echo chambers and filter bubbles.

- User Education and Media Literacy: Promoting media literacy and critical thinking skills among users to help them identify and resist extremist propaganda.
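One way to make the diversity recommendation above concrete is a re-ranking step that discounts repeated topics. The following is a minimal sketch assuming a hypothetical candidate list of `(post_id, topic, score)` tuples and an arbitrary `penalty` factor; it is one simple greedy technique among many, not a method attributed to any platform.

```python
def rerank_with_diversity(candidates, k=3, penalty=0.5):
    """Greedily pick k items, discounting topics that are already in the feed.

    candidates: list of (post_id, topic, score) tuples (hypothetical format).
    penalty: multiplier applied once per earlier pick of the same topic.
    """
    selected = []
    topic_counts = {}
    remaining = list(candidates)
    while remaining and len(selected) < k:
        # Effective score shrinks each time the topic has already been chosen.
        best = max(
            remaining,
            key=lambda c: c[2] * (penalty ** topic_counts.get(c[1], 0)),
        )
        selected.append(best)
        topic_counts[best[1]] = topic_counts.get(best[1], 0) + 1
        remaining.remove(best)
    return selected


# Illustrative data only; topics and scores are made up.
candidates = [
    ("a", "politics", 0.9),
    ("b", "politics", 0.8),
    ("c", "sports", 0.6),
    ("d", "cooking", 0.5),
]
diverse_ids = [post_id for post_id, _, _ in rerank_with_diversity(candidates)]
plain_ids = [post_id for post_id, _, _ in sorted(candidates, key=lambda c: c[2], reverse=True)[:3]]
```

Sorting by raw score alone fills two of the three slots with the same topic, while the discounted version surfaces three different topics. The trade-off is deliberate: a lower `penalty` favors variety at the cost of showing some items the engagement model scores lower.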

Conclusion

The relationship between algorithms and mass shootings is complex and multifaceted. While proving direct causation remains challenging, the arguments for corporate liability cannot be dismissed. Social media companies have a responsibility to mitigate the risks associated with their algorithms, and doing so will require improved content moderation, stronger legislation, and a fundamental shift in the ethical considerations guiding algorithm design. Understanding the role of algorithms in mass shootings is the first step towards demanding accountability from corporations and advocating for legislation that prioritizes public safety.
