Algorithms, Radicalization, and Mass Shootings: Holding Tech Companies Accountable

The Role of Algorithms in Amplifying Extremist Content

Echo Chambers and Filter Bubbles

Recommendation algorithms, designed to personalize online experiences, often create echo chambers and filter bubbles. Driven by user data and engagement metrics, they prioritize content that aligns with a user's existing beliefs, even when those beliefs are hateful or extremist. This reinforces pre-existing biases and can lead to radicalization.

- Examples: YouTube's recommendation system leading users down rabbit holes of conspiracy theories and extremist ideologies; Facebook's newsfeed algorithm prioritizing sensational and emotionally charged content, including hate speech and violent rhetoric.

- Details: The mechanics are simple: if a user frequently engages with extremist content, the algorithm interprets this as a preference and prioritizes similar content in the future. This creates a self-reinforcing loop that pushes individuals further down the path of radicalization. Bias inherent in the algorithm exacerbates the problem.
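The self-reinforcing loop described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real platform's recommender: the function name `simulate_feedback_loop`, the topic labels, the `affinity` values, and the multiplicative update rule are all hypothetical choices made for illustration. Each round, the feed shows topics in proportion to their current weight, and a topic's weight grows in proportion to the engagement it is expected to receive.

```python
def simulate_feedback_loop(affinity, rounds=20, learning_rate=0.5):
    """Toy engagement-driven recommender (hypothetical, for illustration only).

    affinity: dict mapping topic -> assumed probability that the user
              engages with an item of that topic when it is shown.
    Returns a list with one dict per round, mapping topic -> share of the feed.
    """
    # Every topic starts with the same weight, so the feed starts out balanced.
    weights = {topic: 1.0 for topic in affinity}
    history = []

    for _ in range(rounds):
        total = sum(weights.values())
        shares = {topic: w / total for topic, w in weights.items()}
        history.append(shares)

        # Expected engagement = how often the topic is shown * how often
        # the user engages when it is shown. The recommender treats that
        # engagement as a preference signal and boosts the topic's weight.
        for topic, share in shares.items():
            expected_engagement = share * affinity[topic]
            weights[topic] *= 1.0 + learning_rate * expected_engagement

    return history


if __name__ == "__main__":
    # Assumed values: the user is only slightly more likely to engage
    # with the "fringe" topic than with the other two.
    history = simulate_feedback_loop(
        {"news": 0.2, "sports": 0.2, "fringe": 0.3}
    )
    for round_number in (0, 9, 19):
        share = history[round_number]["fringe"]
        print(f"round {round_number + 1}: fringe share = {share:.3f}")
```

Even though the assumed difference in engagement is small, the share of the feed given to the higher-engagement topic rises every round, because a larger share produces more engagement, which in turn produces a larger share. Real systems are far more complex, but the sketch shows why a modest initial preference can compound.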

The Business Model of Engagement

The core business model of many social media platforms relies on maximizing user engagement. More engagement means more time spent on the platform and, in turn, more advertising revenue. Unfortunately, extremist content, often designed to be shocking and provocative, tends to generate high levels of engagement. This creates a perverse incentive for platforms to prioritize engagement over content moderation, even when the content is harmful.

- Examples: The prioritization of “likes,” shares, and comments over content quality; the slow response to reports of hate speech and violent content.

- Details: Financial incentives overshadow ethical considerations, so platforms inadvertently, or even deliberately, profit from the spread of extremist ideologies. This monetization of hate speech needs to be addressed through stronger content moderation policies and greater platform responsibility.

The Link Between Online Radicalization and Mass Shootings

The Online Grooming Process

Online platforms are increasingly used to groom and radicalize individuals, creating fertile ground for violence. Extremist groups and individuals use these platforms to spread their ideology, recruit new members, and build online communities that foster a sense of belonging and validation.

- Examples: Online forums and social media groups promoting misogynistic ideologies (like incel communities) and white supremacist views; encrypted messaging apps used for private communication and planning of violent acts.

- Details: These online spaces use psychological manipulation techniques, such as creating an us-versus-them mentality, fostering a sense of grievance, and promising belonging and purpose. Understanding these radicalization pathways is crucial to preventing future acts of violence. Hate speech often acts as a precursor to more violent rhetoric.

Manifestation of Online Radicalization in Real-World Violence

A growing body of evidence links online radicalization to real-world mass shootings. Many mass shooting perpetrators have a history of engagement with extremist online communities, exhibiting a pattern of online grooming and radicalization before committing acts of violence.

- Examples: Numerous case studies reveal the online activities of mass shooting perpetrators, including their participation in online forums, their consumption of extremist propaganda, and their expressions of violent intentions online.

- Details: These cases highlight the real-world consequences of online radicalization and demonstrate the direct link between online activity and violent acts. The influence of online communities and platforms in inspiring and facilitating acts of violence cannot be ignored.

Holding Tech Companies Accountable

Legal and Regulatory Frameworks

Stronger legal and regulatory frameworks are urgently needed to hold tech companies accountable for their role in online radicalization. Current laws and regulations often prove insufficient, struggling to keep pace with the rapid evolution of technology and the sophisticated strategies employed by extremist groups.

- Examples: The limitations of current laws on hate speech and online harassment; the challenges of enforcing content moderation policies across different jurisdictions.

- Details: Balancing government intervention against the protection of free speech is a complex challenge, but one that must be addressed. Improving existing content moderation policies and enhancing legal liability for platforms are vital steps.

Increased Transparency and Responsibility

Tech companies must embrace greater transparency and responsibility in their content moderation practices. This includes developing more sophisticated algorithms that identify and flag extremist content, implementing robust content moderation strategies, and creating effective user reporting mechanisms.

- Examples: Investing in AI-powered tools to detect hate speech and violent content; enhancing human moderation teams; providing users with clearer avenues to report problematic content.

- Details: This requires a commitment to ethical algorithms and responsible technology. Tech companies must prioritize corporate accountability and demonstrate a commitment to mitigating the risks associated with their platforms. Transparency initiatives regarding algorithm design and content moderation practices are essential.

Conclusion

The evidence overwhelmingly demonstrates a strong link between algorithms, online radicalization, and mass shootings. The current business models of many tech companies inadvertently, and sometimes intentionally, contribute to the spread of extremist ideologies, creating a dangerous cycle of online grooming and real-world violence. We must demand accountability from these companies and urgently address algorithmic radicalization to prevent mass shootings. Contact your legislators and call on them to enact stronger laws, support organizations combating online extremism, and pressure tech companies to prioritize safety and ethical practices above profit. Let your voice be heard.

Featured Posts

-

Medvedevs French Open Exit Norries Stunning Victory And Djokovics Continued Dominance

May 30, 2025

Medvedevs French Open Exit Norries Stunning Victory And Djokovics Continued Dominance

May 30, 2025 -

Cool Wet And Windy Weather Expected In San Diego County

May 30, 2025

Cool Wet And Windy Weather Expected In San Diego County

May 30, 2025 -

Manchester United Louva Bruno Fernandes Desempenho E Importancia Para O Clube

May 30, 2025

Manchester United Louva Bruno Fernandes Desempenho E Importancia Para O Clube

May 30, 2025 -

Did Elon Musk Father Amber Heards Twins A Look At The Embryo Controversy

May 30, 2025

Did Elon Musk Father Amber Heards Twins A Look At The Embryo Controversy

May 30, 2025 -

A Widower Under Scrutiny Remy In Fbi Most Wanted Season 6 Sneak Peek

May 30, 2025

A Widower Under Scrutiny Remy In Fbi Most Wanted Season 6 Sneak Peek

May 30, 2025

Latest Posts

-

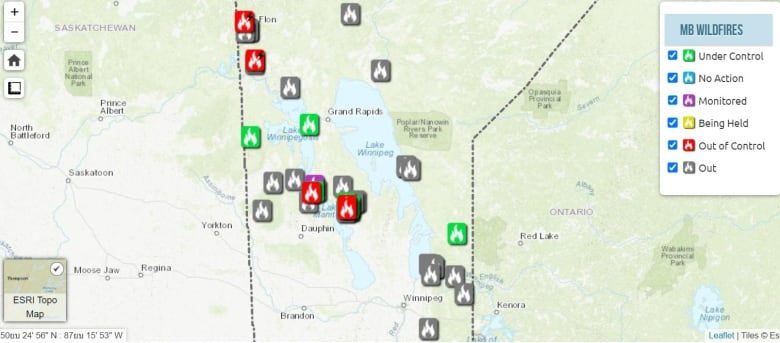

Canada News Desperate Fight Against Devastating Wildfires In Eastern Manitoba

May 31, 2025

Canada News Desperate Fight Against Devastating Wildfires In Eastern Manitoba

May 31, 2025 -

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025 -

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025 -

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025 -

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025