Character AI's Chatbots: Protected Speech Or Not? A Legal Analysis

Understanding Free Speech Principles and Their Application to AI

The First Amendment in the United States (and equivalent principles in other jurisdictions) generally protects the expression of ideas, opinions, and information, even when those ideas are unpopular or controversial. Applying these well-established principles to AI-generated content, however, presents unique challenges. The traditional concept of an "author", a human being with intent, is blurred when the speaker is an AI chatbot. Who, then, is responsible for the chatbot's output: the developers of the AI, the users who prompt the responses, or the AI itself?

- Key legal precedents concerning online speech and platform liability: Cases like Zeran v. America Online and Doe v. MySpace, both decided under Section 230 of the Communications Decency Act, illustrate the complexities of assigning liability for user-generated content on online platforms. These precedents will likely play a crucial role in determining legal responsibility for potentially harmful output from Character AI's chatbots, although whether output generated by a platform's own model counts as user-generated content remains an open question.

- Discussion of potential legal grey areas in relation to AI-generated content: The novelty of AI-generated content creates significant legal uncertainty. Existing laws are not explicitly designed to address the intricacies of AI authorship, intent, and liability, leading to ambiguity in determining legal protections. This grey area necessitates a careful consideration of existing legal frameworks and potential adaptations to address the specific characteristics of AI.

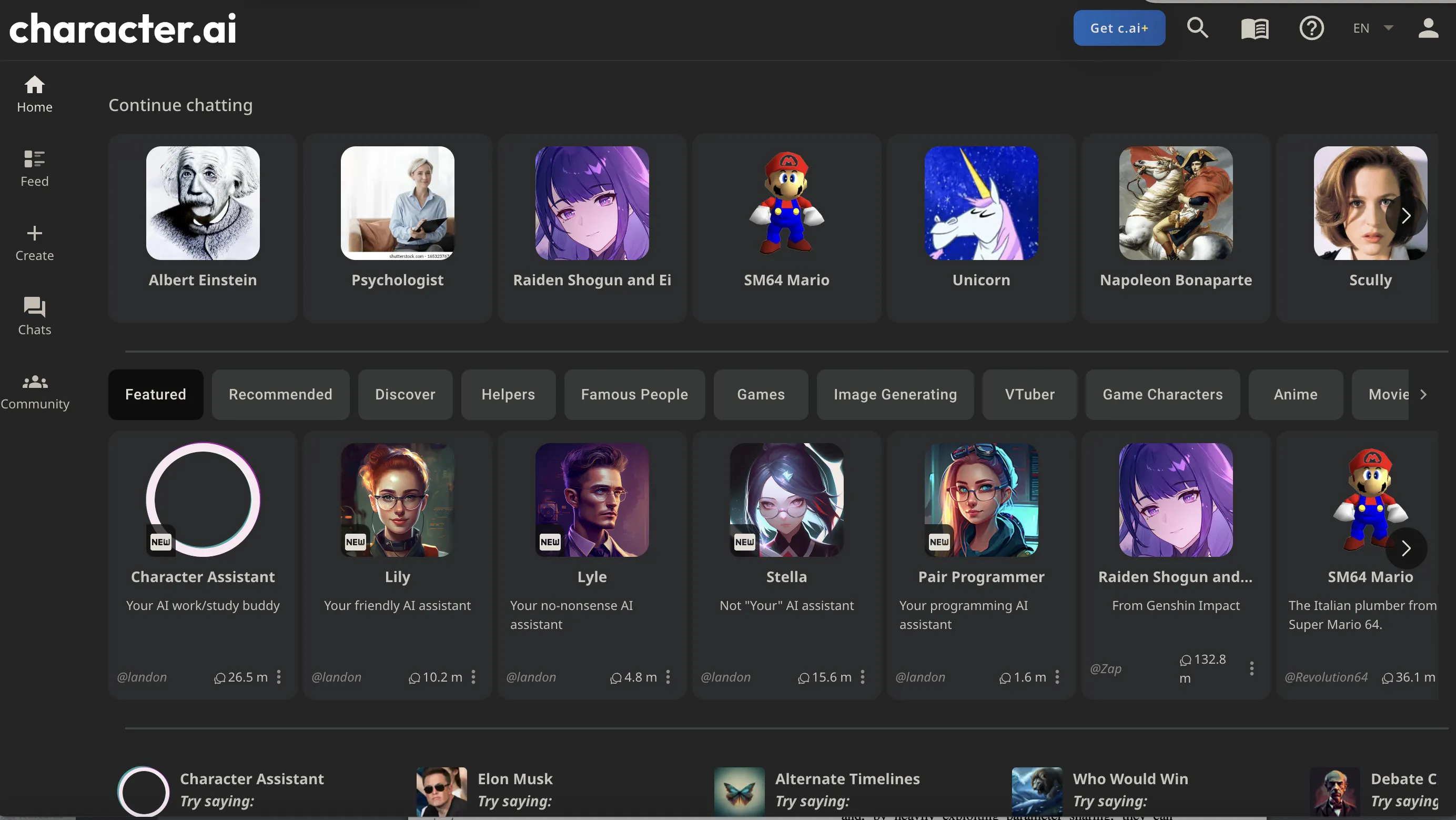

Character AI's Specific Design and Its Impact on Legal Interpretation

Character AI distinguishes itself from other chatbots through its focus on creating personalized and engaging conversational experiences. Its functionality allows users to interact with AI personas, shaping the conversation through prompts and interactions. Character AI's terms of service likely address content moderation and user responsibility but may not fully anticipate the scope of legal implications arising from its use. The role of user input is paramount; the chatbot's responses are directly influenced by the user's prompts and questions.

- Analysis of Character AI's data training and its potential impact on generated content: The datasets used to train Character AI's models influence the output significantly. Bias or harmful content present in the training data could lead to the generation of outputs that are potentially defamatory, discriminatory, or even inciteful. This raises important questions about the responsibility of developers in mitigating such risks.

- Specific examples of Character AI outputs that raise legal questions: Hypothetically, if a Character AI chatbot generated hate speech, incited violence, or disseminated false information that damaged an individual’s reputation, serious questions would arise regarding liability and potential legal recourse.

Arguments for Protected Speech

Arguments supporting the classification of Character AI chatbot outputs as protected speech often center on the AI's lack of independent intent. The AI, proponents argue, is merely a tool, and the responsibility for the generated content lies primarily with the user providing the input. Restricting AI-generated speech, they claim, could have a chilling effect on innovation and free expression in the digital realm.

- Arguments based on the principles of free expression and the right to communicate: The fundamental right to free expression extends to a wide range of media and technologies. Restricting AI-generated content, without careful consideration, might inadvertently stifle creativity and impede the development of beneficial AI technologies.

- Legal precedents supporting the protection of computer-generated content: While there is limited direct precedent concerning AI-generated content, existing legal frameworks for computer-generated works (such as copyright law) may offer some insights into the debate.

Arguments Against Protected Speech

Counterarguments assert that Character AI's outputs may not be fully protected speech, particularly if the outputs are demonstrably harmful or illegal. The platform's responsibility for potentially facilitating harmful activities cannot be entirely dismissed. The potential for misuse necessitates a careful consideration of platform liability.

- Arguments based on the potential for incitement, defamation, or other illegal activities: If Character AI's chatbots are used to generate content that incites violence, defames individuals, or engages in other illegal activities, the platform could face legal challenges related to its role in facilitating such actions.

- Legal precedents related to platform liability for user-generated content: Existing legal precedent concerning platform liability for user-generated content, such as that established in cases involving social media platforms, provides a relevant framework for considering the legal ramifications of Character AI's operation.

- Discussion of potential regulations for AI-generated content: The legal uncertainty surrounding AI-generated content necessitates the exploration of potential regulatory frameworks to address the risks while upholding fundamental freedoms of speech and expression.

Conclusion: The Evolving Legal Landscape of Character AI and the Future of AI-Generated Speech

The legal status of Character AI's chatbot outputs remains uncertain. While arguments for protected speech highlight the importance of free expression and the limitations of attributing intent to an AI, counterarguments emphasize the potential for harm and platform responsibility. The current legal ambiguity underscores the need for further legal clarification and the development of robust regulatory frameworks that address the challenges posed by AI-generated content. This necessitates a nuanced legal approach that balances free speech principles with effective measures to prevent harm.

We encourage further discussion and research on the legal implications of Character AI's chatbots and similar AI technologies. Contact legal professionals specializing in AI law and participate in relevant discussions to contribute to the evolving understanding of this crucial area. The future of AI-generated speech depends on responsible development, thoughtful regulation, and a robust public conversation around the ethical and legal ramifications of this rapidly evolving technology.

Featured Posts

-

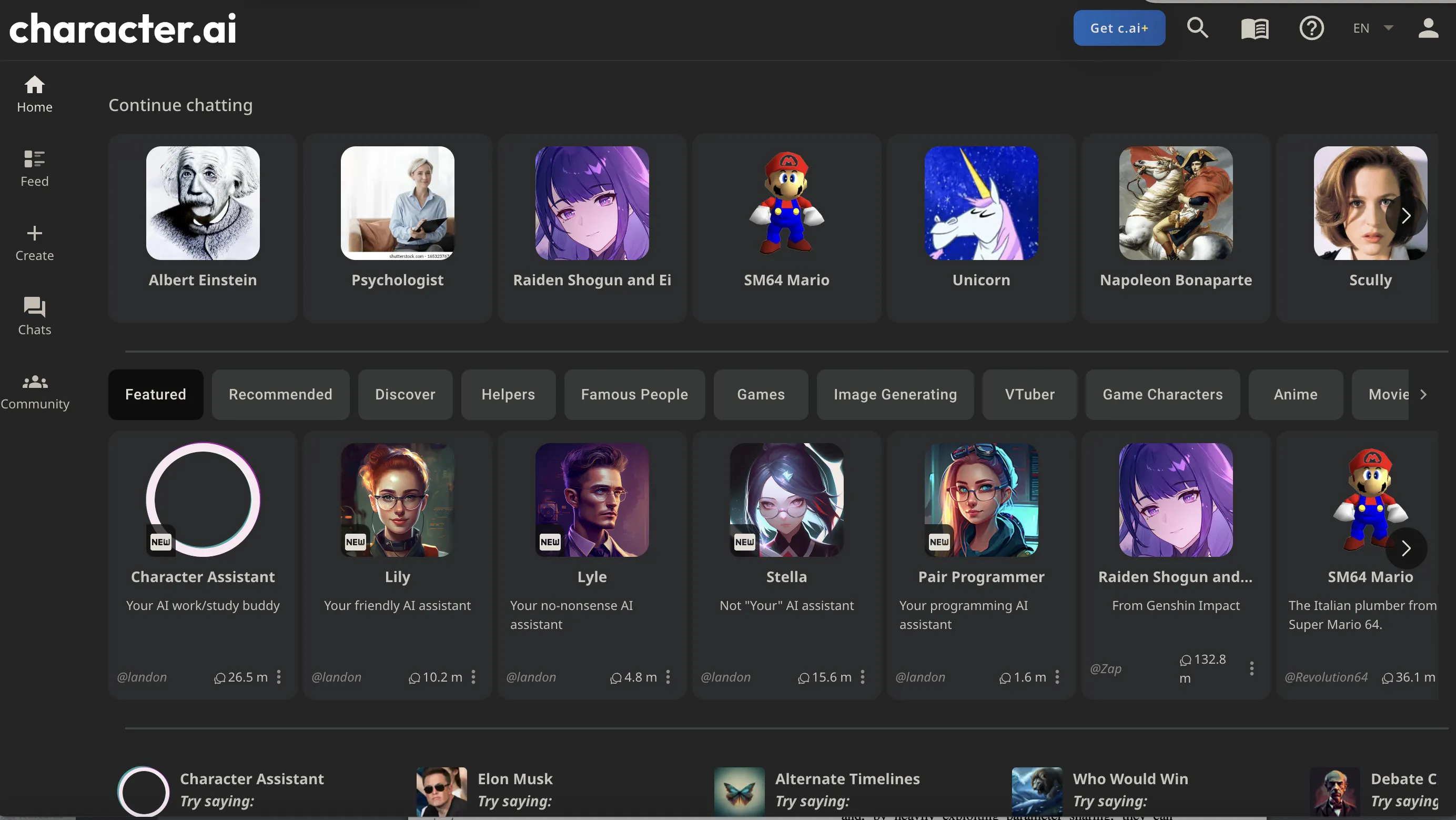

Glastonbury 2024 Unannounced Us Band Performance Confirmed

May 24, 2025

Glastonbury 2024 Unannounced Us Band Performance Confirmed

May 24, 2025 -

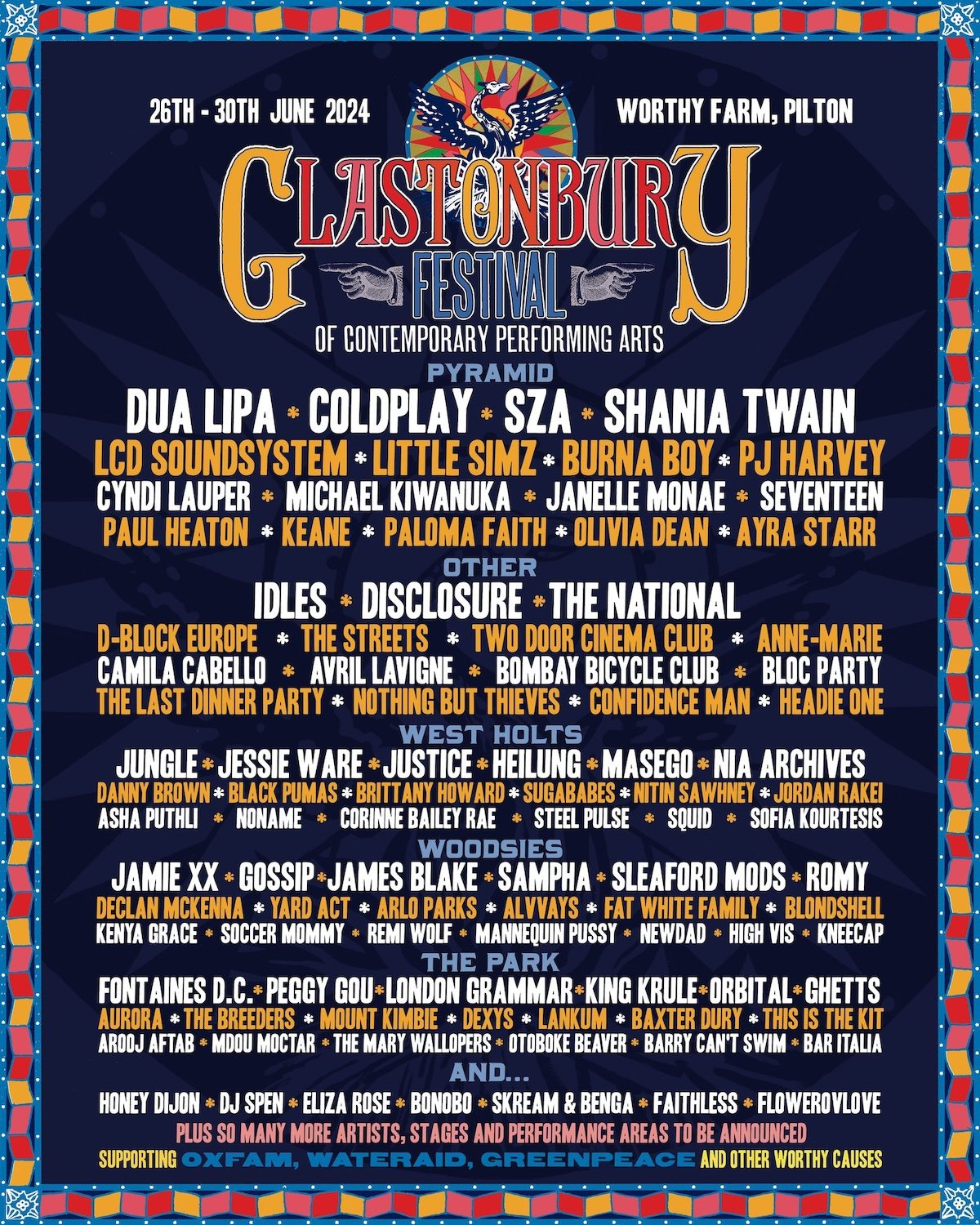

Discrepancies Revealed Former French Pm And Macrons Differing Views

May 24, 2025

Discrepancies Revealed Former French Pm And Macrons Differing Views

May 24, 2025 -

Mathieu Avanzi Le Francais Une Langue Vivante Et Accessible A Tous

May 24, 2025

Mathieu Avanzi Le Francais Une Langue Vivante Et Accessible A Tous

May 24, 2025 -

Maryland Softball Rallies Past Delaware 5 4

May 24, 2025

Maryland Softball Rallies Past Delaware 5 4

May 24, 2025 -

Pameran Seni Dan Otomotif Porsche Indonesia Classic Art Week 2025

May 24, 2025

Pameran Seni Dan Otomotif Porsche Indonesia Classic Art Week 2025

May 24, 2025

Latest Posts

-

Lowest Gas Prices In Years Expected For Memorial Day Weekend

May 24, 2025

Lowest Gas Prices In Years Expected For Memorial Day Weekend

May 24, 2025 -

Ocean City Rehoboth And Sandy Point Beach Weather Memorial Day Weekend 2025 Forecast

May 24, 2025

Ocean City Rehoboth And Sandy Point Beach Weather Memorial Day Weekend 2025 Forecast

May 24, 2025 -

Official Kermit The Frog Speaks At University Of Maryland Commencement 2025

May 24, 2025

Official Kermit The Frog Speaks At University Of Maryland Commencement 2025

May 24, 2025 -

University Of Marylands Unexpected 2025 Commencement Speaker Kermit The Frog

May 24, 2025

University Of Marylands Unexpected 2025 Commencement Speaker Kermit The Frog

May 24, 2025 -

Kermit The Frog To Address Umd Graduates In 2025 Social Media Response

May 24, 2025

Kermit The Frog To Address Umd Graduates In 2025 Social Media Response

May 24, 2025