Do Algorithms Contribute To Mass Shooter Radicalization? Examining Corporate Responsibility

The Amplifying Effect of Algorithms

Algorithms, the invisible engines driving much of the internet, are designed to optimize user engagement. However, this pursuit of engagement can inadvertently create environments that foster radicalization.

Echo Chambers and Filter Bubbles

Personalization algorithms can create echo chambers and filter bubbles that reinforce existing biases and can expose users to increasingly extreme viewpoints. Instead of encountering diverse perspectives, users mostly see content that aligns with their existing beliefs, regardless of its accuracy or potential for harm.

- Engagement prioritized over safety: Many social media platforms rank content by the engagement it generates, even when that content is harmful or promotes violence. Inflammatory posts and conspiracy theories tend to draw strong reactions, so a ranking system that rewards engagement spreads them more widely.

- Correlation between algorithm-driven content and radicalization: Studies have reported a correlation between exposure to extremist content through personalized recommendations and increased radicalization. Correlation alone does not establish causation, which is why a more nuanced understanding of how algorithms shape user behavior and beliefs is needed.

- The role of recommendation systems in pushing extreme content: Recommendation systems, a cornerstone of many online platforms, often promote content similar to what a user has already engaged with. This can lead users down a rabbit hole of increasingly extreme viewpoints, potentially contributing to radicalization.
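The trade-off between engagement and safety described in the list above can be illustrated with a toy ranking function. This is a minimal sketch, not any platform's actual ranking system: the `Post` fields, the `harm_score` signal, and the `penalty` weight are hypothetical and exist only to show how the choice of objective changes what rises to the top.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    post_id: str
    engagement: float  # hypothetical normalized engagement signal, 0..1
    harm_score: float  # hypothetical harm estimate from a classifier, 0..1


def rank_by_engagement(posts):
    """Order posts by engagement alone, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def rank_with_harm_penalty(posts, penalty=1.0):
    """Order posts by engagement minus a weighted harm estimate."""
    return sorted(
        posts,
        key=lambda p: p.engagement - penalty * p.harm_score,
        reverse=True,
    )


# Made-up values for illustration only.
posts = [
    Post("calm_news", engagement=0.40, harm_score=0.05),
    Post("conspiracy", engagement=0.90, harm_score=0.80),
    Post("hobby", engagement=0.55, harm_score=0.00),
]

print([p.post_id for p in rank_by_engagement(posts)])
print([p.post_id for p in rank_with_harm_penalty(posts)])
```

With these made-up numbers, the engagement-only ranking puts the conspiracy post first, while the penalized ranking moves it to last place. In practice the hard part is producing a trustworthy harm estimate, which this sketch simply assumes.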

Algorithmic Bias and Discrimination

Pre-existing biases in the massive datasets used to train algorithms can lead to discriminatory outcomes. This can disproportionately expose vulnerable individuals to extremist content. The lack of diversity in these datasets means certain viewpoints are underrepresented or even entirely absent, creating skewed algorithmic outputs.

- Lack of diversity in datasets: Training datasets often underrepresent certain demographics, perspectives, and experiences. This underrepresentation can bias outcomes, favoring some types of content over others.

- The impact of biased algorithms on marginalized communities: Marginalized communities are often disproportionately affected by algorithmic bias, as they may be exposed to more hate speech and extremist content due to the skewed nature of algorithmic recommendations.

- Examples of algorithmic bias amplifying hate speech: Algorithms have been shown to amplify hate speech targeting specific demographic groups. This amplification can have real-world consequences, contributing to discrimination and violence.

The Spread of Misinformation and Disinformation

Algorithms significantly impact the spread of misinformation and disinformation, creating fertile ground for radicalization. The speed and reach of online platforms, coupled with the design of algorithms, enable harmful ideologies to proliferate rapidly.

The Rapid Dissemination of Hate Speech

Algorithms facilitate the rapid spread of hate speech and conspiracy theories. This rapid dissemination can create a breeding ground for radicalization, as individuals are increasingly exposed to extremist viewpoints without sufficient counter-narratives.

- Amplified hate speech and real-world violence: In documented cases, algorithmically amplified hate speech has been linked to real-world violence and acts of extremism.

- The challenge of content moderation at scale: The sheer volume of content on online platforms makes content moderation an incredibly challenging task. Automated moderation systems struggle to keep up with the speed at which harmful content is created and shared.

- The limitations of current algorithms in identifying nuanced forms of extremist content: Current algorithms often struggle to identify subtle forms of extremist content, such as coded language or dog whistles, that are intentionally designed to evade detection.
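The detection gap described in the last point can be shown with a toy keyword filter. This is a simplified sketch under stated assumptions: `bannedterm` is a harmless placeholder, and the blocklist, the character substitution table, and both function names are hypothetical. Real moderation systems are far more elaborate, but the failure mode is the same in kind.

```python
import re

# Hypothetical placeholder standing in for a prohibited term.
BLOCKLIST = {"bannedterm"}

# Common character substitutions used to disguise words (illustrative subset).
SUBSTITUTIONS = str.maketrans({"4": "a", "3": "e", "1": "i", "0": "o", "5": "s"})


def tokenize(text):
    """Split lowercased text into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def naive_flag(text):
    """Flag text only when a token matches the blocklist exactly."""
    return any(token in BLOCKLIST for token in tokenize(text))


def normalized_flag(text):
    """Flag text after undoing simple character substitutions."""
    return any(
        token.translate(SUBSTITUTIONS) in BLOCKLIST for token in tokenize(text)
    )


print(naive_flag("a post with bannedterm"))         # exact match
print(naive_flag("a post with b4nn3dt3rm"))         # disguised spelling
print(normalized_flag("a post with b4nn3dt3rm"))    # caught after normalization
print(normalized_flag("a post with a coded hint"))  # coded phrase, no listed term
```

The exact-match filter misses the disguised spelling, and normalization recovers it. Neither function can flag the last example, because a coded phrase contains no listed term at all, which is one reason human review remains necessary.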

The Role of Deepfakes and Manipulated Media

Algorithms are increasingly used to create and spread deepfakes and manipulated media. These can be used to further radicalize individuals and incite violence by distorting reality and spreading false narratives.

- Examples of deepfakes used to spread propaganda and misinformation: Deepfakes have been used to create fabricated videos and audio recordings of political figures, making it difficult to distinguish between truth and falsehood.

- The difficulty in detecting and combating deepfakes: Detecting and combating deepfakes poses significant technological and societal challenges. The technology is constantly evolving, making it difficult for platforms to keep pace.

- The ethical implications of AI-powered media manipulation: The use of AI to create and spread manipulated media raises serious ethical concerns, particularly in the context of radicalization and incitement to violence.

Corporate Responsibility and Mitigation Strategies

Tech companies have a crucial role to play in mitigating the risks associated with algorithmic amplification of extremism. This requires a multi-pronged approach encompassing improved content moderation, media literacy initiatives, and greater accountability.

Improving Content Moderation Practices

Tech companies must invest in more robust and sophisticated content moderation systems to identify and remove extremist content. This requires a combination of technological solutions and human oversight.

- The need for human oversight in content moderation: While AI can play a role in content moderation, human oversight is crucial to ensure accuracy and address the nuances of extremist content.

- Investing in AI and machine learning for improved content detection: Continued investment in AI and machine learning is necessary to improve the ability of algorithms to identify and flag extremist content more effectively.

- The importance of transparency in content moderation policies: Tech companies must be transparent about their content moderation policies and processes to build trust and accountability.

Promoting Media Literacy and Critical Thinking

Tech companies have a responsibility to educate users on how to identify and critically evaluate online information. This helps mitigate the impact of algorithmic manipulation.

- Partnerships with educational institutions: Collaboration with educational institutions can help develop effective media literacy programs and curricula.

- Developing online resources and tools for media literacy: Tech companies can create online resources and tools to equip users with the skills to identify misinformation and propaganda.

- Promoting critical thinking skills among users: Encouraging critical thinking skills among users can help them resist manipulation and make informed decisions about the information they consume online.

Accountability and Transparency

Increased transparency and accountability mechanisms are crucial to ensure tech companies are taking responsibility for the impact of their algorithms. This demands regular audits and independent reviews.

- Regular audits of algorithmic systems: Regular audits can help identify and address biases and vulnerabilities in algorithmic systems.

- Independent reviews of content moderation practices: Independent reviews can provide an objective assessment of the effectiveness of content moderation practices.

- Increased public reporting on the prevalence of extremist content: Increased transparency in reporting on the prevalence of extremist content can help track progress and identify areas for improvement.
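One way an audit or public report might quantify prevalence is to label a random sample of content views and report the violating share with an uncertainty range. The sketch below assumes a simple random sample and uses a normal-approximation interval; the function name, its parameters, and the example figures are hypothetical and are not drawn from any platform's reporting.

```python
import math


def prevalence_estimate(violating, sample_size, z=1.96):
    """Estimate prevalence from a labeled random sample.

    Returns (point_estimate, lower_bound, upper_bound) using a
    normal-approximation interval; z=1.96 corresponds to roughly 95%.
    """
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    if not 0 <= violating <= sample_size:
        raise ValueError("violating must be between 0 and sample_size")
    p = violating / sample_size
    margin = z * math.sqrt(p * (1 - p) / sample_size)
    return p, max(0.0, p - margin), min(1.0, p + margin)


# Made-up figures: 12 violating views found in a sample of 10,000.
estimate, low, high = prevalence_estimate(12, 10_000)
print(f"prevalence: {estimate:.4%} (approx. 95% interval {low:.4%} to {high:.4%})")
```

Reporting the interval alongside the point estimate makes it easier to tell a real change between reporting periods from sampling noise. The normal approximation becomes unreliable when very few violating items appear in the sample, so a real audit would likely choose a more robust interval.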

Conclusion

The evidence suggests that algorithms, while not the sole cause, can play a significant role in the radicalization of individuals who commit mass violence. Tech companies bear a substantial corporate responsibility to mitigate these risks through improved content moderation, increased media literacy initiatives, and greater accountability and transparency. Failing to address the amplification effect of algorithms on online extremism will continue to pose a significant threat to public safety. We need proactive measures and a concerted effort to prevent the further use of algorithms to facilitate mass shooter radicalization. Let's hold tech companies accountable and demand better practices in combating the spread of online extremism through algorithmic reform.

Featured Posts

-

Metallica Announces Two Night Stand At Dublins Aviva Stadium

May 30, 2025

Metallica Announces Two Night Stand At Dublins Aviva Stadium

May 30, 2025 -

Marvel Sinner And The Importance Of Post Credit Scenes

May 30, 2025

Marvel Sinner And The Importance Of Post Credit Scenes

May 30, 2025 -

Us Imposes New Tariffs On Southeast Asian Solar Imports What You Need To Know

May 30, 2025

Us Imposes New Tariffs On Southeast Asian Solar Imports What You Need To Know

May 30, 2025 -

Alastqlal Qst Kfah Wthdy

May 30, 2025

Alastqlal Qst Kfah Wthdy

May 30, 2025 -

Trumps Ukraine Peace Prediction A Consistent Two Week Timeline

May 30, 2025

Trumps Ukraine Peace Prediction A Consistent Two Week Timeline

May 30, 2025

Latest Posts

-

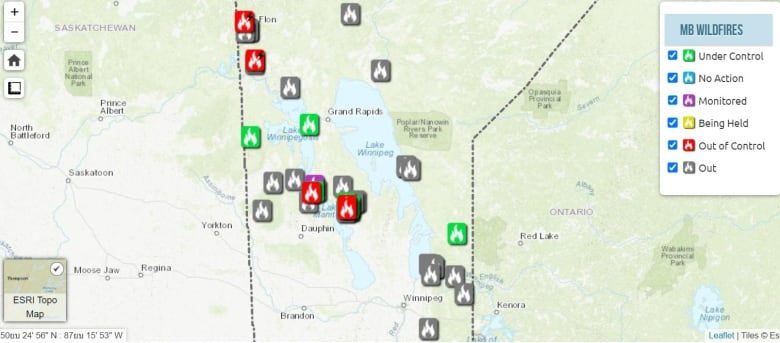

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025 -

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025 -

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025 -

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025 -

Newfoundland Wildfires Emergency Response To Widespread Destruction

May 31, 2025

Newfoundland Wildfires Emergency Response To Widespread Destruction

May 31, 2025