FTC Probe Into OpenAI: A Deeper Look At ChatGPT And AI Accountability

The FTC's Concerns Regarding ChatGPT and Data Privacy

The FTC's authority stems from its mandate to prevent unfair or deceptive business practices. The agency investigates whether companies are complying with consumer protection laws, including those related to data privacy. In the case of OpenAI and ChatGPT, the FTC is likely scrutinizing several areas related to the collection, use, and protection of user data. Training large language models (LLMs) like ChatGPT involves ingesting massive datasets, which raises several concerns:

- Concerns about the potential for biases in ChatGPT's outputs and their impact on individuals. Bias in training data can lead to discriminatory or unfair outputs, potentially impacting individuals' access to opportunities or leading to inaccurate assessments. The FTC is likely investigating whether OpenAI adequately mitigated these risks.

- Analysis of OpenAI's data privacy policies and their adequacy in protecting user information. The transparency and comprehensiveness of OpenAI's data privacy policies are under scrutiny. The FTC will likely examine whether these policies accurately reflect how user data is collected, used, and protected, and whether they comply with relevant laws like the CCPA and GDPR.

- Discussion of the potential for unauthorized data collection and usage. The FTC's investigation may probe whether OpenAI collected or used user data beyond what was disclosed in its privacy policy, potentially violating users' rights. This includes looking into whether data was used for purposes beyond what users explicitly consented to.

- Exploration of the legal framework surrounding data privacy in relation to AI systems. This investigation could help to clarify the legal responsibilities of AI companies concerning user data, particularly in the context of LLM training and operation, and define clearer boundaries for acceptable data practices. This is particularly important given the evolving nature of AI technology and data privacy regulations.

AI Accountability and the Challenges of Algorithmic Transparency

A central challenge in the FTC's investigation is the inherent opacity of complex AI models like ChatGPT. The "black box" problem refers to the difficulty of understanding how these models arrive at their outputs. This lack of transparency makes it hard to hold developers accountable for what the models produce. Key issues include:

- Examination of the potential for algorithmic bias and discrimination. As mentioned above, bias in training data can result in biased outputs. The FTC is likely analyzing ChatGPT's outputs to identify potential instances of bias and determine whether OpenAI took sufficient steps to mitigate these risks.

- Analysis of methods for increasing transparency in AI algorithms. The investigation could spur the development of new techniques for making AI algorithms more transparent and explainable, allowing for better scrutiny of their decision-making processes.

- Discussion of the need for explainable AI (XAI) to improve accountability. XAI aims to make AI decision-making processes more understandable to humans, facilitating better accountability and allowing for the identification and correction of biases or errors.

- Exploration of the role of independent audits in assessing the safety and fairness of AI systems. The FTC's investigation could lead to increased reliance on independent audits to assess the safety and fairness of AI systems, promoting greater trust and accountability. This could involve rigorous testing and evaluation by third-party experts.
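To make the idea of a fairness check concrete, the following is a minimal sketch of one metric a third-party auditor might compute: the disparate impact ratio, which compares the rate of favorable outcomes between groups. It is an illustration only. The function names, the group labels, and the sample data are hypothetical, and nothing here is described in the source as the method used by the FTC or OpenAI. A ratio well below 1.0 suggests one group receives favorable outcomes less often; the "four-fifths" threshold of 0.8 is a commonly cited heuristic from US employment selection guidelines, not a standard the source ties to this investigation.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favorable outcomes for each group.

    `records` is an iterable of (group, favorable) pairs, where
    `favorable` is True when the system's output benefited the person.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Ratio of the lowest group selection rate to the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        # No group received a favorable outcome, so there is no disparity.
        return 1.0
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, favorable outcome?)
sample = [
    ("A", True), ("A", True), ("A", False), ("A", True),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

if __name__ == "__main__":
    print(selection_rates(sample))
    print(disparate_impact_ratio(sample))
```

A single ratio like this is only a starting point. A real audit would also need to consider sample size, how "favorable" is defined for free-text outputs, and whether the groups being compared are otherwise similar.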

The Broader Implications of the OpenAI Investigation for the AI Industry

The FTC's investigation sets a crucial precedent for the regulation of AI. It signals that AI companies are not exempt from existing consumer protection laws and may face significant legal repercussions for failing to comply with them. The impact on the industry is multifaceted:

- Examination of potential regulatory frameworks for AI, including self-regulation and government oversight. The investigation will likely influence the development of regulatory frameworks for AI, balancing the need for innovation with the need to protect consumers. This may involve a mix of industry self-regulation and government oversight.

- Discussion of the ethical considerations surrounding the development and deployment of powerful AI models. The investigation highlights the ethical considerations that must be addressed when developing and deploying powerful AI models. This includes considerations around bias, transparency, and potential misuse.

- Analysis of the impact on consumer trust and the adoption of AI technologies. The outcome of the investigation will significantly impact consumer trust in AI technologies. Increased regulation and greater transparency could lead to greater trust, ultimately boosting adoption.

- Exploration of international collaborations and standards for AI governance. The FTC's investigation may lead to greater international collaboration and the development of global standards for AI governance, ensuring a more coordinated and effective approach to AI regulation.

The Future of AI Regulation: Striking a Balance Between Innovation and Accountability

The key to responsible AI development lies in finding a balance between fostering innovation and ensuring accountability. This requires a multi-pronged approach:

- Implementing robust data privacy protocols: Companies must be transparent about how they handle data and give users control over their own information.

- Developing explainable AI (XAI) techniques: Investing in XAI techniques is crucial for increasing transparency and accountability in AI systems.

- Establishing ethical guidelines and responsible AI practices: Developing and adhering to ethical guidelines is essential for building trust and ensuring that AI technologies are used ethically.

- Promoting independent audits and third-party assessments: Independent audits and assessments can help ensure that AI systems are safe, fair, and compliant with relevant regulations.

Conclusion

The FTC's probe into OpenAI and ChatGPT marks a turning point in the conversation surrounding AI accountability. The investigation highlights the urgent need for robust regulatory frameworks that address data privacy concerns, promote algorithmic transparency, and ensure responsible AI development. Understanding the complexities of AI, including potential biases and lack of transparency, is essential for navigating the ethical and legal challenges posed by these powerful technologies. Moving forward, a collaborative effort involving researchers, policymakers, and industry stakeholders is vital to establish guidelines that foster innovation while protecting individuals and society. Following the ongoing FTC probe and its implications for ChatGPT and the broader AI landscape is a practical way to stay informed about where AI accountability is headed.

Featured Posts

-

Planning A Successful Screen Free Week For Your Family

May 21, 2025

Planning A Successful Screen Free Week For Your Family

May 21, 2025 -

Trinidad Trip Curtailed Dancehall Artists Travel Restrictions And Kartels Message

May 21, 2025

Trinidad Trip Curtailed Dancehall Artists Travel Restrictions And Kartels Message

May 21, 2025 -

How To Watch Peppa Pig Cartoons Online Free Streaming Services

May 21, 2025

How To Watch Peppa Pig Cartoons Online Free Streaming Services

May 21, 2025 -

Big Bear Ai A Detailed Investment Analysis For 2024

May 21, 2025

Big Bear Ai A Detailed Investment Analysis For 2024

May 21, 2025 -

I Hope You Rot In Hell Shocking Video Captures Pub Landlords Tirade At Departing Employee

May 21, 2025

I Hope You Rot In Hell Shocking Video Captures Pub Landlords Tirade At Departing Employee

May 21, 2025

Latest Posts

-

Cultivating Resilience A Guide To Mental Strength

May 21, 2025

Cultivating Resilience A Guide To Mental Strength

May 21, 2025 -

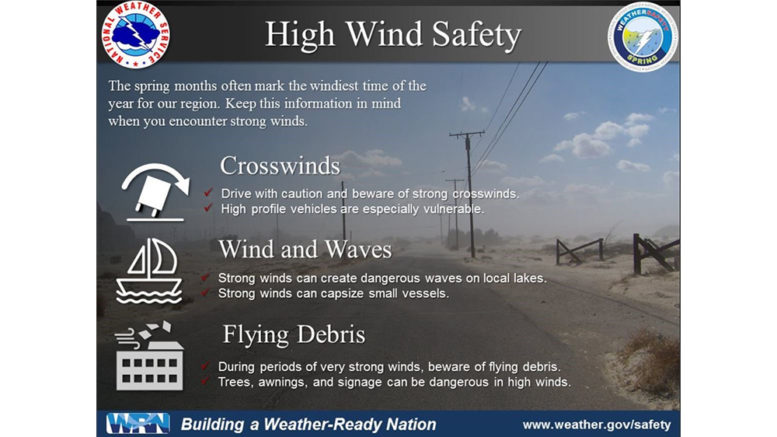

Staying Safe During Fast Moving Storms With High Winds

May 21, 2025

Staying Safe During Fast Moving Storms With High Winds

May 21, 2025 -

Bayern Munichs Bundesliga Triumph Delayed Leverkusen Victory And Kanes Absence

May 21, 2025

Bayern Munichs Bundesliga Triumph Delayed Leverkusen Victory And Kanes Absence

May 21, 2025 -

Boosting Mental Resilience Overcoming Challenges And Thriving

May 21, 2025

Boosting Mental Resilience Overcoming Challenges And Thriving

May 21, 2025 -

Damaging Winds Essential Safety Tips For Fast Moving Storms

May 21, 2025

Damaging Winds Essential Safety Tips For Fast Moving Storms

May 21, 2025