FTC Probe Into OpenAI And ChatGPT: Implications For AI Regulation

The FTC's Concerns Regarding OpenAI and ChatGPT

The Federal Trade Commission (FTC) launched an investigation into OpenAI, the creator of the widely popular chatbot ChatGPT, citing several serious concerns. The probe reflects growing awareness of the potential harms associated with large language models (LLMs). The FTC is examining several key areas:

- Data Privacy and Security: ChatGPT's training data includes vast amounts of personal information scraped from the internet. The FTC is likely concerned about the potential for data breaches, unauthorized data use, and violations of user privacy rights. Any regulation of ChatGPT that follows could require stronger data security measures.

- Bias and Discrimination: AI models like ChatGPT can inherit and amplify biases present in their training data, leading to discriminatory outputs. The FTC's investigation likely involves scrutiny of the model's potential to perpetuate harmful stereotypes and unfair outcomes based on factors like race, gender, or socioeconomic status.

- Misinformation and False Narratives: ChatGPT's ability to generate convincingly realistic text raises concerns about the spread of misinformation and propaganda. The FTC is likely examining what OpenAI does to mitigate this risk.

- Lack of Transparency: The opacity surrounding OpenAI's data collection and model training processes is another critical area of concern. The FTC aims to determine how transparent OpenAI's practices are and whether the company adequately informs users about how their data is used.

The legal basis for the investigation likely rests on existing consumer protection law, notably Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices. Potential consequences for OpenAI range from financial penalties to mandatory changes in its data handling and model development practices. Whatever the outcome, it is likely to set a significant precedent for other AI companies.

The Broader Implications for AI Development and Deployment

The FTC's scrutiny of OpenAI has far-reaching implications for the future of AI. The AI regulation landscape is evolving rapidly, and this investigation is likely to accelerate the development of stricter safety standards and regulations.

- Increased Scrutiny of AI Datasets and Training Methodologies: The investigation highlights the need for careful curation and auditing of the datasets used to train AI models, so that bias is identified and reduced and diverse populations are represented fairly.

- Mandatory AI Audits and Compliance Requirements: We can expect increased calls for mandatory audits and compliance requirements for AI systems, particularly those with the potential for significant societal impact.

- Greater Emphasis on Ethical AI Development Principles: The FTC's actions underscore the necessity of integrating ethical considerations into the entire AI development lifecycle, from data collection to deployment and ongoing monitoring.

- Slowdown in the Deployment of Large Language Models (LLMs): The regulatory uncertainty and potential for legal challenges might lead to a cautious approach to deploying new LLMs, prioritizing safety and ethical considerations over rapid innovation.

This increased scrutiny could create a "chilling effect" on AI innovation and investment, although some argue that responsible regulation is necessary to ensure AI technology is used ethically and beneficially.

Potential Future Regulatory Frameworks for AI

The FTC's investigation is pushing AI regulation to the forefront of public debate. Existing and proposed regulations, such as the EU's AI Act, offer models for future approaches, including:

- Risk-based approaches: Focusing on the regulation of high-risk AI applications, like those used in healthcare or criminal justice.

- Principle-based approaches: Establishing overarching ethical principles for AI development and deployment, allowing for flexibility and innovation while adhering to core values.

- Sector-specific regulations: Tailoring regulations to specific industries, considering the unique risks and benefits of AI within each sector.

However, creating effective AI regulation faces significant challenges:

- The rapid pace of AI development makes it difficult for regulations to keep up.

- The international nature of AI research and deployment necessitates global cooperation.

- Defining and measuring AI risk remains a complex and ongoing challenge.

The Role of Transparency and Accountability in AI Systems

Transparency and accountability are paramount in building trust and ensuring the responsible use of AI. This means:

- Transparency in AI algorithms and decision-making processes: Users should understand how AI systems arrive at their conclusions and recommendations, particularly in high-stakes situations.

- Mechanisms of accountability for AI-driven outcomes: Clear lines of responsibility must be established when AI systems cause harm or make incorrect decisions.

- Explainable AI (XAI): The development and deployment of XAI techniques are critical for building trust and understanding in AI systems.

The benefits of increased transparency include:

- Improved user understanding of AI systems.

- Enhanced ability to identify and address biases.

- Increased user control over personal data used by AI systems.

Conclusion: Navigating the Future of AI Regulation After the OpenAI Probe

The FTC's probe into OpenAI and ChatGPT has significant implications for the future of AI regulation. The investigation underscores the urgent need for comprehensive guidelines addressing data privacy, bias mitigation, transparency, and accountability in AI systems. Responsible AI development and deployment are no longer only ethical imperatives; they are becoming legal necessities. The outcome of this probe will shape how AI is developed, deployed, and regulated for years to come, influencing everything from the regulation of ChatGPT itself to the broader landscape of AI ethics.

Featured Posts

-

Benjamin Kaellman Huuhkajien Uusi Taehti Kasvu Kentillae Ja Sen Ulkopuolella

May 20, 2025

Benjamin Kaellman Huuhkajien Uusi Taehti Kasvu Kentillae Ja Sen Ulkopuolella

May 20, 2025 -

Key Stats And Fast Facts About Wayne Gretzkys Hockey Career

May 20, 2025

Key Stats And Fast Facts About Wayne Gretzkys Hockey Career

May 20, 2025 -

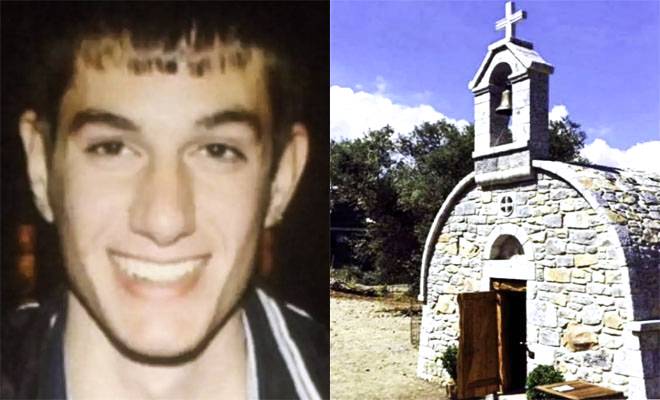

Baggelis Giakoymakis I Tragodia Toy 20xronoy To Bullying Kai Oi Vasanismoi

May 20, 2025

Baggelis Giakoymakis I Tragodia Toy 20xronoy To Bullying Kai Oi Vasanismoi

May 20, 2025 -

Sabalenkas Winning Debut At The Madrid Open

May 20, 2025

Sabalenkas Winning Debut At The Madrid Open

May 20, 2025 -

Cote D Ivoire Le Port D Abidjan Accueille Le Plus Grand Navire De Son Histoire

May 20, 2025

Cote D Ivoire Le Port D Abidjan Accueille Le Plus Grand Navire De Son Histoire

May 20, 2025