FTC Probe Into OpenAI: Implications For AI Regulation And The Future Of ChatGPT

The FTC's Concerns Regarding OpenAI and ChatGPT

The FTC, tasked with protecting consumers from unfair or deceptive business practices, has launched an investigation into OpenAI's practices. This signifies a growing recognition of the potential harms associated with powerful AI models like ChatGPT. The agency's concerns regarding OpenAI fall under that consumer-protection mandate and are multifaceted.

The FTC's specific concerns likely revolve around several key areas:

- Data privacy breaches and potential violations of COPPA (Children's Online Privacy Protection Act): The vast amounts of data used to train ChatGPT raise concerns about potential privacy violations, particularly concerning children's data. The lack of transparency in data collection and usage practices is a major point of contention. OpenAI's data handling must adhere to strict regulations like COPPA to protect minors' privacy.
- Algorithmic bias and its discriminatory impact: AI models like ChatGPT can inherit and amplify biases present in their training data, leading to discriminatory outcomes. This bias can manifest in various ways, from perpetuating harmful stereotypes to unfairly disadvantaging certain groups. Addressing algorithmic bias is crucial for ensuring fairness and equity in AI applications.
- Misinformation and the spread of false narratives through ChatGPT: ChatGPT's ability to generate human-quality text raises concerns about its potential for misuse in spreading misinformation and propaganda. The ease with which convincingly false information can be generated poses a significant challenge to combating disinformation.
- Lack of transparency in OpenAI’s data handling and model training processes: The lack of transparency surrounding OpenAI's data sources and model training methods hinders independent audits and assessments of potential risks. Increased transparency is vital for building public trust and enabling responsible oversight of AI technologies.

Implications for AI Regulation

The FTC investigation into OpenAI has far-reaching implications for the future of AI regulation worldwide. It signals a growing global movement towards stricter oversight of AI development and deployment. The investigation will likely influence the development and enforcement of existing and proposed regulations such as the EU AI Act, which aims to establish a comprehensive regulatory framework for AI systems.

This increased scrutiny will likely lead to:

- Increased scrutiny of AI model development and deployment processes: Expect more rigorous testing and evaluation procedures before AI models are released to the public.
- Potential for stricter data privacy regulations and increased user control over data: Users will likely have greater control over their data and how it's used to train AI models.
- The need for robust mechanisms to address algorithmic bias and ensure fairness: Developing and implementing methods for detecting and mitigating bias in AI models will be crucial.
- Development of standardized ethical guidelines for AI development: Industry-wide standards and ethical guidelines will become more essential to ensuring responsible AI development and deployment.

The Future of ChatGPT and Similar AI Models

The FTC probe is likely to reshape how ChatGPT and other large language models (LLMs) are developed, deployed, and used. The emphasis on accountability and transparency may also push AI companies to adjust their business models.

These changes may include:

- Increased emphasis on user privacy and data security: OpenAI and other AI developers will likely prioritize robust data security measures and transparent data handling practices.
- Development of more robust methods to detect and mitigate bias: Advanced techniques for identifying and mitigating biases in training data and model outputs will become necessary.
- Implementation of better safeguards against misuse and malicious applications: Mechanisms to prevent the misuse of LLMs for malicious purposes, such as generating deepfakes or spreading misinformation, will be essential.
- Potential for stricter regulations on the use of AI in sensitive areas (e.g., healthcare, finance): The use of AI in high-stakes applications will be subject to stricter regulations and oversight.

Responses from OpenAI and the Tech Industry

OpenAI has publicly committed to cooperating with the FTC investigation and addressing the agency's concerns. The company's response will likely shape how it develops and deploys AI models going forward. The broader tech industry is also reacting to this increased regulatory scrutiny. Many companies are actively investing in research on AI safety and developing their own internal ethical guidelines.

Key aspects of the industry response include:

- OpenAI’s commitment to addressing the FTC’s concerns: OpenAI is expected to implement changes in its data handling practices, bias mitigation strategies, and transparency measures.
- Statements from other major tech companies regarding AI safety and regulation: Major tech players are increasingly acknowledging the need for responsible AI development and are publicly supporting greater regulation.
- Industry initiatives aimed at promoting responsible AI development: Collaborative efforts across the tech industry to establish industry best practices and ethical guidelines are gaining momentum.

Conclusion: Navigating the Future of AI with Responsible Development – The FTC Probe and ChatGPT

The FTC probe into OpenAI serves as a critical turning point in the development and regulation of AI. The investigation highlights the significant risks associated with powerful AI models like ChatGPT, emphasizing the urgent need for responsible AI development and robust regulatory frameworks. The potential impact on ChatGPT and similar LLMs is substantial, requiring increased transparency, accountability, and a commitment to mitigating biases and preventing misuse. The future of AI hinges on a collaborative effort between regulators, developers, and users to navigate the ethical and societal challenges posed by this transformative technology. Stay informed about the ongoing FTC investigation and actively participate in discussions about AI regulation, ChatGPT safety, and responsible AI development to shape a future where AI benefits all of humanity.

Featured Posts

-

Les Pressions Environnementales Maritimes Sous Tension Geopolitique Credit Mutuel Am

May 19, 2025

Les Pressions Environnementales Maritimes Sous Tension Geopolitique Credit Mutuel Am

May 19, 2025 -

Trumps Winning Coalition Fracturing Ahead Of 2024

May 19, 2025

Trumps Winning Coalition Fracturing Ahead Of 2024

May 19, 2025 -

Chateau Diy Projects Easy Tutorials And Inspiration

May 19, 2025

Chateau Diy Projects Easy Tutorials And Inspiration

May 19, 2025 -

Nyt Mini Crossword Answers Today March 24 2025 Hints And Clues

May 19, 2025

Nyt Mini Crossword Answers Today March 24 2025 Hints And Clues

May 19, 2025 -

Symvoylio Efeton Dodekanisoy I Simasia Ton 210 Enorkon Sto Mikto Orkoto Efeteio

May 19, 2025

Symvoylio Efeton Dodekanisoy I Simasia Ton 210 Enorkon Sto Mikto Orkoto Efeteio

May 19, 2025

Latest Posts

-

Ftc Monopoly Case Against Meta The Defense Begins

May 19, 2025

Ftc Monopoly Case Against Meta The Defense Begins

May 19, 2025 -

Ftc Trial Update Meta Shifts Focus To Defense Strategy

May 19, 2025

Ftc Trial Update Meta Shifts Focus To Defense Strategy

May 19, 2025 -

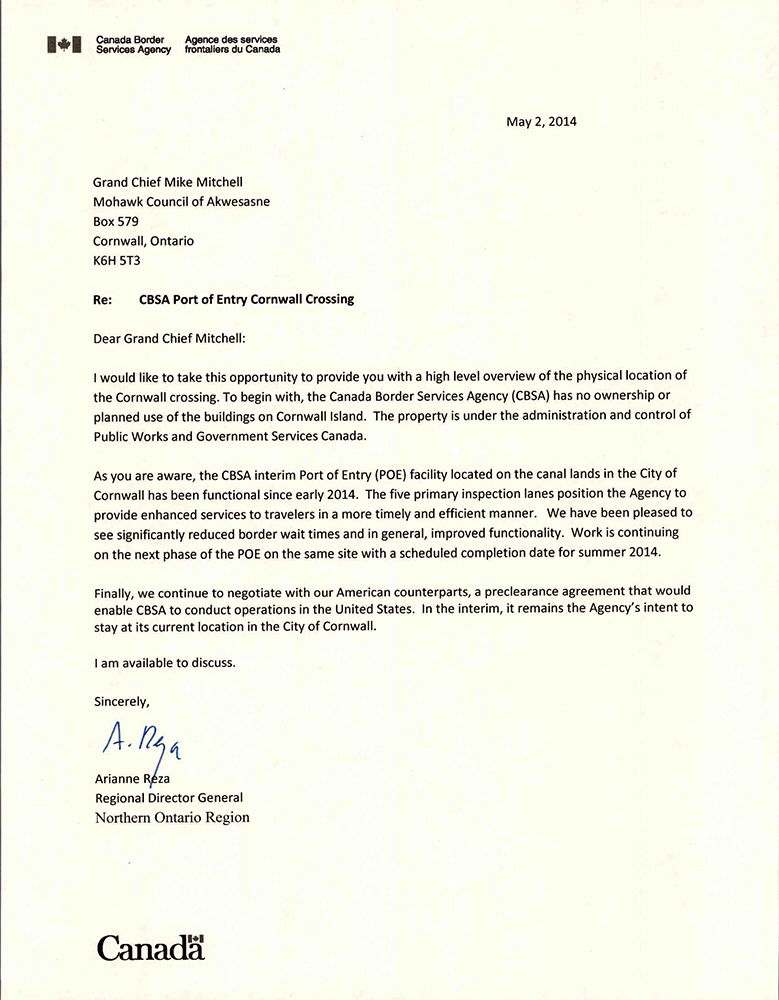

220 Million Lawsuit Shakes Kahnawake Casino Owners Sue Mohawk Council And Grand Chief

May 19, 2025

220 Million Lawsuit Shakes Kahnawake Casino Owners Sue Mohawk Council And Grand Chief

May 19, 2025 -

Metas Monopoly Defense Takes Center Stage In Ftc Trial

May 19, 2025

Metas Monopoly Defense Takes Center Stage In Ftc Trial

May 19, 2025 -

Kahnawake Casino Dispute 220 Million Lawsuit Filed

May 19, 2025

Kahnawake Casino Dispute 220 Million Lawsuit Filed

May 19, 2025