Google vs. OpenAI: A Deep Dive into I/O and io Differences


Understanding I/O and io in AI Systems

Defining I/O (Input/Output):

In the context of AI, I/O refers to the flow of data into and out of an AI system. This encompasses data ingestion, processing, and the generation of outputs. Consider these examples:

- Image Recognition: Input: an image file; Output: a classification label (e.g., "cat," "dog"). Efficient I/O here means quickly loading the image and delivering the classification result.

- Natural Language Processing (NLP): Input: a sentence in English; Output: a translation in Spanish. Efficient I/O involves fast text processing and output generation.

- Speech Recognition: Input: an audio file; Output: transcribed text. Here, fast audio processing and text output are crucial for efficient I/O.

Effective I/O management directly translates to a better user experience, especially in real-time applications.

The Significance of Efficient io Operations:

Efficient input/output operations are paramount for optimal AI model performance. Key factors include:

- Latency: The time it takes for the system to respond to an input request. Lower latency leads to faster response times.

- Throughput: The amount of data processed per unit of time. Higher throughput means more efficient processing of large datasets.
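Both metrics can be measured directly. The minimal Python sketch below times a stand-in workload; `fake_inference` is a hypothetical placeholder for a real model call, and its 1 ms sleep is an arbitrary value chosen only to simulate work.

```python
import time
from typing import Callable, List, Tuple


def fake_inference(x: int) -> int:
    """Hypothetical stand-in for a model call; the sleep simulates work."""
    time.sleep(0.001)
    return x * 2


def measure(fn: Callable[[int], int], inputs: List[int]) -> Tuple[float, float]:
    """Run fn on each input sequentially.

    Returns (mean latency in seconds, throughput in requests per second).
    """
    latencies: List[float] = []
    start = time.perf_counter()
    for x in inputs:
        t0 = time.perf_counter()
        fn(x)
        latencies.append(time.perf_counter() - t0)  # per-request latency
    elapsed = time.perf_counter() - start
    mean_latency = sum(latencies) / len(latencies)
    throughput = len(inputs) / elapsed  # completed requests per second of wall time
    return mean_latency, throughput


if __name__ == "__main__":
    lat, tput = measure(fake_inference, list(range(100)))
    print(f"mean latency: {lat * 1000:.2f} ms, throughput: {tput:.0f} req/s")
```

Because the requests here run one after another, throughput is roughly the inverse of latency; batching or parallelism is what allows throughput to grow without lowering per-request latency.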

Hardware and software optimizations play a critical role. For example, using high-speed storage (such as SSDs) and employing parallel processing techniques can substantially improve io efficiency.
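To illustrate the parallel-processing point, the sketch below overlaps I/O-bound work using a thread pool from Python's standard library. `simulated_read` is a hypothetical stand-in for a storage read (for example, fetching one file from disk or object storage), and the 50 ms delay is an assumed value; real speedups depend on the storage backend.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


def simulated_read(key: int) -> bytes:
    """Hypothetical I/O-bound read; the sleep models storage or network latency."""
    time.sleep(0.05)
    return f"record-{key}".encode()


def read_sequential(keys: List[int]) -> List[bytes]:
    # One read at a time: total time is roughly len(keys) * per-read latency.
    return [simulated_read(k) for k in keys]


def read_parallel(keys: List[int], workers: int = 8) -> List[bytes]:
    # Threads suit I/O-bound work because the GIL is released while waiting.
    # executor.map returns results in the same order as the input keys.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(simulated_read, keys))


if __name__ == "__main__":
    keys = list(range(16))
    for name, fn in [("sequential", read_sequential), ("parallel", read_parallel)]:
        t0 = time.perf_counter()
        fn(keys)
        print(f"{name}: {time.perf_counter() - t0:.2f} s")
```

Threads are a reasonable choice for waiting on I/O; CPU-heavy preprocessing would instead call for processes or a framework that parallelizes natively.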

- I/O bottlenecks can significantly impact AI model response times, leading to frustrating user experiences.

- Efficient io management is crucial for handling large datasets, which are common in modern AI applications. Inefficient io can lead to significant delays in training and inference.

- Different AI systems prioritize and optimize for various I/O characteristics, reflecting differing design goals and target applications.
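For datasets too large to load at once, a common pattern is to stream data in fixed-size chunks so that memory use stays bounded. The sketch below assumes a local file and uses SHA-256 hashing as an example consumer; the 1 MiB chunk size is an arbitrary example value.

```python
import hashlib
import os
import tempfile
from typing import Iterator

CHUNK_SIZE = 1 << 20  # 1 MiB; an arbitrary example value


def iter_chunks(path: str, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield a file's contents chunk by chunk instead of reading it all at once."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:  # empty bytes means end of file
                break
            yield chunk


def sha256_of_file(path: str) -> str:
    # Only one chunk is held in memory at a time, regardless of file size.
    digest = hashlib.sha256()
    for chunk in iter_chunks(path):
        digest.update(chunk)
    return digest.hexdigest()


if __name__ == "__main__":
    with tempfile.NamedTemporaryFile(delete=False) as tmp:
        tmp.write(os.urandom(3 * CHUNK_SIZE + 123))
    print(sha256_of_file(tmp.name))
    os.remove(tmp.name)
```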

Google's Approach to I/O and io

Google's Cloud Infrastructure and I/O:

Google Cloud Platform (GCP) boasts a robust infrastructure designed to handle massive I/O demands. Key services facilitating this include:

- Google Cloud Storage: Offers scalable and durable object storage for various data types, enabling efficient data ingestion and retrieval.

- BigQuery: A highly scalable, serverless data warehouse that allows for rapid querying and analysis of massive datasets. This is crucial for efficient data processing within the I/O pipeline.

- Cloud Dataflow: A fully managed, unified stream and batch data processing service that can handle complex data pipelines for optimized I/O.
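Cloud Dataflow runs pipelines written with the Apache Beam SDK, in which the same transforms can be applied to bounded (batch) and unbounded (streaming) input. The stdlib-only sketch below imitates that idea with Python generators; it is not the Beam API, and `parse`, `is_valid`, and `event_stream` are hypothetical examples.

```python
import json
from typing import Iterable, Iterator


def parse(line: str) -> dict:
    """Hypothetical transform: decode one JSON record."""
    return json.loads(line)


def is_valid(record: dict) -> bool:
    """Hypothetical filter: keep records that carry a numeric 'value' field."""
    return isinstance(record.get("value"), (int, float))


def pipeline(lines: Iterable[str]) -> Iterator[float]:
    # Lazily chains parse -> filter -> map, so the same code works on a
    # finite list (batch) or an open-ended generator (stream).
    for line in lines:
        record = parse(line)
        if is_valid(record):
            yield record["value"] * 2


def event_stream() -> Iterator[str]:
    """Hypothetical unbounded source, standing in for a message queue."""
    n = 0
    while True:
        yield json.dumps({"value": n})
        n += 1


if __name__ == "__main__":
    batch = ['{"value": 1}', '{"value": "bad"}', '{"value": 2.5}']
    print(list(pipeline(batch)))  # batch: process every record
    stream = pipeline(event_stream())
    print([next(stream) for _ in range(3)])  # stream: consume incrementally
```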

TensorFlow and I/O Optimization:

TensorFlow, Google's popular machine learning framework, incorporates features specifically designed to address I/O challenges. These include:

- Data Pipelines: Efficiently manage the flow of data from storage to the model, minimizing bottlenecks.

- Parallel Processing: Distribute I/O operations across multiple cores or machines to improve throughput.

- Optimized I/O Libraries: Provide optimized routines for reading and writing data in various formats.

- GCP offers scalable solutions for large-scale I/O operations, making it suitable for handling massive datasets and high-throughput applications.

- TensorFlow's optimized I/O libraries and data pipeline tools can significantly improve the performance of AI models.

- Google prioritizes efficient data management and retrieval, leading to robust and performant I/O systems.
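In TensorFlow, these pipeline features are exposed through the `tf.data` API, where an input pipeline typically chains `Dataset.map(..., num_parallel_calls=tf.data.AUTOTUNE)`, `Dataset.batch(...)`, and `Dataset.prefetch(tf.data.AUTOTUNE)`. To keep the example dependency-free, the sketch below reproduces those three stages with the Python standard library rather than TensorFlow; `decode` is a hypothetical per-example preprocessing step.

```python
import queue
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def decode(x: int) -> int:
    """Hypothetical per-example preprocessing, e.g. reading and decoding one file."""
    time.sleep(0.01)  # simulated I/O cost
    return x * x


def parallel_map(fn: Callable[[T], U], items: Iterable[T], workers: int = 4) -> Iterator[U]:
    """Analogue of map(..., num_parallel_calls=...): apply fn concurrently, keep order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map submits every item up front, so this suits finite inputs.
        yield from pool.map(fn, items)


def batch(items: Iterable[T], size: int) -> Iterator[List[T]]:
    """Analogue of batch(size): group consecutive elements; the last batch may be short."""
    buf: List[T] = []
    for item in items:
        buf.append(item)
        if len(buf) == size:
            yield buf
            buf = []
    if buf:
        yield buf


def prefetch(items: Iterable[T], buffer_size: int = 2) -> Iterator[T]:
    """Analogue of prefetch(n): a background thread keeps up to buffer_size elements
    ready while the consumer (e.g. a training step) handles the current one."""
    q: queue.Queue = queue.Queue(maxsize=buffer_size)

    def producer() -> None:
        try:
            for item in items:
                q.put((True, item))
        except Exception as exc:
            q.put((False, exc))  # forward the error to the consumer
        else:
            q.put((False, None))  # normal end of input

    threading.Thread(target=producer, daemon=True).start()
    while True:
        ok, value = q.get()
        if ok:
            yield value
        elif value is None:
            return
        else:
            raise value


if __name__ == "__main__":
    pipeline = prefetch(batch(parallel_map(decode, range(10)), size=4))
    for b in pipeline:
        print(b)
```

The design mirrors the usual ordering advice for input pipelines: parallelize the expensive per-example step, batch the results, and prefetch last so that data preparation overlaps with consumption.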

OpenAI's Approach to I/O and io

OpenAI's API and I/O Interactions:

OpenAI primarily interacts with users through its API. Developers send requests containing input data (e.g., text prompts, images) and receive structured outputs (e.g., generated text, image classifications). This abstracts away much of the underlying I/O complexity.

- The API handles data serialization, transmission, and deserialization, simplifying the developer experience.

- Input/output formats are generally well-defined and standardized, promoting interoperability.
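To make the serialization step concrete, the sketch below builds the JSON body for a Chat Completions request and parses a response using only the standard library. It assumes the `https://api.openai.com/v1/chat/completions` endpoint, its `model` and `messages` request fields, and the `choices[0].message.content` response field; the model name and `YOUR_API_KEY` are placeholders, and the sample response is a hand-written fixture, not real API output. OpenAI's official SDKs perform these steps for you.

```python
import json
import urllib.request
from typing import Any, Dict

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, api_key: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Serialize a prompt into an HTTP POST request (constructed, not sent)."""
    body = {
        "model": model,  # example model name; substitute the one you use
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def extract_text(raw_response: bytes) -> str:
    """Deserialize a response body and pull out the generated text."""
    payload: Dict[str, Any] = json.loads(raw_response)
    return payload["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_request("Translate 'cat' to Spanish.", api_key="YOUR_API_KEY")
    print(req.get_method(), req.full_url)
    # Hand-written fixture shaped like a response body; not real API output.
    sample = b'{"choices": [{"message": {"role": "assistant", "content": "gato"}}]}'
    print(extract_text(sample))
    # Sending for real requires network access and a valid key:
    # with urllib.request.urlopen(req) as resp:
    #     print(extract_text(resp.read()))
```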

OpenAI's Focus on Model Performance vs. Raw I/O:

While OpenAI's API handles I/O, its primary focus is often on model performance and accuracy. Optimizations might prioritize computational efficiency over raw I/O speed in some scenarios. This means that while the API is user-friendly, fine-grained control over I/O parameters may be limited compared to GCP.

- OpenAI's API simplifies interaction with powerful AI models, making them accessible to a wider range of developers.

- The API handles much of the underlying I/O complexity, allowing developers to focus on model application rather than infrastructure management.

- OpenAI's focus on model optimization may limit I/O performance in specific scenarios, especially when dealing with extremely large datasets or high-frequency requests, which are subject to the API's rate limits.
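When request rates are high, clients commonly run into rate limits, which OpenAI's API signals with HTTP 429 responses. A standard mitigation is to retry with exponential backoff and jitter. The sketch below is generic: `RateLimited` and `make_flaky` are hypothetical stand-ins, not classes or functions from OpenAI's SDK, and the delay values are arbitrary defaults.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error a client might raise when the server answers HTTP 429."""


def with_backoff(
    fn: Callable[[], T],
    max_retries: int = 5,
    base_delay: float = 0.5,
    max_delay: float = 30.0,
) -> T:
    """Call fn, retrying on RateLimited with capped exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimited:
            if attempt == max_retries:
                raise  # out of retries: surface the error to the caller
            # The delay ceiling doubles per attempt (base, 2*base, 4*base, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** attempt)
            # A random fraction of the ceiling keeps many clients from retrying in lockstep.
            time.sleep(random.uniform(0, ceiling))
    raise AssertionError("unreachable")


def make_flaky(failures: int) -> Callable[[], str]:
    """Hypothetical call that raises RateLimited `failures` times, then returns "ok"."""
    state = {"calls": 0}

    def call() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RateLimited()
        return "ok"

    return call


if __name__ == "__main__":
    print(with_backoff(make_flaky(2), base_delay=0.01))
```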

Comparing Google and OpenAI: I/O and io Performance

Benchmarking and Key Differences:

Direct benchmarking of Google and OpenAI's I/O performance is difficult due to the differences in their architectures and intended use cases. Google's solutions are often geared towards large-scale data processing and handling massive datasets, while OpenAI's API prioritizes ease of use and access to powerful models.
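Because published comparisons rarely match a given workload, a practical alternative is to benchmark your own requests against each platform. A minimal harness might record per-request latencies and report tail percentiles, since averages hide slow outliers. The sketch below uses the nearest-rank percentile definition; the callable passed as `send_request` is a hypothetical placeholder for whichever client call is being measured.

```python
import math
import time
from typing import Callable, Dict, List


def percentile(sorted_samples: List[float], p: float) -> float:
    """Nearest-rank percentile of a non-empty ascending list (0 < p <= 100)."""
    if not sorted_samples:
        raise ValueError("need at least one sample")
    rank = math.ceil(p * len(sorted_samples) / 100)
    return sorted_samples[max(rank, 1) - 1]


def benchmark(send_request: Callable[[], object], n: int = 50) -> Dict[str, float]:
    """Time n sequential calls and summarize latency in milliseconds."""
    latencies: List[float] = []
    for _ in range(n):
        t0 = time.perf_counter()
        send_request()
        latencies.append(time.perf_counter() - t0)
    latencies.sort()
    return {
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
        "max_ms": latencies[-1] * 1000,
    }


if __name__ == "__main__":
    # Hypothetical stand-in: replace the lambda with a real client call.
    print(benchmark(lambda: time.sleep(0.002), n=20))
```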

Scalability and Resource Requirements:

Google's infrastructure is built to scale to massive I/O workloads. The scalability of OpenAI's API depends on underlying infrastructure that OpenAI does not expose to users, so it is less transparent. Resource requirements also differ greatly: Google's solutions may require significant infrastructure investment, whereas OpenAI's API handles much of the infrastructure burden.

- Consider the type of application when comparing I/O performance: For large-scale data processing, Google's solutions are often superior. For simpler applications requiring access to powerful models, OpenAI's API may be more convenient.

- Scalability needs vary depending on the task and dataset size: Google's infrastructure scales better to handle extremely large datasets.

- Both platforms offer strengths in different aspects of I/O: Google excels in raw I/O speed and scalability, while OpenAI excels in user-friendliness and access to advanced models.

Conclusion:

This deep dive into Google's and OpenAI's approaches to I/O and io reveals significant differences stemming from their distinct architectures and priorities. Google emphasizes scalable infrastructure and efficient data management, while OpenAI focuses on simplifying user interaction with powerful models. The choice between them depends heavily on the specific application requirements and the balance needed between I/O performance and model accuracy. Understanding these nuances is crucial for choosing the right AI platform for your projects; for further detail, consult each platform's official documentation.

Featured Posts

-

China Us Trade Export Boom Driven By Approaching Trade Agreement

May 25, 2025

China Us Trade Export Boom Driven By Approaching Trade Agreement

May 25, 2025 -

Conquering Your Fears At Dr Terrors House Of Horrors

May 25, 2025

Conquering Your Fears At Dr Terrors House Of Horrors

May 25, 2025 -

Significant Delays On M6 Southbound Following Collision

May 25, 2025

Significant Delays On M6 Southbound Following Collision

May 25, 2025 -

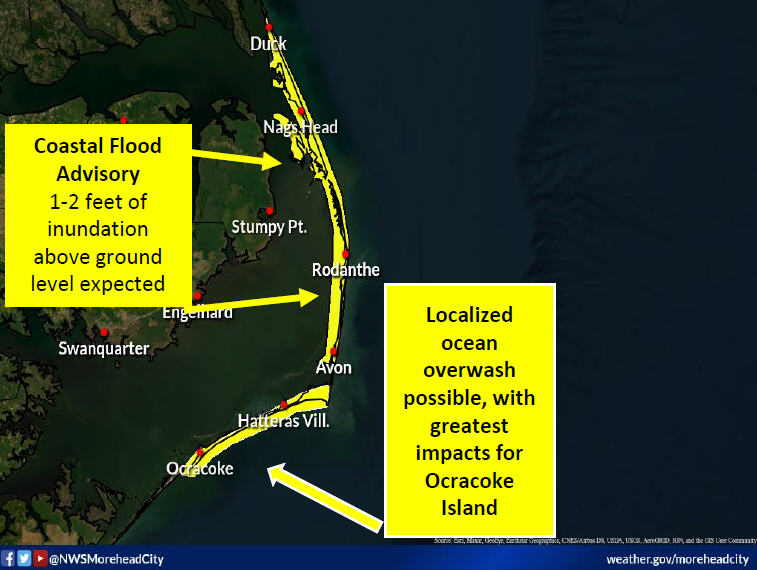

Urgent Coastal Flood Advisory In Effect For Southeast Pa Wednesday

May 25, 2025

Urgent Coastal Flood Advisory In Effect For Southeast Pa Wednesday

May 25, 2025 -

Humoriste Transformiste Zize En Spectacle Graveson 4 Avril

May 25, 2025

Humoriste Transformiste Zize En Spectacle Graveson 4 Avril

May 25, 2025