Investigating The Link Between Algorithmic Radicalization And Mass Shootings

The Mechanisms of Algorithmic Radicalization

Algorithmic radicalization, the process by which algorithms contribute to the adoption of extremist views, operates through several insidious mechanisms. Understanding these mechanisms is crucial to addressing the problem effectively.

Echo Chambers and Filter Bubbles

Algorithms designed to personalize content often create echo chambers, reinforcing pre-existing beliefs and limiting exposure to diverse perspectives. This curated experience can amplify extremist views and create filter bubbles that isolate individuals within radical online communities.

- Increased exposure to extremist content: Users are consistently shown content aligning with their initial biases, regardless of its veracity or extremism.

- Reduced exposure to counter-narratives: Opposing viewpoints are shown less often, giving users fewer chances to critically evaluate their beliefs.

- Confirmation bias reinforcement: Algorithms reinforce pre-existing biases, making it difficult for users to objectively assess information.

- Examples: Facebook's newsfeed algorithm, YouTube's recommendation system, and Twitter's trending topics have all been implicated in creating echo chambers and filter bubbles that can contribute to algorithmic radicalization. These platforms prioritize engagement, often at the expense of balanced information presentation.
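The feedback loop described in the points above can be illustrated with a toy model. The sketch below is not any platform's actual algorithm: the topic names, the weighting rule, and the `simulate_feed_share` function are all assumptions made for illustration. It assumes a feed that shows each topic in proportion to a weight, a user who clicks only items from one preferred topic, and a system that raises a topic's weight by the number of clicks it receives.

```python
# Toy model of a personalization feedback loop (illustrative assumptions only).
TOPICS = ["news", "sports", "science", "politics_a", "politics_b"]


def simulate_feed_share(preferred, rounds=5, feed_size=10):
    """Return the preferred topic's expected share of the feed in each round."""
    weights = {topic: 1.0 for topic in TOPICS}  # every topic starts equal
    shares = []
    for _ in range(rounds):
        # The feed shows each topic in proportion to its current weight.
        share = weights[preferred] / sum(weights.values())
        shares.append(share)
        # Assumption: the user clicks every preferred-topic item shown and
        # nothing else, and each click adds 1 to that topic's weight.
        weights[preferred] += share * feed_size
    return shares


if __name__ == "__main__":
    shares = simulate_feed_share("politics_a")
    for round_number, share in enumerate(shares, start=1):
        print(f"round {round_number}: {share:.0%} of the feed")
```

Under these assumptions the preferred topic starts at one fifth of the feed and its share rises every round, while the other four topics are shown less and less even though the user never asked to exclude them.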

Recommendation Systems and Radicalization Pathways

Recommendation systems, designed to suggest relevant content, can inadvertently lead users down a "rabbit hole" of increasingly extreme material. The process is often gradual and subtle, so it can contribute to radicalization without the user's conscious awareness. It is sometimes described as "adjacent radicalization": exposure to seemingly benign content leads, step by step, to more extreme material.

- The "adjacent radicalization" phenomenon: Users start with relatively moderate content and are progressively exposed to increasingly extreme views through algorithmic suggestions.

- Escalation of engagement with violent content: Algorithms can identify and suggest increasingly violent and extreme content, escalating user engagement with harmful ideologies.

- Personalized content shaping extremist beliefs: Tailored recommendations reinforce existing beliefs and introduce users to new, extremist perspectives, leading to the formation and solidification of harmful ideologies.

- Case studies: Research has examined how YouTube's recommendation system can lead users from mainstream videos to extremist content. Similar patterns have been observed on other platforms, highlighting the need for improved algorithmic design and content moderation.
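The step-by-step drift described above can be sketched in a few lines. This is a deliberately simplified model, not a description of any real recommender: the 0 to 10 "intensity" scale, the `window` parameter, and the rule that predicted engagement rises with intensity are all hypothetical assumptions chosen to show the mechanism.

```python
# Toy "adjacent recommendation" walk (illustrative assumptions only).
CATALOG = range(0, 11)  # hypothetical intensity levels: 0 = mild, 10 = extreme


def assumed_engagement(level):
    """Assumption: more intense content is predicted to be more engaging."""
    return level


def next_recommendation(current, catalog=CATALOG, window=1):
    """Pick the most engaging item among those close to the current one."""
    candidates = [
        level for level in catalog
        if level != current and abs(level - current) <= window
    ]
    return max(candidates, key=assumed_engagement)


def watch_path(start, steps):
    """Follow the top recommendation `steps` times, starting from `start`."""
    path = [start]
    for _ in range(steps):
        path.append(next_recommendation(path[-1]))
    return path


if __name__ == "__main__":
    print(watch_path(start=2, steps=5))
```

Each recommendation is only one level away from the item before it, so no single step looks like a large jump, yet the path moves steadily toward the extreme end of the scale. The result depends entirely on the assumed engagement rule; a different rule would produce a different path.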

The Role of Social Media Platforms in Algorithmic Radicalization

Social media platforms play a pivotal role in the spread of extremist ideologies, often unintentionally facilitated by their algorithms. Addressing this requires a multi-faceted approach.

Platform Responsibility and Content Moderation

Social media platforms face immense challenges in moderating content effectively, particularly in identifying and removing extremist material before it reaches vulnerable individuals. The continual evolution of extremist language and tactics creates an ongoing "arms race" between moderators and those seeking to spread hateful ideologies.

- Limitations of current content moderation techniques: Current methods often struggle to keep pace with the evolving tactics of extremist groups.

- Challenges in detecting subtle forms of radicalization: Identifying early signs of radicalization online is complex and requires sophisticated detection systems.

- Ethical considerations of censorship and free speech: Balancing the need to prevent harm with the protection of free speech remains a significant challenge for platforms.

- Improving platform accountability: Greater transparency and accountability mechanisms are needed to ensure platforms are taking responsibility for the content hosted on their sites.

The Spread of Misinformation and Conspiracy Theories

Social media algorithms often prioritize engagement metrics over accuracy, inadvertently amplifying the spread of misinformation and conspiracy theories. These narratives can contribute to a climate of distrust and anger, potentially fueling violent extremism.

- Impact of misinformation on radicalization: False narratives and conspiracy theories can contribute to feelings of anger, resentment, and frustration, creating a fertile ground for extremist ideologies.

- Role of social media in creating and spreading conspiracy theories: Social media algorithms can quickly amplify misinformation and conspiracy theories, reaching vast audiences and normalizing extremist views.

- Examples: Studies have linked the spread of misinformation on social media to real-world violence and conflict.

- Strategies for combating misinformation: Fact-checking initiatives, media literacy education, and improved algorithmic design are crucial to mitigating the spread of misinformation.
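The difference between ranking by engagement alone and ranking with accuracy taken into account can be shown with a small example. The posts, their scores, and the blending rule (engagement multiplied by accuracy) are hypothetical values chosen for illustration; real ranking systems are far more complex and their details are not described in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Post:
    title: str
    predicted_engagement: float  # hypothetical score in [0, 1]
    accuracy: float              # hypothetical fact-check score in [0, 1]


def rank_by_engagement(posts):
    """Order posts by predicted engagement only."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_with_accuracy(posts):
    """Order posts by engagement discounted by accuracy (one possible blend)."""
    return sorted(
        posts,
        key=lambda p: p.predicted_engagement * p.accuracy,
        reverse=True,
    )


# Made-up example data.
POSTS = [
    Post("verified_report", predicted_engagement=0.40, accuracy=0.95),
    Post("conspiracy_claim", predicted_engagement=0.90, accuracy=0.10),
    Post("opinion_piece", predicted_engagement=0.60, accuracy=0.70),
]

if __name__ == "__main__":
    print([p.title for p in rank_by_engagement(POSTS)])
    print([p.title for p in rank_with_accuracy(POSTS)])
```

With these made-up scores, the engagement-only ranking puts the conspiracy claim first, while the accuracy-weighted ranking moves it to last place. Multiplying the two scores is only one of many possible blends and is used here because it is easy to follow.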

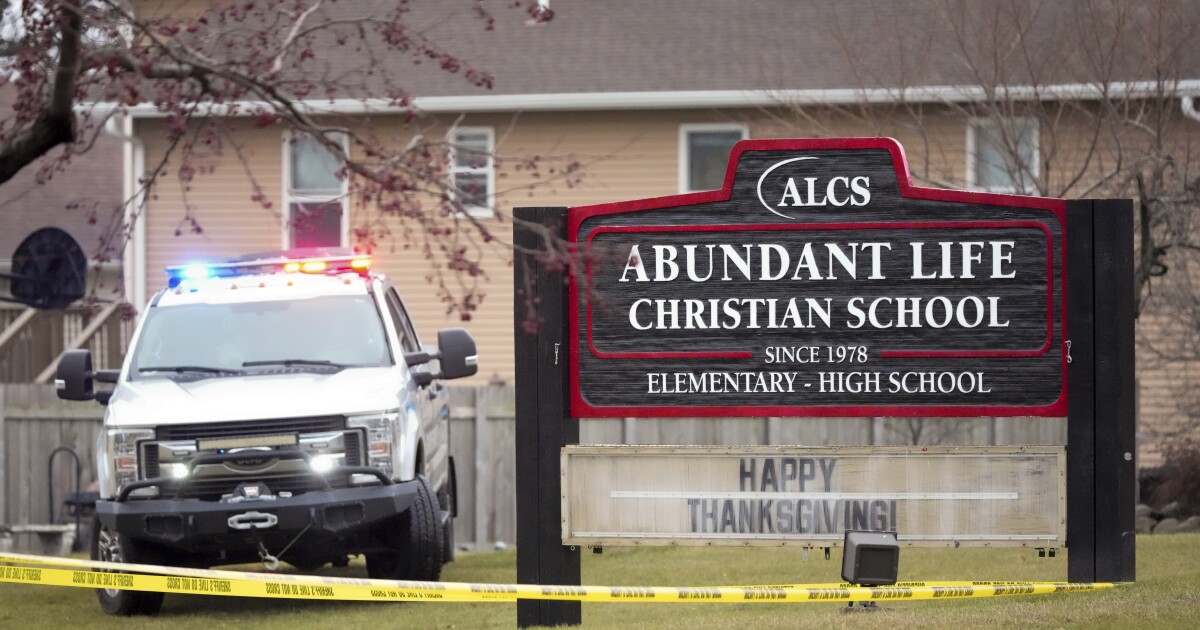

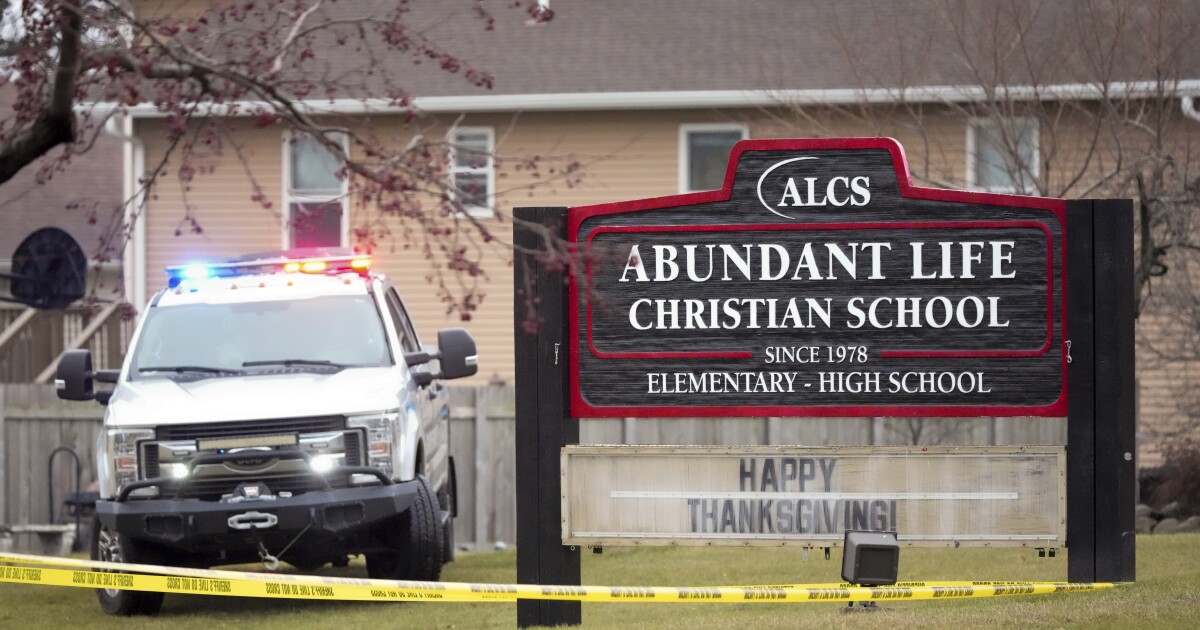

Connecting Algorithmic Radicalization to Mass Shootings

Establishing a direct causal link between algorithmic radicalization and mass shootings is complex, requiring careful analysis and consideration of multiple factors. However, a growing body of research suggests a strong correlation between exposure to extremist content online and an increased risk of violent acts.

Case Studies and Empirical Evidence

Specific instances still require further research and analysis. However, studies are emerging that explore the online activity of perpetrators of mass shootings and identify patterns in their engagement with extremist content and online communities. This research can provide crucial empirical evidence linking online radicalization to real-world acts of violence.

- Analysis of online activity of perpetrators: Researchers are examining the digital footprints of individuals who committed mass shootings to identify patterns in their exposure to extremist ideologies online.

- Identification of patterns in the use of social media and extremist content: Analyzing data on social media usage can reveal patterns and trends that help understand how algorithms contribute to radicalization.

- Discussion of the limitations of connecting online activity to real-world actions: It's crucial to acknowledge that correlation does not equal causation, and other factors must be considered when analyzing the link between online radicalization and real-world violence.

The Psychological Impact of Online Radicalization

Algorithmic radicalization doesn't just passively expose individuals to extremist views; it actively shapes their psychological state, contributing to the potential for violence.

- Algorithms contributing to the dehumanization of others: Algorithms can reinforce existing biases and contribute to the dehumanization of out-groups, increasing the likelihood of violent acts.

- Role of online communities in reinforcing extremist ideologies: Online echo chambers can create environments where extremist views are validated and reinforced, leading to radicalization.

- Psychological factors contributing to violent behavior: Understanding the psychological mechanisms underlying violent extremism is crucial to developing effective interventions.

Conclusion

The link between algorithmic radicalization and mass shootings is a complex and evolving issue demanding urgent attention. While more research is needed to fully understand the nuanced relationship, the evidence suggests a strong correlation between exposure to extremist content online, facilitated by algorithms, and the increased risk of violent acts. Social media platforms bear significant responsibility in mitigating the spread of extremist ideologies and combating algorithmic radicalization. We must demand increased transparency, improved content moderation strategies, and a greater focus on promoting media literacy to prevent future tragedies. Further investigation into the algorithmic radicalization process is crucial to developing effective countermeasures and protecting vulnerable individuals from the dangers of online extremism. We need to act now to curb the harmful effects of algorithmic radicalization.
