Is Your Tech Stack Holding Back Your AI Ambitions?

Data Storage and Management: The Foundation of Your AI Infrastructure

Efficient data storage and management are paramount for any successful AI project. AI models depend on data, and more relevant, high-quality data generally yields better insights. However, managing vast quantities of data presents significant challenges. Scaling data storage for AI workloads requires careful planning and the right infrastructure. Without both, AI initiatives tend to stall before they deliver value.

Challenges often include:

- Lack of scalable cloud storage solutions: Relying on outdated on-premise solutions can quickly become a bottleneck.

- Inefficient data pipelines leading to bottlenecks: Slow data ingestion and processing severely impact training times.

- Difficulties in data integration from various sources: Consolidating data from disparate sources is crucial for comprehensive AI analysis.

- Limited data versioning and management capabilities: Tracking changes and managing different versions of your data is essential for reproducibility and auditing.

Solutions include adopting cloud-based data lakes, leveraging robust data warehousing solutions like Snowflake or BigQuery, and implementing data versioning tools to maintain data integrity and traceability. Investing in a robust data management system is a crucial first step in building a strong AI tech stack.
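As a rough illustration of what data versioning means in practice, the sketch below derives a version identifier from the contents of a dataset directory, so any added, removed, or changed file produces a new version. The function name `fingerprint_dataset` and the manifest layout are assumptions made for this example; dedicated versioning tools offer far more than this.

```python
import hashlib
import json
from pathlib import Path


def fingerprint_dataset(data_dir: Path) -> dict:
    """Build a manifest of file hashes plus a short version id (hypothetical helper)."""
    files = {}
    for path in sorted(data_dir.rglob("*")):
        if path.is_file():
            # Reads the whole file into memory; very large files would need chunked hashing.
            digest = hashlib.sha256(path.read_bytes()).hexdigest()
            files[path.relative_to(data_dir).as_posix()] = digest
    # Hash the sorted manifest so the version id depends only on file names and contents.
    manifest_bytes = json.dumps(files, sort_keys=True).encode("utf-8")
    version = hashlib.sha256(manifest_bytes).hexdigest()[:12]
    return {"version": version, "files": files}
```

Storing the returned manifest next to each trained model is one simple way to record exactly which data a model was trained on.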

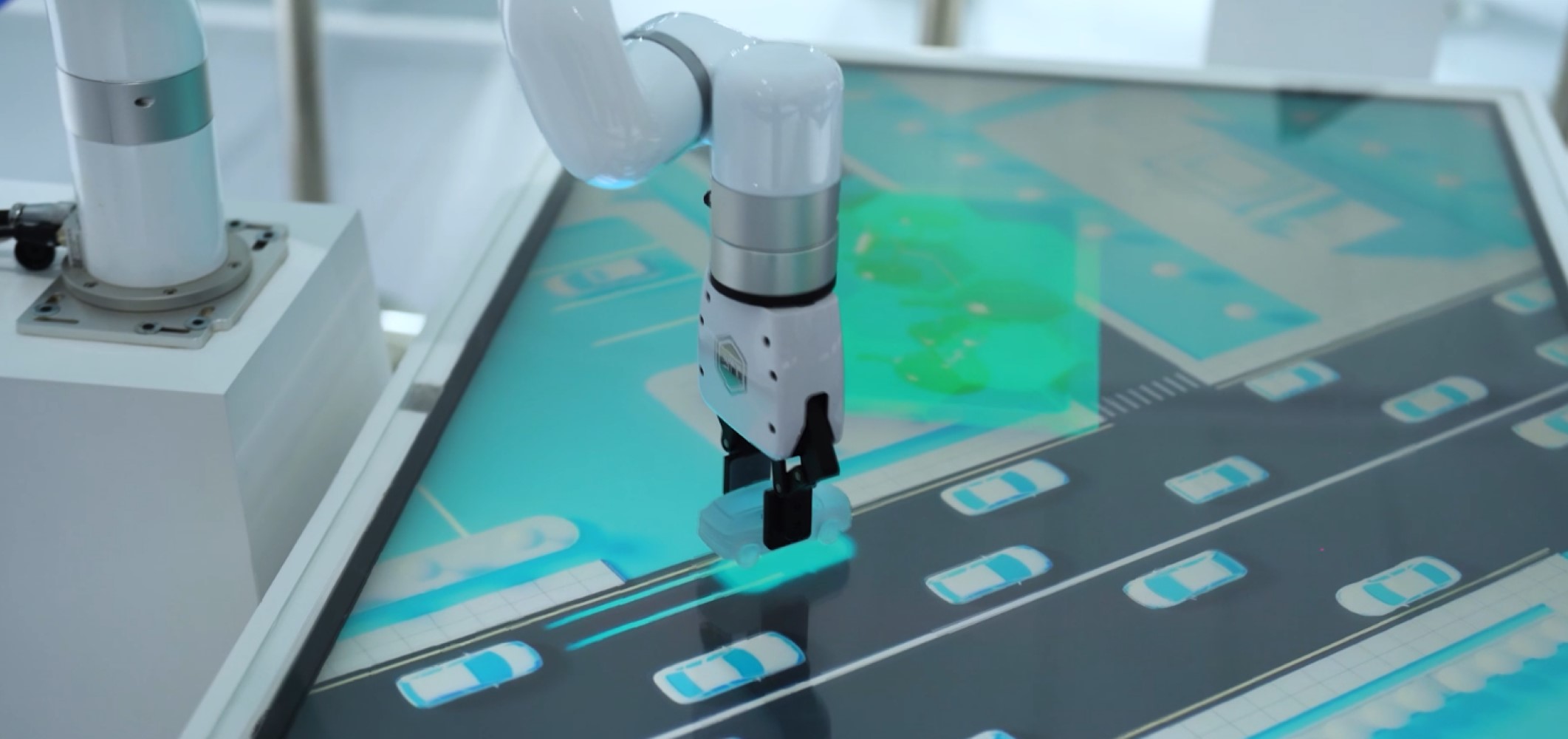

Computational Power and Scalability: Meeting the Demands of AI

AI algorithms, particularly deep learning models, are computationally intensive. Training complex models requires significant processing power, and limited resources can severely impact training time and model performance. Scalability is equally crucial: as datasets grow, the infrastructure behind your AI models must handle increasing workloads.

The consequences of insufficient computational resources include:

- Insufficient CPU/GPU resources for model training: Leading to excessively long training times and potentially hindering model accuracy.

- Lack of scalability for handling growing datasets: Inability to handle larger datasets limits the potential insights.

- Challenges in deploying AI models to production environments: Models trained on powerful hardware may not perform optimally in less resource-rich production environments.

- Limited options for parallel processing: Restricting the ability to speed up training and inference.

To address these challenges, consider cloud computing platforms like AWS, Azure, and GCP, which offer scalable compute resources on demand. Specialized AI hardware, such as GPUs and TPUs, can significantly accelerate training. Containerization with Docker, combined with an orchestrator such as Kubernetes, facilitates efficient deployment and management of AI models across various environments.
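To make the parallel-processing point concrete, here is a minimal sketch that splits a workload into batches and processes them concurrently with Python's standard `concurrent.futures` module. The helper names `chunked` and `parallel_map_batches` are hypothetical, and threads are used only for simplicity: they suit I/O-bound work such as calling a remote inference service, while CPU-bound preprocessing would more likely use a process pool or a distributed framework.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Sequence


def chunked(items: Sequence, size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def parallel_map_batches(
    fn: Callable[[Sequence], List],
    items: Sequence,
    batch_size: int = 32,
    max_workers: int = 4,
) -> List:
    """Apply `fn` to each batch concurrently and flatten the results in input order."""
    batches = list(chunked(items, batch_size))
    results: List = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in the order the batches were submitted.
        for batch_result in pool.map(fn, batches):
            results.extend(batch_result)
    return results
```

For example, `parallel_map_batches(score_batch, records)` would fan a list of records out across four workers, where `score_batch` stands in for whatever batch-level function your stack provides.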

AI/ML Frameworks and Libraries: Choosing the Right Tools for the Job

Selecting the appropriate AI/ML frameworks and libraries is vital for efficient development and deployment. The choice depends largely on project requirements, developer expertise, and integration needs. Using outdated or incompatible frameworks can lead to a multitude of problems.

Consider these potential pitfalls:

- Incompatibility between different frameworks and tools: Choosing disparate frameworks can create integration challenges and hinder workflow efficiency.

- Lack of support for cutting-edge AI algorithms: Using older frameworks might limit access to the latest advancements in AI.

- Difficulty in finding skilled developers for specific frameworks: Choosing less common frameworks can make finding and retaining talent challenging.

- Limited integration with other parts of the tech stack: Poor integration can severely hamper the overall AI system's functionality.

Popular frameworks include TensorFlow, PyTorch, and scikit-learn. The best choice depends on your project’s specific needs and the skills of your development team. Careful consideration of these factors is key to selecting the right tools for your AI journey.
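One way to limit the cost of a framework choice, sketched here under the assumption that your models can be wrapped behind a single method, is to have the rest of the stack depend on a small interface instead of a specific library. The `Predictor` protocol, the `MeanBaseline` stand-in, and `score_rows` are all illustrative names, not part of any framework.

```python
from typing import List, Protocol, Sequence


class Predictor(Protocol):
    """Minimal interface the rest of the stack relies on (hypothetical)."""

    def predict(self, rows: Sequence[Sequence[float]]) -> List[float]:
        ...


class MeanBaseline:
    """Toy stand-in model: predicts the mean of each input row."""

    def predict(self, rows: Sequence[Sequence[float]]) -> List[float]:
        return [sum(row) / len(row) for row in rows]


def score_rows(model: Predictor, rows: Sequence[Sequence[float]]) -> List[float]:
    # Any object with a matching predict() method works here, whether it
    # wraps a TensorFlow, PyTorch, or scikit-learn model internally.
    return model.predict(rows)
```

With this shape, swapping frameworks means writing a new wrapper class while the calling code stays the same.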

Integration and Interoperability: A Seamless AI Ecosystem

A successful AI implementation requires seamless integration between different components of your tech stack. This includes integrating AI systems with existing business applications, such as CRM, ERP, and other enterprise systems. Without proper integration, your AI solutions will remain isolated and unable to provide maximum value.

Difficulties in integration can manifest in several ways:

- Difficulties in integrating AI models with existing CRM, ERP, and other systems: Models that cannot read from or write back to these systems have little effect on day-to-day business processes.

- Lack of APIs and standardized interfaces for data exchange: Without standardized interfaces, data exchange becomes cumbersome and error-prone.

- Challenges in managing data security and privacy across integrated systems: Maintaining security and compliance is critical when integrating diverse systems.

- Siloed data preventing holistic AI analysis: A fragmented data landscape hinders the ability to draw comprehensive insights.

Solutions include designing API-driven architectures, adopting a microservices approach, utilizing standardized data formats like JSON or Parquet, and implementing robust security measures to protect sensitive data.
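As a small sketch of a standardized JSON interface, the example below validates an incoming scoring request and builds a response, using only the standard library. The field names `customer_id`, `features`, and `score`, and the functions `parse_request` and `build_response`, are assumptions chosen for illustration; a real contract would follow whatever schema your CRM or ERP integration defines.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class ScoreRequest:
    customer_id: str
    features: List[float]


def parse_request(payload: str) -> ScoreRequest:
    """Parse and validate a JSON request body (hypothetical schema)."""
    data = json.loads(payload)
    if not isinstance(data, dict):
        raise ValueError("request body must be a JSON object")
    if not isinstance(data.get("customer_id"), str):
        raise ValueError("customer_id must be a string")
    features = data.get("features")
    # bool is a subclass of int in Python, so reject it explicitly.
    if not isinstance(features, list) or not all(
        isinstance(x, (int, float)) and not isinstance(x, bool) for x in features
    ):
        raise ValueError("features must be a list of numbers")
    return ScoreRequest(customer_id=data["customer_id"], features=[float(x) for x in features])


def build_response(request: ScoreRequest, score: float) -> str:
    """Serialize the result with stable key order for easier logging and diffing."""
    return json.dumps({"customer_id": request.customer_id, "score": score}, sort_keys=True)
```

Rejecting malformed input at the boundary keeps errors from propagating into the model or back into the calling system.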

Conclusion: Optimizing Your Tech Stack for AI Success

Building a robust and scalable AI infrastructure requires careful consideration of several critical components. An inadequate tech stack can lead to significant challenges in data management, computational limitations, framework incompatibility, and integration difficulties. These issues can result in slower development cycles, higher costs, and ultimately, a limited return on investment.

By strategically selecting and integrating the right tools and infrastructure (efficient data storage, sufficient computational resources, suitable AI/ML frameworks, and interoperable systems), businesses can overcome these hurdles and unlock the full potential of their AI ambitions. A well-architected tech stack can deliver improved scalability, better performance, lower costs, and a higher ROI. Assess your current tech stack today and identify areas for improvement. Don't let a suboptimal tech stack hold back your AI journey.
