OpenAI's ChatGPT: The FTC Investigation And Future Of AI Development

The FTC Investigation: Allegations and Concerns

The FTC investigation into OpenAI's ChatGPT centers on several key areas of concern, raising critical questions about the future of AI development and its impact on society.

Data Privacy and Security Concerns

ChatGPT's sophisticated capabilities are fueled by massive datasets used during its training. This raises significant data privacy and security concerns. The FTC is likely scrutinizing OpenAI's practices regarding the collection, use, and protection of user data.

- Potential Privacy Violations: Unauthorized collection and use of personal information shared during user interactions.

- Data Breaches: Vulnerabilities in the system that could expose sensitive user data to malicious actors.

- Lack of Transparency: Insufficient disclosure regarding data usage practices and potential risks to users.

These concerns highlight the need for robust data protection measures, compliance with regulations like GDPR and CCPA, and a greater focus on user data privacy within AI development. The ethical implications of utilizing vast amounts of data for AI training require careful consideration and transparent practices.

Misinformation and Bias in AI Models

Another central concern of the FTC investigation is the potential for ChatGPT to generate biased or inaccurate information. Large language models like ChatGPT learn from the data they are trained on, which may contain biases reflecting societal inequalities.

- Biased Outputs: The model may produce responses that perpetuate harmful stereotypes or discriminate against certain groups.

- Spread of Misinformation: ChatGPT can be used to generate convincing but false information, contributing to the spread of misinformation and disinformation online.

- Difficulty in Mitigation: Identifying and mitigating bias in AI models is a complex challenge requiring ongoing research and development.

Addressing AI bias and the spread of misinformation requires algorithmic accountability and methods to detect and correct biased outputs. The FTC's investigation underscores the importance of responsible AI development practices that minimize the risk of these harmful outcomes. Related issues such as deepfakes and algorithmic accountability point to the broader societal impacts at stake.

Consumer Protection Issues

The FTC is also likely examining consumer protection aspects related to ChatGPT. The use of AI technologies raises questions about transparency, deception, and the potential for harm to consumers.

- Deceptive Practices: The use of AI to generate misleading or deceptive content, potentially harming consumers.

- Lack of Transparency: A lack of clear disclosure regarding the use of AI in generating content, making it difficult for consumers to assess the reliability of the information.

- Need for Clear Disclosures: Regulations may require clear labeling of AI-generated content to prevent consumer confusion and manipulation.

The FTC's investigation highlights the need for greater transparency and clearer consumer protections in the rapidly evolving AI landscape. Establishing clear guidelines for responsible AI use is vital for safeguarding consumer rights.

Implications for OpenAI and the AI Industry

The FTC investigation carries significant implications for OpenAI and the broader AI industry.

Potential Penalties and Regulatory Changes

If the FTC finds violations, OpenAI could face substantial consequences, including:

- Fines: Significant financial penalties for violations of consumer protection laws and data privacy regulations.

- Changes to Business Practices: Requirements to revise data handling practices, improve transparency, and enhance safeguards against bias and misinformation.

- Stricter Regulations on Data Handling: Increased regulatory scrutiny of data collection, storage, and use practices.

These potential penalties highlight the increasing legal implications of AI development and underscore the need for compliance with existing and future regulations.

Impact on AI Development and Innovation

The investigation could significantly impact future AI development and innovation:

- Increased Scrutiny of AI Models: A more rigorous review process for AI models before deployment, potentially slowing down innovation.

- Slower Development Cycles: Increased time and resources dedicated to ensuring compliance with regulations, resulting in longer development cycles.

- Higher Development Costs: The increased costs associated with compliance and risk mitigation could impact the financial viability of some AI projects.

The Role of Self-Regulation and Industry Standards

The FTC investigation highlights the importance of proactive self-regulation and the establishment of industry-wide standards for responsible AI development. This includes:

- Development of Ethical Guidelines: Creating clear ethical guidelines for AI development and deployment that prioritize user privacy, data security, and fairness.

- Industry Best Practices: Establishing best practices for data handling, bias mitigation, and transparency in AI systems.

- Independent Audits: Regular independent audits to assess compliance with ethical guidelines and industry standards.

The Future of AI Development: Lessons Learned

The FTC investigation into OpenAI's ChatGPT serves as a crucial lesson for the future of AI development. It emphasizes the need to balance innovation with responsible regulation.

Balancing Innovation and Regulation

Moving forward, a delicate balance must be struck between fostering innovation and implementing effective regulations to ensure responsible AI development. This requires:

- Shared Best Practices: Broad adoption of best practices for data governance, algorithmic transparency, and bias mitigation.

- Frameworks for Ethical AI Development: Development of robust frameworks for ethical AI development and deployment, informed by input from ethicists, policymakers, and industry experts.

- Collaboration Between Industry and Regulators: Strong collaboration between industry stakeholders and regulatory bodies to establish clear guidelines and ensure compliance.

Conclusion: The Path Forward for OpenAI's ChatGPT and the Future of AI

The FTC investigation into OpenAI's ChatGPT underscores the critical need for responsible AI development and the importance of robust regulation. Any penalties or regulatory changes stemming from this investigation could significantly affect OpenAI and the broader AI landscape. The future of AI hinges on a commitment to transparency, accountability, and the proactive mitigation of the risks associated with these powerful technologies. Stay informed about the ongoing developments in this case and take part in the conversation surrounding OpenAI's ChatGPT and the future of responsible AI development. For further information on AI ethics and regulation, explore resources from organizations like [link to relevant resource/organization].

Featured Posts

-

Ftcs Appeal Challenges Court Ruling On Microsofts Activision Acquisition

May 04, 2025

Ftcs Appeal Challenges Court Ruling On Microsofts Activision Acquisition

May 04, 2025 -

Gary Mar Challenges Mark Carney Prioritizing Western Canada For National Success

May 04, 2025

Gary Mar Challenges Mark Carney Prioritizing Western Canada For National Success

May 04, 2025 -

E Bay And Section 230 Legal Ruling On Listings Of Banned Chemicals

May 04, 2025

E Bay And Section 230 Legal Ruling On Listings Of Banned Chemicals

May 04, 2025 -

Prince Harry King Charles Silence Following Security Case

May 04, 2025

Prince Harry King Charles Silence Following Security Case

May 04, 2025 -

A New Era Carney Unveils Ambitious Economic Transformation

May 04, 2025

A New Era Carney Unveils Ambitious Economic Transformation

May 04, 2025

Latest Posts

-

Finding Affordable Lizzo Concert Tickets Your Guide To The In Real Life Tour

May 04, 2025

Finding Affordable Lizzo Concert Tickets Your Guide To The In Real Life Tour

May 04, 2025 -

Lizzo In Real Life Tour Ticket Prices A Comprehensive Guide

May 04, 2025

Lizzo In Real Life Tour Ticket Prices A Comprehensive Guide

May 04, 2025 -

How Much Do Lizzo Concert Tickets Cost A Guide To Her In Real Life Tour Prices

May 04, 2025

How Much Do Lizzo Concert Tickets Cost A Guide To Her In Real Life Tour Prices

May 04, 2025 -

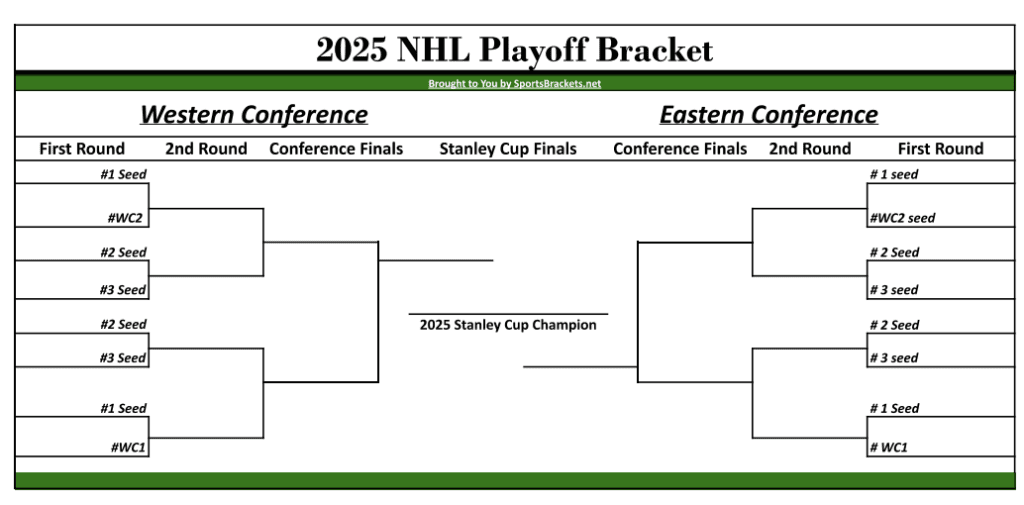

Las Vegas Golden Knights Prime Position For Stanley Cup Success

May 04, 2025

Las Vegas Golden Knights Prime Position For Stanley Cup Success

May 04, 2025 -

Nhl First Round Matchups Predictions And Analysis

May 04, 2025

Nhl First Round Matchups Predictions And Analysis

May 04, 2025