Revolutionizing Voice Assistant Development: OpenAI's 2024 Innovations

Enhanced Natural Language Understanding (NLU) in OpenAI's Voice Assistants

OpenAI's advancements in Natural Language Understanding (NLU) are fundamentally changing how voice assistants interact with users. These improvements are leading to more natural, fluid, and contextually aware conversations.

Contextual Awareness and Memory

OpenAI is significantly improving the ability of its voice assistants to understand context and maintain conversation flow across multiple turns. This means the assistant remembers previous interactions, understands the ongoing conversation's topic, and responds accordingly.

- Improved contextual understanding: OpenAI's models can now keep track of context across longer conversations, which keeps their responses coherent and relevant.

- Handling interruptions: The voice assistant copes with interruptions and picks up the thread of the conversation afterward.

- Remembering previous conversations: Remembering past interactions and preferences lets the assistant tailor its responses to each user.

- Specific OpenAI technologies: This improved contextual awareness is driven by advances in transformer models, whose attention mechanism lets the model weigh every earlier part of the conversation when producing a response, and by longer context windows and memory mechanisms that carry information forward across turns.
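The bookkeeping behind multi-turn context can be pictured with a small sketch. It assumes a chat-style model that receives the conversation as a list of role/content messages. The hypothetical `ConversationMemory` class keeps a system prompt plus the most recent turns that fit a rough word budget, and it drops the oldest turns first. Real systems count model tokens rather than words, and some summarize old turns instead of discarding them. The source does not say which approach OpenAI uses.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ConversationMemory:
    """Hypothetical rolling memory for a multi-turn voice conversation."""

    system_prompt: str
    max_words: int = 200  # rough stand-in for a model's context budget
    turns: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one turn ("user" or "assistant") and enforce the budget."""
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _word_count(self) -> int:
        return sum(len(turn["content"].split()) for turn in self.turns)

    def _trim(self) -> None:
        # Drop the oldest turns until the history fits, but always keep the
        # latest turn so the current request is never lost.
        # The system prompt is not counted against the budget.
        while len(self.turns) > 1 and self._word_count() > self.max_words:
            self.turns.pop(0)

    def messages(self) -> list[dict[str, str]]:
        """Return the system prompt followed by the retained turns."""
        return [{"role": "system", "content": self.system_prompt}, *self.turns]


memory = ConversationMemory("You are a helpful voice assistant.", max_words=12)
memory.add("user", "Set a timer for ten minutes")
memory.add("assistant", "Timer set for ten minutes")
memory.add("user", "Make it fifteen")
for message in memory.messages():
    print(message["role"], "->", message["content"])
```

The follow-up "Make it fifteen" only makes sense because the assistant's previous turn is still in the history. The first user turn was dropped to stay within the 12-word budget.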

Handling Ambiguity and Nuance

OpenAI is tackling the challenge of interpreting complex or ambiguous user requests. This includes better understanding slang, dialects, and different speech patterns.

- Improved slang and dialect understanding: The voice assistant now better recognizes colloquialisms and regional variations in language, leading to more accurate interpretations.

- Resolving uncertainty in user input: When a request is ambiguous, the assistant can ask a clarifying question or offer a few likely options instead of guessing.

- Related OpenAI research: OpenAI's research into robust NLU, including methods for handling noisy and incomplete input, underpins this progress.
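A confidence margin is one common way to decide between guessing and asking. The sketch below assumes that some upstream intent classifier (not shown, and hypothetical) has already scored the possible interpretations of a request. The hypothetical `resolve_intent` function acts only when the top interpretation beats the runner-up by a set margin. Otherwise it turns the two closest candidates into a clarifying question. The 0.15 margin is an arbitrary illustrative value. This is a generic technique, not a description of OpenAI's internals.

```python
from __future__ import annotations


def resolve_intent(scores: dict[str, float], margin: float = 0.15) -> tuple[str, str]:
    """Hypothetical resolver: act only when the top intent clearly wins.

    Returns ("act", intent_name) or ("clarify", question_for_the_user).
    """
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    if not ranked:
        return ("clarify", "Sorry, what would you like me to do?")
    best_name, best_score = ranked[0]
    # A single candidate, or a clear lead over the runner-up, is acted on.
    if len(ranked) == 1 or best_score - ranked[1][1] >= margin:
        return ("act", best_name)
    # Otherwise offer the two closest readings as options.
    options = " or ".join(name.replace("_", " ") for name, _ in ranked[:2])
    return ("clarify", f"Did you mean: {options}?")


print(resolve_intent({"play_music": 0.48, "play_podcast": 0.44, "set_alarm": 0.08}))
print(resolve_intent({"set_alarm": 0.90, "set_timer": 0.10}))
```

In the first call, music and podcast playback are too close to call, so the assistant asks. In the second call, the alarm reading wins clearly and the assistant acts on it.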

Multilingual Support and Translation

OpenAI is breaking down language barriers, making voice assistants more accessible globally. This includes support for a wider range of languages and real-time translation capabilities.

- Extensive language support: OpenAI's voice assistants are expanding their support to include numerous languages and dialects, fostering inclusivity.

- Real-time translation features: The ability to translate conversations on the fly allows for seamless communication across language barriers.

- Impact on global accessibility: This enhanced multilingual support dramatically increases the accessibility of voice assistant technology worldwide.
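Real-time translation is commonly built as a cascade of three stages: speech recognition, then text translation, then speech synthesis. Each stage runs on every short chunk of audio as it arrives, rather than on a finished recording. The sketch below shows only that control flow, and the stage functions are passed in as parameters. In the usage example, the toy stand-ins (decoding bytes as text, a two-word glossary) are placeholders for real recognition, translation, and synthesis models. The source does not describe how OpenAI structures its own pipeline.

```python
from __future__ import annotations

from typing import Callable, Iterable, Iterator


def translate_stream(
    chunks: Iterable[bytes],
    transcribe: Callable[[bytes], str],
    translate: Callable[[str], str],
    synthesize: Callable[[str], bytes],
) -> Iterator[bytes]:
    """Yield translated audio chunk by chunk, so output can start playing
    before the speaker has finished."""
    for chunk in chunks:
        text = transcribe(chunk)
        if not text.strip():
            continue  # nothing recognized (e.g. silence): emit nothing
        yield synthesize(translate(text))


# Toy stand-ins: "audio" is UTF-8 text and "translation" is a glossary lookup.
glossary = {"hola": "hello", "gracias": "thank you"}
translated = list(
    translate_stream(
        [b"hola", b"   ", b"gracias"],
        transcribe=lambda audio: audio.decode("utf-8"),
        translate=lambda text: glossary.get(text.strip().lower(), text),
        synthesize=lambda text: text.encode("utf-8"),
    )
)
print(translated)
```

Pushing each chunk through all three stages keeps latency low. The cost is that fragments are translated without the rest of the sentence for context, so a production system may buffer audio up to phrase boundaries first.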

Improved Speech Synthesis and Personalization in OpenAI's Voice Assistants

OpenAI is revolutionizing speech synthesis, creating more natural, expressive, and personalized voice experiences.

More Natural and Expressive Voice Synthesis

OpenAI is developing synthetic voices that sound far closer to human speech, with natural emotion and intonation.

- Advancements in prosody and intonation: OpenAI's models are generating speech with more natural rhythm, pitch, and stress patterns.

- Emotion expression in synthetic speech: The ability to convey emotions like happiness, sadness, or anger makes the interactions feel more human.

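- Specific OpenAI models: Models like GPT-3 and its successors drive advances in natural language generation, which shape what the assistant says. How it sounds depends on the speech-generation side, handled either by dedicated text-to-speech models or, in newer multimodal models such as GPT-4o, by the model itself.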

Voice Cloning and Customization

Users may soon be able to personalize their voice assistants using their own voices or preferred vocal styles.

- Ethical considerations: OpenAI is actively addressing the ethical concerns around voice cloning, including privacy and potential misuse.

- Addressing technical challenges: OpenAI is working on techniques for high-quality voice cloning with minimal data requirements.

Adaptive Speech Synthesis based on User Preferences

OpenAI is making voice assistants adapt to individual user preferences, creating truly personalized experiences.

- Adaptive speech speed, tone, and style: The voice assistant learns the user's preferred speech patterns and adjusts accordingly.

- User feedback integration: OpenAI utilizes user feedback to continually improve the voice assistant's responsiveness and personalization.
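The source does not say how preference learning works. The following is a minimal sketch that assumes feedback arrives as simple labels such as "too_fast" or "too_slow". The hypothetical `SpeedPreference` class nudges a per-user speaking rate by a fixed step. It then clamps the rate to 0.25 to 4.0, the range OpenAI's text-to-speech API documents for its `speed` parameter. Tone and style could be tracked the same way, with one preference per attribute.

```python
from dataclasses import dataclass

# Bounds mirroring the documented range of the `speed` parameter of
# OpenAI's text-to-speech endpoint (1.0 is normal speed).
MIN_SPEED, MAX_SPEED = 0.25, 4.0


@dataclass
class SpeedPreference:
    """Hypothetical per-user speaking-rate preference learned from feedback."""

    speed: float = 1.0
    step: float = 0.1  # how far one piece of feedback moves the rate

    def update(self, feedback: str) -> float:
        """Apply one feedback label and return the new speaking rate."""
        if feedback == "too_fast":
            self.speed -= self.step
        elif feedback == "too_slow":
            self.speed += self.step
        # Unknown labels leave the rate unchanged; always stay in range and
        # round away floating-point noise such as 0.9000000000000001.
        self.speed = round(min(MAX_SPEED, max(MIN_SPEED, self.speed)), 2)
        return self.speed


pref = SpeedPreference()
for label in ["too_fast", "too_fast", "too_slow"]:
    print(label, "->", pref.update(label))
```

A fixed step keeps the behavior predictable for the user. An exponential moving average over many feedback events would be a natural alternative if feedback is noisy.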

Advanced Integration and Interoperability with OpenAI's Voice Assistants

OpenAI is focusing on seamless integration and interoperability to enhance the overall user experience.

Seamless Integration with Existing Smart Home Ecosystems

OpenAI's advancements are bridging the gap between voice assistants and various smart home platforms.

- Improved device control: Users can control a wider range of smart home devices through voice commands.

- Interoperability across different platforms: OpenAI is facilitating better communication and control across various smart home ecosystems.

- Enhanced user experiences: The integration streamlines interactions, making smart home control more intuitive and efficient.
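Voice-to-device control is often wired up through function calling (also called tool use), which OpenAI's chat APIs support. The application declares the actions it offers, the model answers with the name of an action and its arguments as a JSON string, and the application runs the action itself. The sketch below covers only that last step and assumes the tool call has already been extracted from the model's response. The handler names (`set_light`, `set_thermostat`) are hypothetical placeholders for calls into a real smart home platform's API.

```python
from __future__ import annotations

import json
from typing import Any, Callable


# Hypothetical device handlers; a real integration would call the smart
# home platform's own API here instead of returning a string.
def set_light(room: str, on: bool) -> str:
    return f"{room} light {'on' if on else 'off'}"


def set_thermostat(celsius: float) -> str:
    return f"thermostat set to {celsius:g} degrees Celsius"


# Allow-list: the model can only trigger actions registered here.
HANDLERS: dict[str, Callable[..., str]] = {
    "set_light": set_light,
    "set_thermostat": set_thermostat,
}


def dispatch(name: str, arguments_json: str) -> str:
    """Route a model-proposed tool call (name plus JSON-encoded arguments)
    to a local handler, rejecting unknown actions and malformed arguments."""
    handler = HANDLERS.get(name)
    if handler is None:
        return f"unsupported action: {name}"
    try:
        args: dict[str, Any] = json.loads(arguments_json)
        return handler(**args)
    except (json.JSONDecodeError, TypeError) as exc:
        return f"invalid arguments for {name}: {exc}"


print(dispatch("set_light", '{"room": "kitchen", "on": true}'))
print(dispatch("set_thermostat", '{"celsius": 21.5}'))
print(dispatch("unlock_front_door", "{}"))
```

The explicit allow-list matters for interoperability across platforms. Each platform's specifics live behind its own handler, and nothing the model proposes runs unless a developer registered it.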

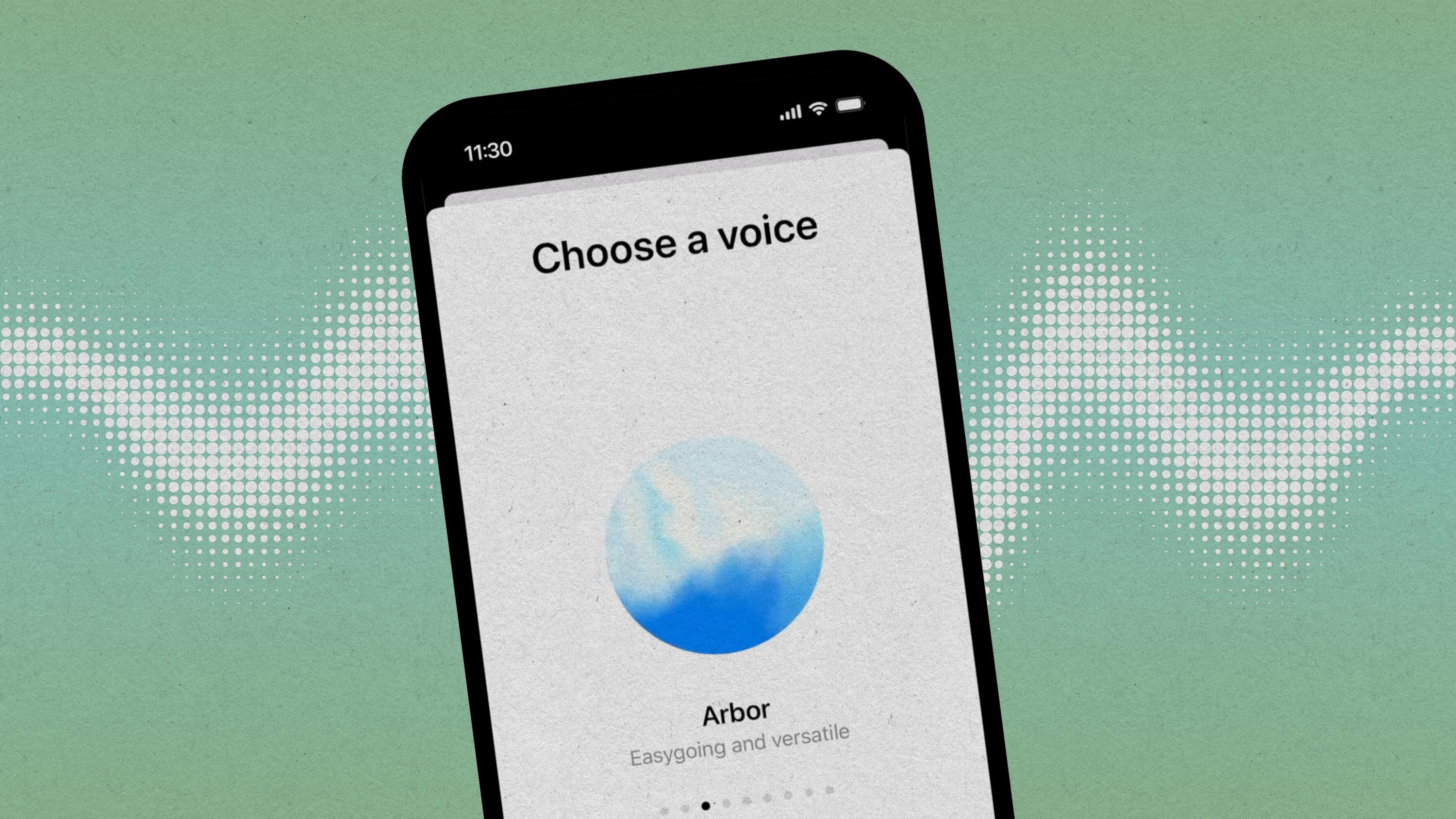

Enhanced API Access and Developer Tools

OpenAI is providing developers with better tools and APIs to integrate voice assistant technology into their applications.

- Improvements in API documentation: Clearer and more comprehensive documentation simplifies the integration process.

- Ease of use and support: OpenAI is offering robust support and readily available resources for developers.

- Support for different programming languages: The APIs are designed to be compatible with a variety of programming languages.
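OpenAI's APIs are plain HTTPS endpoints that take and return JSON or binary data, so any language with an HTTP client can call them. OpenAI's official SDKs wrap the same requests. The sketch below uses only Python's standard library to build a request to the text-to-speech endpoint (`POST https://api.openai.com/v1/audio/speech`). The model name `tts-1` and voice `alloy` were valid at the time of writing, but check the current API reference before relying on them. The helper function name is hypothetical.

```python
import json
import urllib.request

SPEECH_URL = "https://api.openai.com/v1/audio/speech"


def build_speech_request(
    api_key: str, text: str, voice: str = "alloy", model: str = "tts-1"
) -> urllib.request.Request:
    """Build, but do not send, a text-to-speech request."""
    body = json.dumps({"model": model, "voice": voice, "input": text}).encode("utf-8")
    return urllib.request.Request(
        SPEECH_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_speech_request("sk-example", "Your timer is done.")
print(request.get_method(), request.full_url)
# To actually send it (requires a real API key and network access):
# with urllib.request.urlopen(request) as response:
#     audio_bytes = response.read()
```

The same three ingredients (URL, bearer token, JSON body) translate directly to any other language's HTTP client.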

Open Source Contributions and Community Engagement

OpenAI is fostering collaboration by contributing to open-source projects and engaging with the developer community.

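- Specific open-source projects: OpenAI participates in and contributes to several open-source initiatives related to voice technology. The best known is Whisper, its speech-recognition model, whose code and model weights are released under the MIT license.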

- Community forums and developer resources: OpenAI provides platforms for developers to connect, share knowledge, and collaborate.

Conclusion

OpenAI's 2024 innovations in voice assistant development represent a significant leap forward. The advancements in natural language understanding, speech synthesis, and integration capabilities are transforming the way we interact with technology. These innovations hold immense potential for various industries, from customer service to healthcare and beyond. To stay updated on OpenAI's progress in revolutionizing voice assistant technology, follow their blog, attend their webinars, and join their vibrant developer community. The future of voice assistant technology is being shaped by OpenAI's groundbreaking work, and we encourage you to be a part of it.
