The CNIL's Revised AI Guidelines: Key Changes And Practical Implications

Enhanced Focus on Risk Assessment and Mitigation

The revised guidelines place significantly greater emphasis on proactive risk assessment for AI systems. This is not a box-ticking exercise: the CNIL expects a thorough, documented evaluation of the risks associated with each AI deployment, and that documented assessment is key to demonstrating compliance.

- Detailed explanation of different AI risk levels (high, medium, low): The CNIL provides a clearer framework for categorizing AI systems based on their potential impact. High-risk systems, such as those used in criminal justice or healthcare, necessitate significantly more rigorous assessments and mitigation strategies. Medium and low-risk systems require proportionate levels of scrutiny.
- Specific requirements for documenting risk assessments: The documentation process is more formalized, requiring a detailed record of the assessment methodology, identified risks, and proposed mitigation measures. This documentation needs to be readily available for CNIL audits.
- Emphasis on incorporating privacy by design and default principles: Privacy considerations are central to the risk assessment. Organizations must demonstrate that they have integrated privacy protection into the design and implementation of their AI systems from the outset. Data minimization and purpose limitation are crucial aspects of this approach.
- Guidance on data minimization and purpose limitation in the context of AI: The guidelines stress the importance of collecting and processing only the data strictly necessary for the AI system's intended purpose. Overly broad data collection practices are explicitly discouraged.

This rigorous approach to risk assessment calls for a multidisciplinary team of data protection officers, AI developers, and legal experts so that all potential risks are covered. Failing to address these requirements adequately can result in substantial penalties.
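The documentation elements listed above (assessment methodology, identified risks, mitigation measures) lend themselves to a structured record that can be produced on request. The following is a minimal sketch in Python, not a CNIL-mandated schema: the class names, field names, and the rule that the overall level equals the highest individual risk are all assumptions made for illustration.

```python
from dataclasses import dataclass, field, asdict
from enum import Enum
import json


class RiskLevel(str, Enum):
    """Three-level scale mirroring the high/medium/low categories (assumed encoding)."""
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


@dataclass
class Risk:
    description: str
    level: RiskLevel
    mitigation: str = ""  # empty string means no mitigation recorded yet


@dataclass
class RiskAssessment:
    system_name: str
    purpose: str
    methodology: str
    risks: list = field(default_factory=list)

    def unmitigated(self):
        """Return the risks that have no mitigation measure recorded."""
        return [r for r in self.risks if not r.mitigation.strip()]

    def overall_level(self):
        """Assumed rule: the system inherits the highest level among its risks."""
        order = [RiskLevel.LOW, RiskLevel.MEDIUM, RiskLevel.HIGH]
        if not self.risks:
            return RiskLevel.LOW
        return max((r.level for r in self.risks), key=order.index)

    def to_json(self):
        """Serialize the full record so it can be archived or handed to an auditor."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical example system and risks
assessment = RiskAssessment(
    system_name="triage-model",
    purpose="prioritise incoming support requests",
    methodology="cross-functional workshop plus checklist",
)
assessment.risks.append(
    Risk("re-identification from free-text fields", RiskLevel.HIGH,
         "strip free text before training")
)
assessment.risks.append(Risk("stale training data", RiskLevel.MEDIUM))
```

Calling `assessment.unmitigated()` gives the team a working list of open items, and `assessment.to_json()` yields a record that can be versioned alongside the system it describes.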

Strengthened Requirements for Transparency and Explainability

The updated guidelines underscore the need for greater transparency throughout the AI lifecycle. Users must understand how AI systems affect them, and organizations must be accountable for the decisions those systems make.

- Clearer explanations of the logic behind AI decision-making: The CNIL expects organizations to provide clear and understandable explanations of how their AI systems arrive at their conclusions. This is particularly important for decisions that significantly impact individuals' lives.
- Improved methods for informing individuals about the use of AI: Users must be proactively informed about the use of AI systems that affect them. This includes detailing the purpose of the AI, the data used, and the potential impact on their rights.
- Provision of mechanisms for individuals to contest AI-driven decisions: Individuals must have clear and effective channels to challenge AI-driven decisions that they believe are unfair or inaccurate. This often involves robust appeal processes.
- Detailed documentation of AI algorithms and datasets: The CNIL requires detailed documentation of the algorithms and datasets used in AI systems. This documentation facilitates both internal auditing and external scrutiny by the authority.

Achieving this level of transparency demands a proactive approach to communication and documentation, including user-friendly explanations of complex AI processes and clear procedures for handling user complaints.

Increased Accountability for AI Deployments

The revised guidelines significantly increase the accountability of organizations that use AI systems, which means greater responsibility and closer scrutiny.

- Clearer delineation of responsibilities for AI governance: Organizations need clearly defined roles and responsibilities for overseeing AI development and deployment. This often involves designating a specific individual or team to be responsible for AI compliance.
- Increased oversight of AI development and deployment processes: The CNIL will conduct more rigorous audits to ensure compliance with the guidelines. Organizations need to be prepared for increased scrutiny of their AI practices.
- Emphasis on human oversight and intervention in critical situations: The guidelines emphasize the importance of human oversight, particularly in situations where AI systems make high-stakes decisions. This ensures that human judgment can override potentially flawed AI outputs.
- Strengthened mechanisms for addressing complaints and enforcing compliance: The CNIL has strengthened its enforcement mechanisms, leading to potentially higher penalties for non-compliance.

Implementing strong accountability requires a robust governance framework, comprehensive documentation, and a commitment to ongoing compliance monitoring.
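The human-oversight point above can be made concrete as a gate that holds back certain automated outcomes until a person has reviewed them. This is only a sketch under stated assumptions: the `Decision` fields, the `high_stakes` flag, and the 0.9 confidence threshold are hypothetical choices for illustration and do not come from the guidelines.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Decision:
    subject_id: str
    outcome: str        # outcome proposed by the AI system
    confidence: float   # model confidence in [0, 1]
    high_stakes: bool   # set by the organization's own risk classification


def needs_human_review(decision: Decision, min_confidence: float = 0.9) -> bool:
    """Flag a decision for review if it is high-stakes or the model is unsure."""
    return decision.high_stakes or decision.confidence < min_confidence


def finalise(decision: Decision, reviewer_outcome: Optional[str] = None) -> str:
    """Return the outcome to apply; a reviewer's outcome always overrides the model's."""
    if needs_human_review(decision) and reviewer_outcome is None:
        raise ValueError(
            f"decision for {decision.subject_id} requires human review before it is applied"
        )
    return reviewer_outcome if reviewer_outcome is not None else decision.outcome


# Hypothetical decisions
routine = Decision("user-1", "approve", confidence=0.97, high_stakes=False)
critical = Decision("user-2", "reject", confidence=0.99, high_stakes=True)
```

Here `finalise(routine)` passes the model's outcome through, while `finalise(critical)` raises until a reviewer supplies an outcome, for example `finalise(critical, reviewer_outcome="approve")`. Logging each call would also feed the documentation trail described in this section.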

Specific Guidance on Data Protection and AI

The revised guidelines offer detailed guidance on the intersection of AI and data protection, addressing specific concerns within the broader GDPR framework.

- Specific requirements for data security and integrity in AI systems: AI systems must meet stringent security requirements to protect data from unauthorized access, use, or disclosure. Robust security measures are crucial.
- Guidance on the lawful basis for processing data for AI purposes: Organizations must ensure that they have a valid legal basis for processing data used in AI systems, such as consent or legitimate interests.
- Best practices for complying with data subject rights in the context of AI: Individuals' rights, including the right of access, rectification, and erasure, must be respected even when data is processed by AI systems.
- Specific attention given to the use of sensitive data in AI applications: The guidelines place particular emphasis on protecting sensitive data, such as health or biometric data, used in AI applications.

Navigating the intersection of AI and data protection requires a deep understanding of both AI technologies and data protection law. Specialized expertise may be needed to ensure full compliance.
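Data minimization and the special attention given to sensitive data can both be enforced in code at the point where records enter an AI pipeline. The sketch below assumes a hypothetical per-purpose allowlist (`ALLOWED_FIELDS`) and a hypothetical set of sensitive field names (`SENSITIVE_FIELDS`); the actual fields and purposes would come from an organization's own records of processing.

```python
# Hypothetical mapping: declared purpose -> fields strictly necessary for it
ALLOWED_FIELDS = {
    "loan_scoring": {"income", "employment_status", "existing_debt"},
}

# Hypothetical names for fields holding sensitive data (e.g. health, biometrics)
SENSITIVE_FIELDS = {"health_status", "biometric_id"}


def minimise(record: dict, purpose: str) -> dict:
    """Keep only the fields declared as necessary for the given purpose.

    Raises KeyError if the purpose has not been declared, so undeclared
    processing fails loudly instead of silently passing data through.
    """
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def sensitive_fields_present(record: dict) -> set:
    """Return the names of any sensitive fields found in the record."""
    return SENSITIVE_FIELDS.intersection(record)


# Hypothetical raw record
raw = {
    "income": 42000,
    "employment_status": "employed",
    "health_status": "n/a",
    "favourite_colour": "green",
}
clean = minimise(raw, "loan_scoring")
flagged = sensitive_fields_present(raw)
```

In this example `clean` retains only `income` and `employment_status`, and `flagged` contains `health_status`, which signals that the upstream collection step gathered more than the declared purpose needs.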

Conclusion

The revised CNIL AI guidelines represent a significant step towards fostering responsible AI development and deployment in France. Understanding and implementing the updated recommendations on risk assessment, transparency, accountability, and data protection is crucial for organizations operating within the French legal framework, and failure to comply could result in substantial penalties. Staying informed about the guidelines and proactively adapting AI strategies is vital for continued compliance and ethical AI practice.

Featured Posts

-

Planning The Seating For A Papal Funeral Protocol And Practicalities

Apr 30, 2025

Planning The Seating For A Papal Funeral Protocol And Practicalities

Apr 30, 2025 -

Ru Pauls Drag Race Season 17 Episode 6 Guide And Predictions

Apr 30, 2025

Ru Pauls Drag Race Season 17 Episode 6 Guide And Predictions

Apr 30, 2025 -

Ru Pauls Drag Race Season 17 Episode 9 Review The Queens Design Skills

Apr 30, 2025

Ru Pauls Drag Race Season 17 Episode 9 Review The Queens Design Skills

Apr 30, 2025 -

Blue Ivys Eyebrows Tina Knowles Reveals Her Simple Technique

Apr 30, 2025

Blue Ivys Eyebrows Tina Knowles Reveals Her Simple Technique

Apr 30, 2025 -

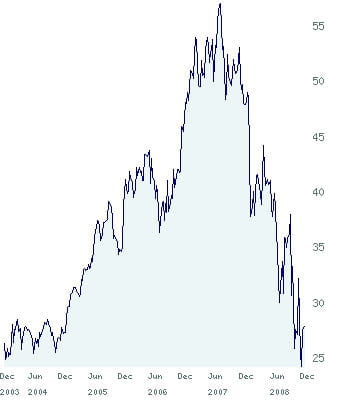

Comprendre Le Document Amf Cp 2025 E1029253 De Remy Cointreau

Apr 30, 2025

Comprendre Le Document Amf Cp 2025 E1029253 De Remy Cointreau

Apr 30, 2025

Latest Posts

-

Channing Tatum 44 And Inka Williams 25 New Romance Confirmed

Apr 30, 2025

Channing Tatum 44 And Inka Williams 25 New Romance Confirmed

Apr 30, 2025 -

Exploring The Zoe Kravitz And Noah Centineo Dating Speculation

Apr 30, 2025

Exploring The Zoe Kravitz And Noah Centineo Dating Speculation

Apr 30, 2025 -

Channing Tatum Dating Australian Model Inka Williams Following Zoe Kravitz Breakup

Apr 30, 2025

Channing Tatum Dating Australian Model Inka Williams Following Zoe Kravitz Breakup

Apr 30, 2025 -

Channing Tatum And Girlfriend Inka Williams Melbourne Trip Ahead Of Potential F1 Appearance

Apr 30, 2025

Channing Tatum And Girlfriend Inka Williams Melbourne Trip Ahead Of Potential F1 Appearance

Apr 30, 2025 -

Channing Tatum And Inka Williams New Romance After Zoe Kravitz Split

Apr 30, 2025

Channing Tatum And Inka Williams New Romance After Zoe Kravitz Split

Apr 30, 2025