The Connection Between Algorithms, Radicalization, And Mass Violence: Who's To Blame?

H2: The Role of Algorithms in Amplifying Extremist Content

Algorithms, the sets of rules that decide which content each user sees online, shape much of our digital experience. That power has been exploited to amplify extremist narratives and accelerate radicalization.

H3: Filter Bubbles and Echo Chambers

Personalized algorithms create "filter bubbles," curating content based on our past online behavior. This can lead to the formation of "echo chambers," where individuals are primarily exposed to information confirming their pre-existing beliefs, even if those beliefs are extremist.

- Algorithmic bias: Algorithms trained on biased data can inadvertently reinforce existing prejudices and promote extremist viewpoints.

- Reinforcement learning: Ranking systems trained to maximize engagement signals such as clicks, shares, and watch time learn to prioritize whatever content earns those signals, which often means amplifying inflammatory and radical material.

- Effect on worldview: Constant exposure to one-sided information within an echo chamber can warp an individual's perception of reality and make them more susceptible to extremist ideologies. Like-minded online communities then reinforce those views further.
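The feedback loop behind a filter bubble can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real platform: the topic names, the starting interest values, the `learning_rate` parameter, and the update rule are all hypothetical. The only idea it demonstrates is that when a feed shows more of what a user already engages with, and engagement in turn raises that topic's weight, a small initial preference grows over time.

```python
def simulate_filter_bubble(initial_interest, rounds=10, learning_rate=0.5):
    """Return the feed share of each topic at the start of every round.

    Hypothetical toy model: the feed allocates space in proportion to the
    current weight of each topic, and the user's engagement with a topic
    raises its weight in proportion to how much of it was shown.
    """
    weights = dict(initial_interest)
    history = []
    for _ in range(rounds):
        total = sum(weights.values())
        feed_share = {topic: w / total for topic, w in weights.items()}
        history.append(feed_share)
        # Topics that already fill more of the feed gain weight faster.
        for topic in weights:
            weights[topic] *= 1 + learning_rate * feed_share[topic]
    return history


# Assumed starting point: a slight extra interest in one topic.
history = simulate_filter_bubble(
    {"sports": 1.0, "cooking": 1.0, "politics": 1.2}, rounds=20
)
first, last = history[0], history[-1]
print(f"politics share: {first['politics']:.2f} -> {last['politics']:.2f}")
```

In this sketch the share of the slightly favored topic rises every round, while the other topics shrink, even though the user never explicitly asked for more of it.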

H3: Recommendation Systems and Content Spread

Recommendation systems, designed to suggest relevant content, can inadvertently become powerful tools for spreading extremist propaganda. By prioritizing engagement, these systems often boost the visibility of controversial and even violent content.

- Viral content: Extremist videos, articles, and memes can go viral, reaching a vast audience far beyond the initial source.

- Prioritizing engagement over safety: The relentless pursuit of user engagement often overrides considerations of safety and well-being, leading to the unchecked spread of harmful content.

- Snowball effect of radicalization: Exposure to extremist content can lead to further engagement with more radical groups and materials. Each step makes the next more likely, creating a self-perpetuating cycle that fuels the spread of harmful ideologies.
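The tension between engagement and safety can be made concrete with a small ranking sketch. Everything here is an assumption for illustration: the `Post` fields, the scores, the example titles, and the `penalty` parameter are hypothetical and do not describe any platform's actual ranking system. The sketch only contrasts a ranker that sorts by predicted engagement alone with one that subtracts a penalty for estimated harm.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    predicted_engagement: float  # hypothetical score in [0, 1]
    harm_risk: float             # hypothetical classifier score in [0, 1]


def rank_by_engagement(posts):
    """Order posts by predicted engagement only, highest first."""
    return sorted(posts, key=lambda p: p.predicted_engagement, reverse=True)


def rank_with_safety_penalty(posts, penalty=1.0):
    """Order posts by engagement minus a weighted harm estimate."""
    return sorted(
        posts,
        key=lambda p: p.predicted_engagement - penalty * p.harm_risk,
        reverse=True,
    )


# Made-up example data.
posts = [
    Post("Local bake sale recap", 0.30, 0.01),
    Post("Inflammatory conspiracy clip", 0.90, 0.80),
    Post("Explainer on election rules", 0.50, 0.02),
]

print([p.title for p in rank_by_engagement(posts)])
print([p.title for p in rank_with_safety_penalty(posts)])
```

With these made-up scores, the inflammatory item tops the engagement-only ranking and drops to last once the harm penalty is applied. How large the penalty should be, and how reliable the harm estimate is, are exactly the design questions the surrounding text raises.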

H2: The Responsibility of Social Media Platforms

Social media platforms, the primary vectors for the spread of online radicalization, bear a significant responsibility for mitigating this threat. However, navigating this complex landscape presents immense challenges.

H3: Profit vs. Safety

The business model of many social media platforms centers on maximizing user engagement, often at the expense of safety and well-being. The financial incentives frequently outweigh the need for robust content moderation.

- Platform inaction: Many platforms have been criticized for their slow response to the spread of extremist content, prioritizing profits over the prevention of harm.

- Difficulty of content moderation at scale: The sheer volume of content uploaded daily makes effective human-led moderation extremely difficult.

- Financial incentives for engagement: Algorithms that prioritize engagement incentivize the creation and spread of sensational and extreme content, even if that content is harmful.

H3: The Limitations of Current Moderation Techniques

Current content moderation techniques, both human-led and AI-powered, face significant limitations. Extremist groups constantly adapt their tactics, creating an "arms race" between platforms and those seeking to spread their message.

- Misidentification: Automated systems frequently misclassify content in both directions, missing harmful material (false negatives) and flagging benign content (false positives).

- The "arms race": Extremist groups are constantly developing new ways to circumvent content moderation efforts, forcing platforms to continuously adapt their strategies.

- Limitations of current technologies: Existing AI-based content moderation tools often struggle to detect nuanced forms of extremist propaganda and hate speech. Closing that gap requires continuous investment in better detection technology.
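The misidentification problem described above is, in part, a threshold trade-off. The following sketch assumes a hypothetical classifier that assigns each item a harm score between 0 and 1; the scores and labels are invented for illustration and are not measurements from any real system. It counts how many benign items get flagged (false positives) and how many harmful items slip through (false negatives) at different thresholds.

```python
def confusion_counts(scored_items, threshold):
    """Return (false_positives, false_negatives) for a flagging threshold.

    scored_items is a list of (score, is_harmful) pairs, where score is a
    hypothetical classifier output and is_harmful is the true label.
    An item is flagged when its score is at or above the threshold.
    """
    false_positives = sum(
        1 for score, harmful in scored_items if score >= threshold and not harmful
    )
    false_negatives = sum(
        1 for score, harmful in scored_items if score < threshold and harmful
    )
    return false_positives, false_negatives


# Invented example data.
items = [
    (0.95, True),   # overtly harmful
    (0.70, True),   # harmful
    (0.40, True),   # harmful but coded, so the classifier scores it low
    (0.10, False),  # benign
    (0.35, False),  # benign
    (0.75, False),  # benign, e.g. a news report quoting extremist language
]

for threshold in (0.3, 0.5, 0.8):
    fp, fn = confusion_counts(items, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

In this toy data, lowering the threshold catches the coded item but flags more benign content, while raising it does the reverse. No single threshold removes both kinds of error.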

H2: The Individual's Agency and Vulnerability

While algorithms and platforms play a significant role, it's crucial to recognize the agency and vulnerabilities of individuals susceptible to online radicalization.

H3: Psychological Factors and Online Grooming

Certain psychological factors can make individuals more vulnerable to online radicalization. Extremist groups often employ sophisticated grooming techniques to exploit these vulnerabilities.

- Psychological vulnerability: Loneliness, social isolation, pre-existing grievances, and a sense of marginalization can make individuals more susceptible to extremist recruitment efforts.

- Online grooming: Extremist groups use online platforms to identify and target vulnerable individuals, building trust and gradually indoctrinating them into their ideology.

- Individual responsibility: While platforms bear responsibility, individuals must also be critically aware of the information they consume online.

H3: The Path to Violence: From Online Engagement to Real-World Action

The transition from passively consuming extremist content online to engaging in real-world violence is a complex process, often involving several stages.

- Online communities: Engagement with online extremist communities provides a sense of belonging and validation, reinforcing radicalized beliefs.

- In-person meetings: Online radicalization can lead to offline connections, which foster a more intense and direct form of indoctrination.

- From virtual to physical violence: The transition from online engagement to violent acts can be gradual, with online communities providing support and encouragement for violent actions.

H2: Conclusion

The connection between algorithms, radicalization, and mass violence is a multifaceted problem that demands a multifaceted solution. Responsibility does not rest with any single entity; it is shared among algorithm developers, social media platforms, policymakers, and individuals. Technology companies should be held to greater transparency and accountability, and users need stronger critical thinking and media literacy skills. Further research into the psychological factors driving radicalization, together with more effective content moderation techniques, is crucial to breaking the cycle and building a safer, more responsible digital environment.

Featured Posts

-

Cell Phone Restrictions In Iowa Schools A Comprehensive Guide

May 30, 2025

Cell Phone Restrictions In Iowa Schools A Comprehensive Guide

May 30, 2025 -

Alcarazs Hard Fought Monte Carlo Masters Championship

May 30, 2025

Alcarazs Hard Fought Monte Carlo Masters Championship

May 30, 2025 -

Swiss Village Buried Glacier Collapse Leaves One Missing

May 30, 2025

Swiss Village Buried Glacier Collapse Leaves One Missing

May 30, 2025 -

Marvel Sinner And The Importance Of Post Credit Scenes

May 30, 2025

Marvel Sinner And The Importance Of Post Credit Scenes

May 30, 2025 -

Heute In Augsburg M Net Firmenlauf Ergebnisse Und Fotos

May 30, 2025

Heute In Augsburg M Net Firmenlauf Ergebnisse Und Fotos

May 30, 2025

Latest Posts

-

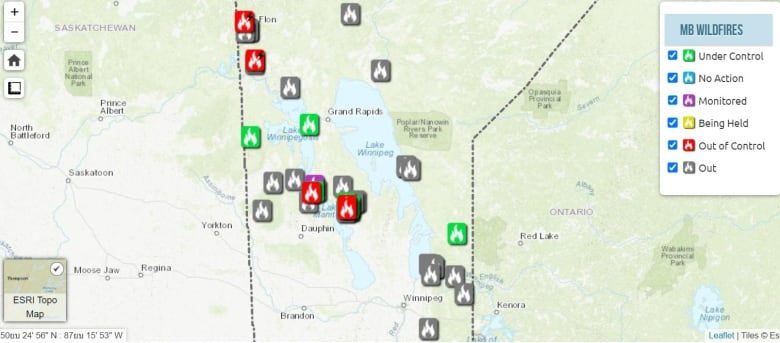

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025

Deadly Wildfires Rage In Eastern Manitoba Update On Contained And Uncontained Fires

May 31, 2025 -

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025

Texas Panhandle Wildfire A Year Of Recovery And Renewal

May 31, 2025 -

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025

Eastern Manitoba Wildfires Ongoing Battle Against Uncontrolled Fires

May 31, 2025 -

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025

Beauty From The Ashes Texas Panhandles Wildfire Recovery One Year Later

May 31, 2025 -

Newfoundland Wildfires Emergency Response To Widespread Destruction

May 31, 2025

Newfoundland Wildfires Emergency Response To Widespread Destruction

May 31, 2025