What AI Can And Cannot Learn: Guiding Principles For Responsible AI

Understanding AI's Learning Process

Most machine learning falls into three broad paradigms: supervised, unsupervised, and reinforcement learning. Each has characteristic strengths and weaknesses that shape what an AI system can and cannot learn.

Supervised Learning

Supervised learning involves training an AI model on a labeled dataset, where each data point is tagged with the correct output. From these examples the model learns a mapping from inputs to outputs, which lets it make accurate predictions on new data that resembles the training data.

- Examples: Image recognition (classifying images as cats or dogs), spam filtering (identifying emails as spam or not spam).

- Limitations: Supervised learning relies heavily on large, high-quality labeled datasets, and its accuracy is limited by how accurate and representative those labels and examples are. Bias in the data leads to biased predictions, and the model may struggle to generalize to inputs that differ significantly from anything it saw during training.
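To make the idea of "learning from labeled examples" concrete, here is a minimal sketch of a nearest-centroid classifier, which is one of the simplest supervised methods. It is not how production spam filters work. The feature choice (link count, ALL-CAPS word count) and the training data are hypothetical and only for illustration. The model averages the feature vectors it sees for each label, and at prediction time it assigns whichever label's average is closest.

```python
from statistics import mean

def train_nearest_centroid(examples):
    """Learn one centroid (mean feature vector) per label from labeled examples."""
    by_label = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is nearest (squared Euclidean distance)."""
    def squared_distance(label):
        return sum((f - c) ** 2 for f, c in zip(features, centroids[label]))
    return min(centroids, key=squared_distance)

# Hypothetical labeled data: (links in email, ALL-CAPS words) -> label
training_data = [
    ((8, 5), "spam"), ((10, 7), "spam"), ((7, 6), "spam"),
    ((1, 0), "not spam"), ((0, 1), "not spam"), ((2, 0), "not spam"),
]
model = train_nearest_centroid(training_data)
print(predict(model, (9, 4)))  # spam
print(predict(model, (1, 1)))  # not spam
```

The model always answers with one of the labels it was trained on. It does this even for an input like `(50, 0)`, which lies far from every training example. That is a small-scale version of the generalization limitation: nothing in the method signals that an input is unlike anything it has seen.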

Unsupervised Learning

Unsupervised learning involves training an AI model on unlabeled data, allowing it to discover patterns and structures without explicit guidance. This is particularly useful for exploring complex datasets and identifying hidden relationships.

- Examples: Customer segmentation (grouping customers based on purchasing behavior), dimensionality reduction (reducing the number of variables while preserving important information).

- Limitations: Interpreting the results of unsupervised learning can be challenging. The model may identify spurious correlations, leading to inaccurate conclusions. It's difficult to evaluate the performance of unsupervised learning models without predefined labels.
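Customer segmentation is often done with clustering. The sketch below is a plain k-means implementation, one common clustering algorithm among many, applied to hypothetical customer data of the form (orders per month, average basket value). No labels are given. The algorithm alternates between assigning each customer to the nearest group center and moving each center to the average of its members.

```python
def kmeans(points, k, iterations=10):
    """Plain k-means: alternately assign each point to its nearest centroid,
    then move each centroid to the mean of the points assigned to it."""
    centroids = list(points[:k])  # naive initialization: the first k points
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centroids[i])),
            )
            clusters[nearest].append(p)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [
            tuple(sum(col) / len(c) for col in zip(*c)) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters

# Hypothetical unlabeled customers: (orders per month, average basket value)
customers = [(1, 20), (12, 80), (2, 25), (11, 90), (1, 30), (13, 85)]
centroids, segments = kmeans(customers, k=2)
for centroid, members in zip(centroids, segments):
    print(centroid, members)
```

The output is just two groups of points. Deciding that one group means "occasional shoppers" and the other "frequent big spenders" is a human interpretation. The number of groups, `k`, was also chosen by us rather than discovered. Both points illustrate why unsupervised results are hard to interpret and evaluate.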

Reinforcement Learning

Reinforcement learning involves training an AI agent to interact with an environment and learn through trial and error. The agent receives rewards for desirable actions and penalties for undesirable ones, learning to maximize its cumulative reward.

- Examples: Robotics (training robots to perform complex tasks), game playing (developing AI agents to play games like chess or Go).

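- Limitations: Reinforcement learning can be computationally expensive and can require significant training time. The agent's behavior can be unpredictable and difficult to control, especially in complex environments: it maximizes the reward as specified, so if the reward signal fails to capture what its designers actually want, the agent may learn unintended strategies.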
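Trial-and-error learning from rewards can be illustrated with tabular Q-learning, one classic reinforcement learning algorithm, in a deliberately tiny setting. The environment here is a hypothetical five-cell corridor in which the only reward is 1.0 for reaching the rightmost cell. The learning rate, discount factor, and exploration rate are illustrative values, not tuned recommendations. The agent is never told to "go right"; it learns that from the rewards.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # move left or move right

def step(state, action):
    """Toy environment: reward 1.0 only when the agent reaches the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def greedy(q, state, rng):
    """Pick the highest-valued action, breaking ties at random."""
    best = max(q[(state, a)] for a in ACTIONS)
    return rng.choice([a for a in ACTIONS if q[(state, a)] == best])

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually exploit current estimates, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = greedy(q, state, rng)
            next_state, reward, done = step(state, action)
            # Move Q(s, a) toward reward + discounted value of the best next action.
            future = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * future - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)  # expected: every non-goal state maps to +1 (move right)
```

Everything the agent learns comes from the `step` function's reward. If that reward were mis-specified, for example by also paying out for standing still, the agent would just as diligently learn the unintended behavior. This is the control problem described above.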

The Limits of Current AI

Despite impressive advancements, current AI systems still face significant limitations that restrict what they can learn and how they can apply that knowledge.

Lack of Common Sense and Reasoning

One major limitation is the lack of common-sense reasoning and contextual understanding. AI systems often struggle with tasks requiring intuitive understanding or reasoning beyond the explicit data they've been trained on.

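- Examples: An AI might process a simple statement like "The cat sat on the mat, but the mat was on the floor." yet fail to draw the obvious inference that the cat was at floor level. More generally, it may struggle with tasks that require unstated background knowledge or multi-step inference.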

- Challenges: Encoding common sense knowledge into AI systems is a significant challenge. It requires representing vast amounts of implicit knowledge in a way that the AI can process and utilize.

Bias and Fairness in AI

AI systems inherit and can amplify biases present in their training data. This can lead to unfair or discriminatory outcomes, especially in sensitive domains such as lending or criminal justice.

- Examples: Facial recognition systems exhibiting higher error rates for certain demographics, loan algorithms discriminating against specific groups.

- Mitigation: Addressing bias requires careful data curation, algorithmic fairness techniques, and ongoing monitoring and evaluation of AI systems' performance across different demographics. Data augmentation can help create more representative datasets.
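Monitoring performance "across different demographics" can start with something as simple as comparing error rates per group. The sketch below does that for a hypothetical set of evaluation results. The group names and labels are invented for illustration. Per-group error rate is only one of several fairness measures, and the right one depends on the application.

```python
from collections import defaultdict

def error_rates_by_group(records):
    """records: iterable of (group, true_label, predicted_label) tuples."""
    totals, errors = defaultdict(int), defaultdict(int)
    for group, y_true, y_pred in records:
        totals[group] += 1
        errors[group] += int(y_true != y_pred)
    return {group: errors[group] / totals[group] for group in totals}

# Hypothetical evaluation results, split by a demographic attribute.
results = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]
rates = error_rates_by_group(results)
gap = max(rates.values()) - min(rates.values())
print(rates)  # {'group_a': 0.0, 'group_b': 0.5}
print(gap)    # 0.5
```

An overall error rate for this data would be 25%, which hides the fact that every error falls on `group_b`. Breaking the metric out by group surfaces this kind of disparity, and rerunning the check on fresh data is what "ongoing monitoring" looks like in practice.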

Explainability and Transparency

Many AI models, particularly deep learning models, are often referred to as "black boxes" because their decision-making processes are opaque and difficult to understand. This lack of transparency raises concerns about accountability and trust.

- Challenges: Understanding how complex AI models arrive at their conclusions is a major hurdle. This opacity makes it difficult to debug errors, identify biases, and ensure fairness.

- Techniques: Explainable AI (XAI) focuses on developing techniques to make AI models more transparent and interpretable. This includes methods like visualizing model decisions, providing feature importance scores, and generating human-readable explanations.
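Feature importance scores can be computed without opening the black box at all. One model-agnostic approach is permutation importance, sketched below. The approach shuffles one input feature at a time and measures how much the model's accuracy drops. The "black box" here is a hypothetical stand-in whose rule we happen to know, so we can check that the scores point at the right feature. The labels are the model's own predictions, so the scores describe what the model relies on rather than what is true about the world.

```python
import random

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(labels)

def permutation_importance(model, rows, labels, n_features, n_repeats=10, seed=0):
    """Importance of feature j = average drop in accuracy after shuffling
    column j, which breaks that feature's link to the labels."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(model, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def black_box(row):
    """Hypothetical opaque model: approves when feature 0 (income) exceeds 50
    and ignores feature 1 entirely."""
    return int(row[0] > 50)

rows = [(30, 1), (80, 0), (45, 1), (90, 1), (20, 0), (70, 0), (55, 1), (40, 0)]
labels = [black_box(r) for r in rows]
importances = permutation_importance(black_box, rows, labels, n_features=2)
print(importances)
```

Feature 1 scores exactly zero, because shuffling an input the model never reads cannot change its output. Feature 0 gets a positive score. Scores like these help with debugging and bias audits, but they summarize the model's behavior rather than fully explaining it.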

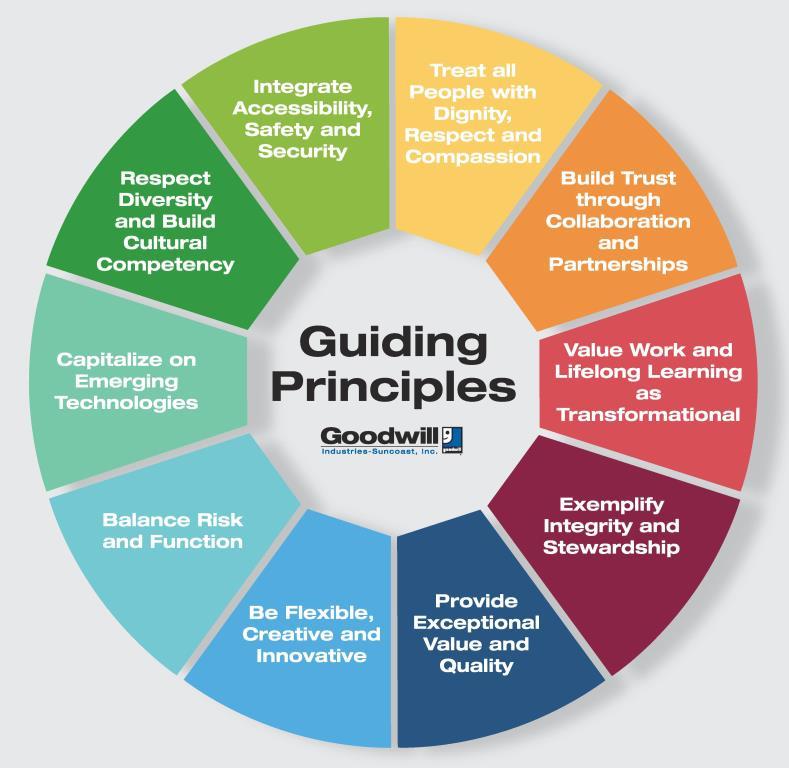

Guiding Principles for Responsible AI Development

Responsible AI development requires a multi-faceted approach that addresses ethics, data governance, and human oversight.

Ethical Considerations

Ethical guidelines are crucial for ensuring that AI systems are developed and deployed responsibly, minimizing potential harm and maximizing benefits to society.

- Examples: Privacy concerns (protecting sensitive user data), security risks (preventing malicious attacks), accountability (establishing responsibility for AI-driven decisions).

- Frameworks: Several frameworks guide responsible AI development, ranging from voluntary guidelines such as the OECD Principles on AI to binding regulation such as the EU's AI Act.

Data Governance and Privacy

Data privacy and security are paramount in responsible AI. AI systems rely on vast amounts of data, raising concerns about data breaches, misuse, and privacy violations.

- Techniques: Data anonymization, differential privacy, and federated learning are some techniques used to protect user privacy while training AI models.

- Regulations: Compliance with data protection regulations like GDPR is essential for responsible AI development.
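Of the privacy techniques listed above, differential privacy is the easiest to show in a few lines. The sketch uses the Laplace mechanism on a counting query. A count changes by at most 1 when one person's record is added or removed, so adding Laplace noise with scale 1/epsilon yields epsilon-differential privacy for that single query. The data, the query, and the epsilon value are hypothetical. This is a teaching sketch: naive floating-point noise sampling like this has known weaknesses, so real deployments should rely on a vetted differential privacy library.

```python
import math
import random

def laplace_noise(scale, rng):
    """Draw from Laplace(0, scale) by inverse-transform sampling."""
    u = rng.random() - 0.5           # uniform on [-0.5, 0.5)
    while u == -0.5:                 # avoid log(0) at the interval's edge
        u = rng.random() - 0.5
    return -scale * math.copysign(1.0, u) * math.log(1 - 2 * abs(u))

def private_count(values, predicate, epsilon, rng):
    """Release a count under epsilon-differential privacy. Adding or removing
    one person changes a count by at most 1 (sensitivity 1), so Laplace noise
    with scale 1/epsilon is enough."""
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = [23, 35, 41, 29, 52, 67, 38, 45]   # hypothetical user records
rng = random.Random(42)
print(private_count(ages, lambda a: a > 40, epsilon=0.5, rng=rng))
```

A smaller epsilon means more noise and stronger privacy, at the cost of accuracy. Any single released answer is deliberately imprecise, yet the noise averages out across many queries, which is why repeated queries consume a privacy budget.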

Human Oversight and Control

Maintaining human control and oversight of AI systems is critical to ensure their responsible use and prevent unintended consequences.

- Mechanisms: Human-in-the-loop AI systems, where humans are involved in the decision-making process, are crucial for maintaining accountability.

- Accountability: Clear lines of responsibility must be established for the actions of AI systems, especially in high-stakes applications.
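One simple human-in-the-loop pattern is a confidence gate. The model acts on its own only when its confidence meets a threshold, every other case is queued for a person, and each outcome is logged with who (or what) made the decision. The class name, threshold, and case IDs below are hypothetical. The pattern also assumes the model's confidence scores are meaningful, which needs to be checked in practice.

```python
from dataclasses import dataclass, field

@dataclass
class ReviewRouter:
    """Hypothetical human-in-the-loop gate: the model acts only when its
    confidence meets the threshold; every other case is queued for a person."""
    confidence_threshold: float = 0.9
    review_queue: list = field(default_factory=list)
    audit_log: list = field(default_factory=list)

    def route(self, case_id, label, confidence):
        if confidence >= self.confidence_threshold:
            # Record that the model, not a person, made this decision.
            self.audit_log.append((case_id, label, "model"))
            return label
        self.review_queue.append((case_id, label, confidence))
        self.audit_log.append((case_id, None, "pending human review"))
        return None

router = ReviewRouter(confidence_threshold=0.9)
router.route("loan-001", "approve", 0.97)  # decided automatically
router.route("loan-002", "deny", 0.62)     # deferred to a human reviewer
print(router.review_queue)  # [('loan-002', 'deny', 0.62)]
```

The audit log is what supports clear lines of responsibility: for every case it records whether the model decided or a person still has to.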

Conclusion

Understanding what AI can and cannot learn is crucial for harnessing its transformative power responsibly. While AI excels at tasks involving pattern recognition, prediction, and automation, it currently lacks common-sense reasoning and full transparency, and it is susceptible to bias. By adhering to responsible AI principles, including ethical considerations, robust data governance, and human oversight, we can mitigate potential risks and help ensure that AI benefits all of humanity. Let's continue the conversation about what AI can and cannot learn and work towards a future where AI enhances our lives in a fair and equitable way.
