Why AI Isn't Truly Learning And How To Use It Responsibly

The Illusion of AI Learning

While AI systems can perform impressive feats, it's crucial to understand that their "learning" differs fundamentally from human learning. AI, particularly machine learning and deep learning, excels at identifying patterns in massive datasets. However, this pattern recognition is purely statistical; AI lacks the genuine understanding and contextual awareness inherent in human learning. This distinction is vital for responsible AI implementation.

Data Dependence: The Achilles' Heel of AI Learning

AI's reliance on training data is one of its most significant limitations. The quality, quantity, and representativeness of this data directly influence the AI's performance, and gaps or skews in the data can introduce significant biases. This is often summed up in the adage "garbage in, garbage out."

- Biased facial recognition systems: Datasets lacking diversity can lead to inaccurate or biased identification of individuals from underrepresented racial or ethnic groups.

- Algorithmic bias in loan applications: Training data reflecting historical societal biases can result in discriminatory loan approvals, perpetuating existing inequalities.

- Medical diagnosis AI: If the training data overrepresents certain patient demographics or health conditions, the AI's diagnostic accuracy might be compromised for others.

This data dependence underscores the need for careful curation and validation of training datasets to minimize bias and ensure fairness in AI applications.
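One simple form of dataset validation is checking how each demographic group is represented before training. The following is a minimal sketch, not a complete audit: the function name `representation_report`, the 10% threshold, and the group labels are all illustrative assumptions.

```python
from collections import Counter

def representation_report(groups, min_share=0.10):
    """Return each group's share of the dataset and flag shares below min_share.

    `min_share` is an arbitrary illustrative threshold, not an accepted standard.
    """
    counts = Counter(groups)
    total = sum(counts.values())
    return {
        group: {
            "share": count / total,
            "underrepresented": count / total < min_share,
        }
        for group, count in counts.items()
    }

# Hypothetical group labels attached to 100 training examples.
sample_groups = ["A"] * 90 + ["B"] * 8 + ["C"] * 2
report = representation_report(sample_groups)

for group, stats in report.items():
    print(group, round(stats["share"], 2), stats["underrepresented"])
```

A check like this only reveals who is missing from the data. It says nothing about whether the labels themselves encode historical bias, which needs separate review.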

Lack of Contextual Understanding: The Common Sense Gap

AI struggles significantly with nuanced situations and lacks the common sense reasoning abilities that humans take for granted. This limitation stems from the statistical nature of its "learning."

- Sarcasm and humor: AI often fails to understand the subtleties of language, misinterpreting sarcasm or humor as literal statements.

- Ambiguous information: AI systems typically struggle with interpreting information that is incomplete, contradictory, or open to multiple interpretations.

- Cultural context: Understanding cultural nuances and social norms requires a level of common sense and contextual awareness that current AI systems lack.

Addressing this gap requires exploring new approaches to AI development that incorporate contextual understanding and common sense reasoning.

Ethical Concerns of Unchecked AI Learning

The potential risks associated with deploying AI systems without carefully considering their limitations are significant. Uncontrolled AI learning can lead to unintended consequences, exacerbating existing societal problems and creating new ethical dilemmas.

Bias Amplification: Perpetuating Inequality

AI systems can inadvertently amplify existing societal biases present in their training data, leading to discriminatory outcomes.

- Hiring algorithms: AI-powered recruitment tools trained on historical data reflecting gender or racial biases can perpetuate these biases in hiring decisions.

- Criminal justice predictions: AI systems used to predict recidivism rates can disproportionately target minority groups if trained on data reflecting biased policing practices.

- Healthcare resource allocation: AI algorithms used to allocate healthcare resources may inadvertently disadvantage marginalized communities if trained on data reflecting healthcare disparities.

Developers have an ethical responsibility to actively mitigate bias in AI systems through careful data selection, bias detection techniques, and fairness-aware algorithm design.
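One commonly used bias detection technique is comparing error rates across groups. For a risk-prediction system, for example, one might compare how often people who did not reoffend were nonetheless flagged as high risk. The sketch below assumes binary labels (1 = flagged or positive outcome) and uses made-up data; the function name is hypothetical.

```python
def false_positive_rate_by_group(y_true, y_pred, groups):
    """Compute, per group, the fraction of true negatives predicted as positive."""
    false_positives = {}
    negatives = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 0:  # only actual negatives can become false positives
            negatives[group] = negatives.get(group, 0) + 1
            false_positives[group] = false_positives.get(group, 0) + (1 if pred == 1 else 0)
    return {g: false_positives[g] / negatives[g] for g in negatives}

# Illustrative data only: 1 = flagged as high risk, 0 = not flagged.
y_true = [0, 0, 0, 1, 0, 0, 0, 1]
y_pred = [1, 1, 0, 1, 0, 0, 1, 1]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

fpr = false_positive_rate_by_group(y_true, y_pred, groups)
print(fpr)
```

A large gap between groups, as in this toy data, would be a signal to investigate the training data and model before deployment, not proof of a specific cause.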

Privacy and Security Risks: Protecting Sensitive Data

AI systems are vulnerable to data breaches and misuse, raising serious privacy and security concerns.

- Data breaches: Large datasets used to train AI systems are attractive targets for cyberattacks, potentially exposing sensitive personal information.

- Surveillance and manipulation: AI-powered surveillance systems raise concerns about potential misuse for mass surveillance and manipulation of individuals.

- Autonomous weapons systems: The development of autonomous weapons raises serious ethical questions about accountability and the potential for unintended harm.

Robust data security and privacy protocols are crucial to mitigating these risks and ensuring responsible AI development.

Promoting Responsible AI Development and Use

Addressing the limitations and ethical concerns surrounding AI requires a concerted effort towards responsible AI development and usage.

Data Diversity and Bias Mitigation: Building Fairer Systems

Creating more diverse and representative datasets is crucial for mitigating bias in AI systems.

- Data augmentation: Generating synthetic or transformed data points can help increase the diversity of training data, particularly for groups that are underrepresented in the original dataset.

- Bias detection algorithms: Algorithms can be used to identify and quantify bias in datasets and AI models.

- Fairness-aware AI development: Designing algorithms that explicitly incorporate fairness constraints can help mitigate bias in AI decision-making.

These methods are vital for ensuring fairness and equity in AI applications.
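As one concrete way to quantify bias in a model's decisions, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of favorable decisions across groups. It is only one of several fairness metrics, and the data and function names here are illustrative assumptions.

```python
def selection_rates(decisions, groups):
    """Return the fraction of favorable decisions (1) received by each group."""
    totals = {}
    positives = {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + (1 if decision else 0)
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group selection rates (0 means parity)."""
    rates = selection_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())

# Illustrative loan decisions: 1 = approved, 0 = denied.
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["x", "x", "x", "x", "y", "y", "y", "y"]

gap = demographic_parity_difference(decisions, groups)
print(gap)
```

A fairness-aware training procedure might treat a metric like this as a constraint to keep below some chosen limit. Which metric and limit are appropriate depends on the application.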

Transparency and Explainability: Understanding AI Decisions

Building explainable AI (XAI) systems that provide insights into their decision-making processes is essential for accountability and trust.

- Model interpretability: Techniques like feature importance analysis can help explain which factors contribute most to an AI's predictions.

- Decision visualization: Visualizing AI decision-making processes can make them more transparent and understandable.

- Audit trails: Maintaining detailed audit trails of AI system decisions can facilitate error detection and accountability.

Transparency fosters trust and enables effective identification and correction of errors or biases.
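Feature importance analysis can be illustrated with permutation importance: shuffle one feature's values and measure how much the model's accuracy drops. The sketch below is a simplified version under stated assumptions: `toy_model` is a hypothetical stand-in for a trained model, and the data is invented so that the first feature matters and the second does not.

```python
import random

def accuracy(model, rows, labels):
    """Fraction of rows for which the model's prediction matches the label."""
    correct = sum(1 for row, label in zip(rows, labels) if model(row) == label)
    return correct / len(rows)

def permutation_importance(model, rows, labels, n_repeats=10, seed=0):
    """Average accuracy drop when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for col in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            shuffled = [row[col] for row in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only this column's value replaced.
            permuted = [row[:col] + [value] + row[col + 1:]
                        for row, value in zip(rows, shuffled)]
            drops.append(baseline - accuracy(model, permuted, labels))
        importances.append(sum(drops) / n_repeats)
    return importances

def toy_model(row):
    """Hypothetical model: uses only the first feature and ignores the second."""
    return 1 if row[0] > 50 else 0

rows = [[20, 3], [35, 7], [48, 1], [55, 9], [70, 4], [90, 6]]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels)
print(importances)
```

Because the toy model ignores the second feature, shuffling it never changes a prediction and its importance is zero. With a real model, a near-zero score suggests the model relies little on that feature.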

Human Oversight and Control: The Importance of Human-in-the-Loop Systems

Maintaining human oversight and control over AI systems is crucial to ensure responsible AI development and usage.

- Human-in-the-loop systems: Incorporating human feedback and intervention into AI systems can help prevent unintended consequences and mitigate biases.

- Continuous monitoring and evaluation: Regularly monitoring and evaluating AI systems for bias, errors, and unintended consequences is essential.

- Ethical guidelines and regulations: Developing and enforcing ethical guidelines and regulations for AI development and deployment is crucial for responsible innovation.

Human oversight is paramount to preventing the misuse of AI and ensuring its benefits are ethically and equitably distributed.
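A human-in-the-loop system can be as simple as routing low-confidence predictions to a person instead of acting on them automatically, while recording every decision for later audit. The sketch below shows one possible shape for this: the `Decision` record, the `route_prediction` function, and the 0.9 threshold are all hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    case_id: str
    label: str
    confidence: float
    route: str  # "auto" or "human_review"

def route_prediction(case_id, label, confidence, threshold=0.9):
    """Send predictions below the confidence threshold to human review."""
    route = "auto" if confidence >= threshold else "human_review"
    return Decision(case_id, label, confidence, route)

# Every decision is appended to a log so it can be audited later.
audit_log = [
    route_prediction("case-1", "approve", 0.97),
    route_prediction("case-2", "deny", 0.62),
]

review_queue = [d for d in audit_log if d.route == "human_review"]
print([d.case_id for d in review_queue])
```

Confidence thresholds are only a partial safeguard, since a model can be confidently wrong. Periodic human review of automatically handled cases remains part of continuous monitoring.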

Conclusion

AI's current capabilities, while impressive, are far from "learning" as we understand it in humans. Reliance on often-biased data, a lack of contextual understanding, and the potential for bias amplification all highlight the need for responsible AI development and deployment. Addressing these concerns requires a multi-faceted approach: fostering data diversity, promoting transparency and explainability, and ensuring robust human oversight. Ignoring these aspects risks perpetuating inequality and creating new ethical dilemmas. To learn more about responsible AI practices, explore resources like [link to relevant resource 1] and [link to relevant resource 2], and advocate for AI that is built and used ethically, responsibly, and for the betterment of humanity.

Featured Posts

-

Duncan Bannatynes Support For Moroccan Childrens Charity

May 31, 2025

Duncan Bannatynes Support For Moroccan Childrens Charity

May 31, 2025 -

2025 Giro D Italia Ita Airways Announced As Official Airline

May 31, 2025

2025 Giro D Italia Ita Airways Announced As Official Airline

May 31, 2025 -

Analysis Glastonbury Tickets Official Resale Sells Out In 30 Minutes

May 31, 2025

Analysis Glastonbury Tickets Official Resale Sells Out In 30 Minutes

May 31, 2025 -

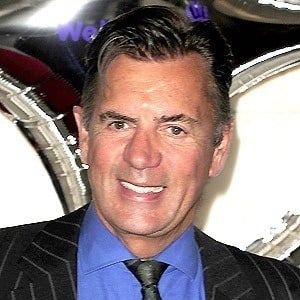

Todays Nyt Mini Crossword May 7 Clues Answers And Solving Strategies

May 31, 2025

Todays Nyt Mini Crossword May 7 Clues Answers And Solving Strategies

May 31, 2025 -

Munguias Revenge Dominant Victory Over Surace In Rematch

May 31, 2025

Munguias Revenge Dominant Victory Over Surace In Rematch

May 31, 2025