2024 OpenAI Developer Event: New Tools For Voice Assistant Creation

Enhanced Natural Language Processing (NLP) Capabilities for Voice Assistants

The core of any successful voice assistant lies in its ability to understand and respond to human language naturally. The 2024 OpenAI Developer Event will highlight significant leaps in NLP capabilities, promising more accurate, nuanced, and human-like interactions.

Improved Speech-to-Text and Text-to-Speech Conversion

- Increased Accuracy: Expect significant improvements in the accuracy of speech-to-text conversion, even in noisy environments or with diverse accents. New OpenAI speech models are expected to be showcased, with claimed performance gains over previous iterations.

- Enhanced Speed: Faster processing times will lead to more responsive and fluid conversations, reducing the delays that frustrate users.

- Multilingual Support: Expanded multilingual support will break down language barriers, making voice assistants accessible to a global audience. Expect improved handling of complex grammatical structures and idiomatic expressions in multiple languages.

- Improved Handling of Nuances: Advancements will allow for better handling of accents, background noise, and the subtle nuances of human speech, leading to more accurate transcriptions and a more natural conversational flow.
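Accuracy claims like these are usually quantified with word error rate (WER): the minimum number of word substitutions, deletions, and insertions needed to turn a model's transcript into a reference transcript, divided by the number of words in the reference. The sketch below computes WER with a standard edit-distance table. It is an illustrative evaluation helper, not part of any OpenAI SDK, and the example sentences are made up.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Return WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # dist[i][j] = edit distance between the first i reference words and first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words

    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    # One substituted word out of five reference words.
    print(f"WER: {word_error_rate('turn on the kitchen lights', 'turn on the chicken lights'):.2f}")  # WER: 0.20
```

Because insertions count as errors, WER can exceed 1.0 when a transcript contains many spurious words.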

Contextual Understanding and Dialogue Management

The 2024 OpenAI event will showcase tools enhancing contextual understanding and dialogue management within voice assistants. This means:

- Maintaining Context Across Conversations: Voice assistants will better remember previous interactions, allowing for more coherent and natural conversations. OpenAI's advancements in long-term memory management for NLP models will be a key focus.

- Understanding User Intent: Improved algorithms will enable voice assistants to accurately decipher user intent, even with ambiguous or complex requests.

- Handling Complex Requests: The ability to handle multi-part instructions and nested requests will significantly improve the functionality and usability of voice assistants. This will involve demonstrating advancements in task decomposition and planning within the OpenAI framework.

- More Natural and Human-Like Interactions: Expect to see demonstrably more natural and fluid conversations, with voice assistants exhibiting a better understanding of conversational flow and turn-taking.
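A common way to keep context across turns is to resend a rolling window of recent messages with every request to the language model. The sketch below keeps a system prompt plus the most recent turns and drops older ones. The class name, the role/content message dictionaries (modeled loosely on chat-style APIs), and the window size are assumptions for illustration; production systems often summarize or retrieve older turns instead of discarding them.

```python
from collections import deque


class ConversationContext:
    """Hypothetical helper: a system prompt plus a bounded window of recent turns."""

    def __init__(self, system_prompt: str, max_turns: int = 4):
        self.system_prompt = system_prompt
        # One turn = one user message + one assistant reply, so keep 2 * max_turns messages.
        # deque(maxlen=...) silently drops the oldest messages once the window is full.
        self.history = deque(maxlen=2 * max_turns)

    def add_exchange(self, user_text: str, assistant_text: str) -> None:
        self.history.append({"role": "user", "content": user_text})
        self.history.append({"role": "assistant", "content": assistant_text})

    def build_messages(self, new_user_text: str) -> list[dict]:
        """Messages for the next request: system prompt, recent history, then the new input."""
        return (
            [{"role": "system", "content": self.system_prompt}]
            + list(self.history)
            + [{"role": "user", "content": new_user_text}]
        )


if __name__ == "__main__":
    ctx = ConversationContext("You are a helpful home assistant.", max_turns=2)
    ctx.add_exchange("What's the weather like?", "It looks sunny.")
    ctx.add_exchange("And tomorrow?", "Tomorrow looks rainy.")
    ctx.add_exchange("Set a reminder for 8am.", "Reminder set for 8am.")
    messages = ctx.build_messages("What did I just ask you to do?")
    print(len(messages))  # 6: the first exchange has been dropped from the window
```

A fixed window bounds request size and cost, at the price of forgetting anything older than the window.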

Sentiment Analysis and Emotional Intelligence in Voice Interactions

This is where voice assistants move beyond simple task completion. New tools at the 2024 OpenAI Developer Event will focus on:

- Detecting User Emotions: Advancements in sentiment analysis will allow voice assistants to detect the emotional tone of user requests, identifying frustration, excitement, or other emotional states.

- Adapting Responses Based on Emotion: Voice assistants will be able to tailor their responses to match the user's emotional state, providing a more empathetic and personalized experience. This could involve adjusting tone, speed, or the level of detail in responses.

- Creating More Personalized and Empathetic Experiences: By understanding and responding to user emotions, voice assistants can create more engaging and satisfying interactions, fostering a stronger connection between user and technology.
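Adapting a reply to the user's emotional state can be thought of as two steps: classify the emotion, then map it to response parameters such as tone, speaking rate, and level of detail. The sketch below uses a deliberately simple keyword lexicon so it runs without any model. The word lists, emotion labels, and style values are hypothetical; a real system would rely on a trained classifier, possibly using acoustic cues as well as the transcript.

```python
from dataclasses import dataclass

# Hypothetical keyword lexicons standing in for a trained emotion classifier.
FRUSTRATION_WORDS = {"again", "still", "wrong", "useless", "annoying", "broken"}
EXCITEMENT_WORDS = {"awesome", "great", "amazing", "love", "yes"}


@dataclass
class ResponseStyle:
    tone: str
    speaking_rate: float  # hypothetical multiplier on the default text-to-speech rate
    detail: str


def detect_emotion(transcript: str) -> str:
    """Label a transcript 'frustrated', 'excited', or 'neutral' by counting lexicon hits."""
    words = {w.strip(".,!?").lower() for w in transcript.split()}
    frustration = len(words & FRUSTRATION_WORDS)
    excitement = len(words & EXCITEMENT_WORDS)
    if frustration > excitement:
        return "frustrated"
    if excitement > frustration:
        return "excited"
    return "neutral"


def choose_style(emotion: str) -> ResponseStyle:
    """Slow down and keep answers short for frustrated users; match energy for excited ones."""
    styles = {
        "frustrated": ResponseStyle(tone="calm, apologetic", speaking_rate=0.9, detail="brief"),
        "excited": ResponseStyle(tone="upbeat", speaking_rate=1.1, detail="normal"),
        "neutral": ResponseStyle(tone="friendly", speaking_rate=1.0, detail="normal"),
    }
    return styles[emotion]


if __name__ == "__main__":
    emotion = detect_emotion("The lights are still not working, this is useless!")
    print(emotion)                     # frustrated
    print(choose_style(emotion).tone)  # calm, apologetic
```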

New APIs and SDKs for Seamless Voice Assistant Integration

The 2024 OpenAI Developer Event will unveil new APIs and SDKs designed to simplify the process of integrating voice assistants into various applications and platforms.

Simplified Development Process and Reduced Complexity

- Streamlined Integration: Expect to see significantly simplified integration processes, reducing development time and costs. OpenAI might introduce drag-and-drop interfaces or pre-built modules for common functionalities.

- Reduced Code Complexity: New APIs will abstract away much of the underlying complexity, allowing developers to focus on the unique aspects of their applications.

Cross-Platform Compatibility and Scalability

- Seamless Integration Across Platforms: The new tools will ensure easy integration across iOS, Android, and web platforms, maximizing reach and accessibility.

- Scalability for Large User Bases: OpenAI's new infrastructure will support the scalability needed to handle a large and growing number of concurrent users.

Enhanced Security and Privacy Features for Voice Data

Data security and privacy are paramount. OpenAI will emphasize:

- Robust Encryption Methods: Expect strong encryption protocols to protect voice data throughout the entire lifecycle.

- Data Anonymization Techniques: OpenAI will highlight anonymization techniques to protect user privacy while still allowing for data-driven improvements to the system.

- Compliance with Data Privacy Regulations: The new tools will be designed to comply with relevant data privacy regulations like GDPR and CCPA.
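Anonymization is often built from two simple pieces: keyed pseudonymization of user identifiers, so records can still be linked for analysis without exposing the raw ID, and redaction of obvious identifiers in transcripts. The sketch below shows both using only the Python standard library. The function names and regular expressions are hypothetical and far from complete PII coverage, and the sketch does not address encryption. Note also that pseudonymized data generally still counts as personal data under GDPR.

```python
import hashlib
import hmac
import re

# Hypothetical patterns for illustration; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def pseudonymize_user_id(user_id: str, secret_key: bytes) -> str:
    """Map a user ID to a stable token that cannot be recomputed without the secret key."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def redact_transcript(text: str) -> str:
    """Replace e-mail addresses and phone-number-like digit runs with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


if __name__ == "__main__":
    key = b"example-secret-key"  # hypothetical; keep real keys in a secrets manager
    print(pseudonymize_user_id("user-42", key)[:16])
    print(redact_transcript("Email me at jane.doe@example.com or call +1 555-123-4567."))
    # Email me at [EMAIL] or call [PHONE].
```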

Advanced Customization Options for Personalized Voice Assistant Experiences

The 2024 OpenAI Developer Event will showcase tools to create truly personalized voice assistant experiences.

Creating Unique Voice Personalities and Brands

- Custom Voice Models: Developers will be able to create custom voice models, allowing them to precisely define the voice, tone, and personality of their voice assistants.

- Pre-trained Voice Libraries: Access to a library of pre-trained voices will offer a range of options for quickly prototyping and deploying voice assistants.

Integrating with External Services and Data Sources

- Seamless Integration with APIs: Expect easy integration with popular APIs and data platforms, expanding the functionality of voice assistants.

- Connecting to Smart Home Devices: Developers can easily integrate voice assistants with smart home devices, creating a cohesive and automated home experience.
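Integrations like these are commonly structured as a registry of handlers: the language model turns a spoken request into a structured intent (a name plus arguments), and application code routes that intent to the matching service. The sketch below illustrates the pattern with an in-memory stand-in for device state. The intent names, handlers, and intent format are hypothetical; no real smart-home or OpenAI API is called.

```python
from typing import Callable

# Registry mapping intent names to handler functions (all names here are hypothetical).
HANDLERS: dict[str, Callable[..., str]] = {}


def register(intent: str):
    """Decorator that registers a handler for a named intent."""
    def decorator(func: Callable[..., str]) -> Callable[..., str]:
        HANDLERS[intent] = func
        return func
    return decorator


# In-memory stand-in; a real integration would call a smart-home platform's API.
DEVICE_STATE = {"living_room_light": "off", "thermostat_c": 20}


@register("set_light")
def set_light(room: str, state: str) -> str:
    DEVICE_STATE[f"{room}_light"] = state
    return f"Turned the {room.replace('_', ' ')} light {state}."


@register("set_temperature")
def set_temperature(celsius: int) -> str:
    DEVICE_STATE["thermostat_c"] = celsius
    return f"Thermostat set to {celsius} degrees."


def dispatch(intent: dict) -> str:
    """Route a structured intent (e.g. extracted by a language model) to its handler."""
    handler = HANDLERS.get(intent["name"])
    if handler is None:
        return "Sorry, I can't do that yet."
    return handler(**intent.get("arguments", {}))


if __name__ == "__main__":
    print(dispatch({"name": "set_light", "arguments": {"room": "living_room", "state": "on"}}))
    # Turned the living room light on.
```

Keeping the handlers in application code, rather than letting the model act on devices directly, gives developers one place to validate arguments and enforce permissions.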

Leveraging User Data for Continuous Improvement and Personalization

- Ethical Data Usage: OpenAI will stress ethical data usage, ensuring user privacy and consent are prioritized.

- Data Anonymization and Aggregation: Techniques for anonymizing and aggregating user data will be used for model training, preserving user privacy while improving performance.
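Aggregation can be paired with a minimum group size so that no reported figure describes just a handful of users, an idea borrowed from k-anonymity. The sketch below counts usage events per (region, intent) pair and suppresses any group backed by fewer than k distinct users. The field names, grouping, and threshold are hypothetical, and this is not a description of how OpenAI prepares training data.

```python
from collections import defaultdict


def aggregate_with_threshold(events: list[dict], k: int = 5) -> dict[tuple[str, str], int]:
    """Count events per (region, intent), keeping only groups with at least k distinct users."""
    users_per_group: dict[tuple[str, str], set[str]] = defaultdict(set)
    counts: dict[tuple[str, str], int] = defaultdict(int)
    for event in events:
        key = (event["region"], event["intent"])
        users_per_group[key].add(event["user_token"])
        counts[key] += 1
    # Suppress small groups so no reported count can be traced back to a few individuals.
    return {key: counts[key] for key in counts if len(users_per_group[key]) >= k}


if __name__ == "__main__":
    events = [{"region": "EU", "intent": "weather", "user_token": f"u{i}"} for i in range(5)]
    events += [{"region": "US", "intent": "set_timer", "user_token": f"u{i}"} for i in range(2)]
    print(aggregate_with_threshold(events, k=5))  # {('EU', 'weather'): 5}
```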

Revolutionizing Voice Assistant Development at the 2024 OpenAI Developer Event

The 2024 OpenAI Developer Event: New Tools for Voice Assistant Creation promises to be a landmark event, showcasing significant advancements in natural language processing, API development, and personalization. These new tools will empower developers to create more accurate, intuitive, and personalized voice assistants, transforming how we interact with technology. Don't miss out on the opportunity to shape the future of voice technology! Register for the 2024 OpenAI Developer Event today and be among the first to experience these groundbreaking tools for voice assistant creation! [Insert Link to Registration Here]

Featured Posts

-

Tracking Global Commodity Markets 5 Key Charts To Monitor This Week

May 06, 2025

Tracking Global Commodity Markets 5 Key Charts To Monitor This Week

May 06, 2025 -

Is Betting On Wildfires The New Normal The La Case

May 06, 2025

Is Betting On Wildfires The New Normal The La Case

May 06, 2025 -

Ugoda Mizh Nitro Chem Ta S Sh A 310 Mln Investitsiy U Polschu

May 06, 2025

Ugoda Mizh Nitro Chem Ta S Sh A 310 Mln Investitsiy U Polschu

May 06, 2025 -

How To Watch March Madness Online Stream Every Game Without Cable

May 06, 2025

How To Watch March Madness Online Stream Every Game Without Cable

May 06, 2025 -

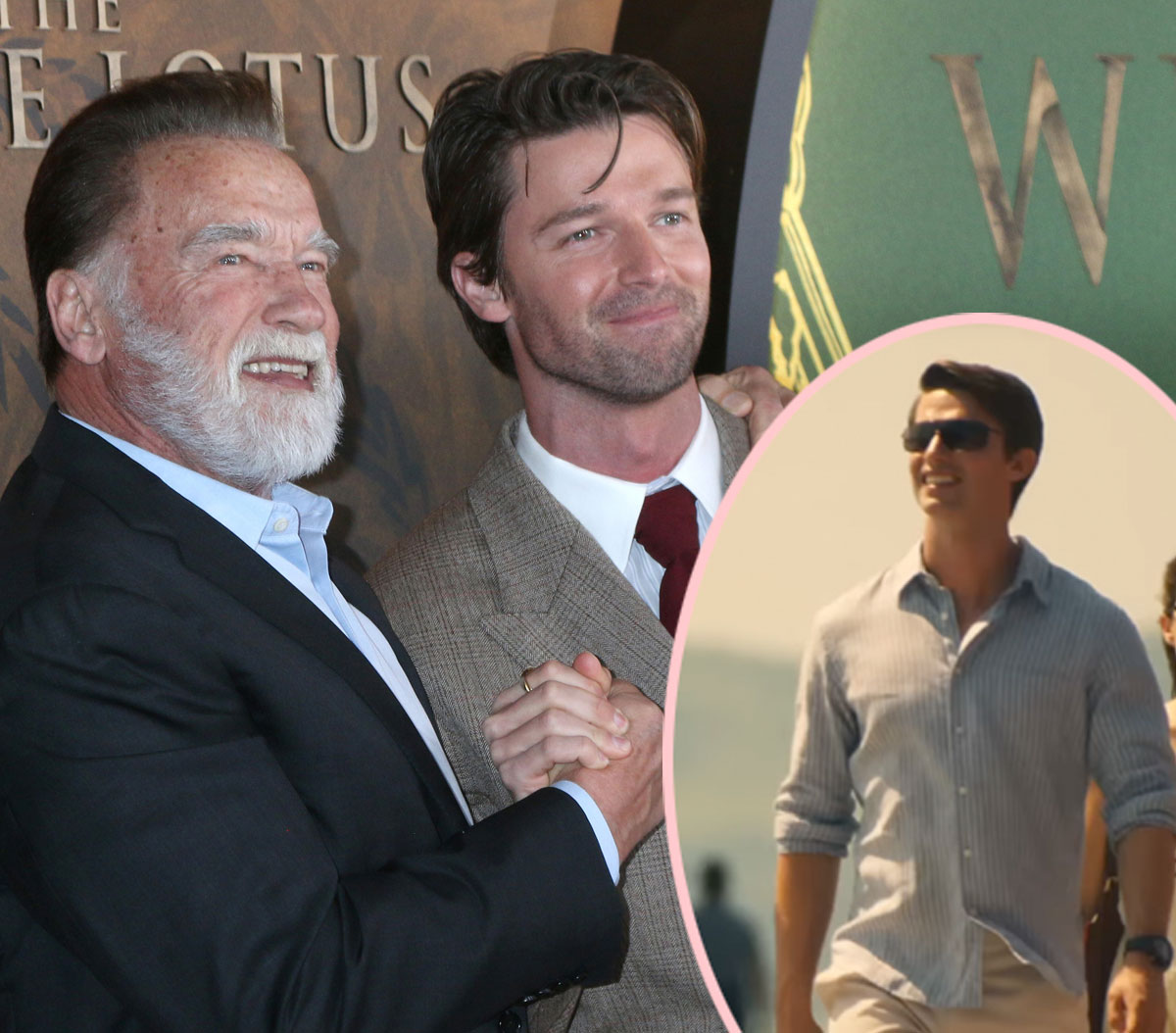

Patrick Schwarzenegger On White Lotus Addressing Nepotism Claims

May 06, 2025

Patrick Schwarzenegger On White Lotus Addressing Nepotism Claims

May 06, 2025

Latest Posts

-

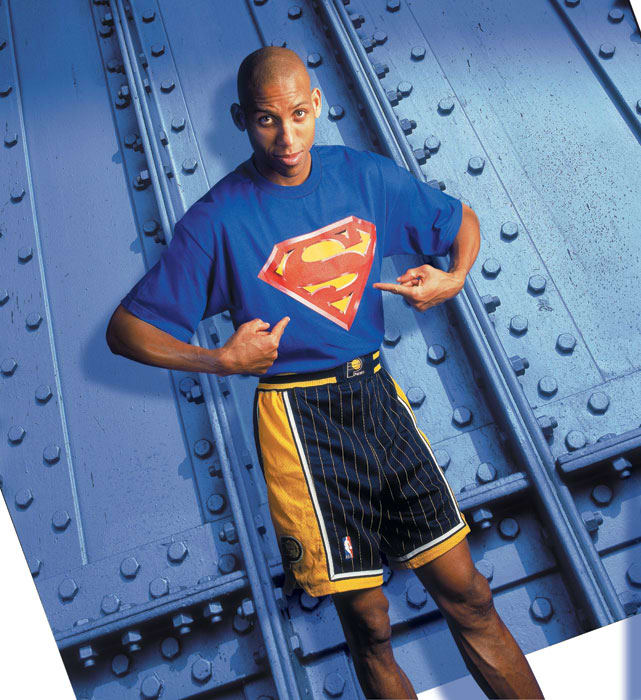

Nba Broadcasting Changes Reggie Millers Next Chapter With Nbc

May 06, 2025

Nba Broadcasting Changes Reggie Millers Next Chapter With Nbc

May 06, 2025 -

Reggie Miller From Tnt To Nbc Analyzing The Nba Broadcasting Shift

May 06, 2025

Reggie Miller From Tnt To Nbc Analyzing The Nba Broadcasting Shift

May 06, 2025 -

Is Reggie Miller Nbcs New Lead Nba Analyst The Latest News

May 06, 2025

Is Reggie Miller Nbcs New Lead Nba Analyst The Latest News

May 06, 2025 -

Nba Broadcast Shakeup Reggie Millers New Role With Nbc Sports

May 06, 2025

Nba Broadcast Shakeup Reggie Millers New Role With Nbc Sports

May 06, 2025 -

Nba Analyst Shuffle Reggie Millers Move To Nbc And What It Means

May 06, 2025

Nba Analyst Shuffle Reggie Millers Move To Nbc And What It Means

May 06, 2025