AI Therapy: Potential For Surveillance And Abuse In Authoritarian Regimes

Data Collection and Surveillance in AI Therapy

AI therapy platforms collect vast amounts of personal data, creating a rich store of information that authoritarian governments can exploit. This data goes well beyond the scope of traditional therapy notes, which raises distinct privacy and security concerns.

The Nature of AI Therapy Data

AI therapy applications gather extensive personal data, including sensitive information about mental health, relationships, political views, and even biometric data. This data is highly valuable for surveillance and profiling.

- Examples: User text entries detailing personal struggles and opinions, voice recordings capturing emotional nuances, biometric data (e.g., heart rate variability during sessions) reflecting emotional states, and location data associated with therapy sessions.

- Vulnerability: Because this data is so detailed and intimate, it can readily be assembled into comprehensive profiles of individuals, enabling targeted surveillance and manipulation. For an authoritarian regime, this depth of psychological insight is a powerful tool.

Lack of Data Protection in Authoritarian States

Weak or non-existent data protection laws in many authoritarian states leave AI therapy data highly vulnerable to government access and misuse, posing a significant threat to individual privacy and freedom.

- Examples: Government-mandated access to data without warrants or judicial oversight, lack of transparency regarding data usage, and the absence of independent oversight bodies to protect citizens' rights.

- Consequences: Without such regulation, authorities can build extensive citizen surveillance databases and use them to monitor and control individuals based on their mental health and personal beliefs. The potential for abuse is immense.

Manipulation and Control through AI Therapy

The algorithms underpinning AI therapy platforms can be repurposed to serve authoritarian agendas, chilling free expression and dissent.

Targeted Propaganda and Disinformation

AI algorithms can identify individuals who hold particular political or social views and then target them with tailored messaging inside the therapy platform itself. Because it arrives in the guise of therapeutic support, this is an especially insidious form of propaganda.

- Examples: Subtle shifts in conversational prompts to reinforce desired beliefs, tailored "recommendations" promoting pro-regime narratives, and the selective presentation of information to influence user perspectives.

- Consequences: Erosion of individual autonomy and freedom of thought, along with a climate of fear and self-censorship that can normalize oppressive ideologies.

Identification and Targeting of Dissidents

AI therapy data can be used to identify individuals expressing dissenting opinions or exhibiting signs of political opposition. This allows for the preemptive targeting of potential threats to the regime.

- Examples: Sentiment analysis of user text to flag potentially problematic views, identification of individuals expressing dissatisfaction with the government, and the flagging of users exhibiting symptoms of anxiety or depression linked to political unrest.

- Consequences: Increased risk of harassment, arrest, and imprisonment for political dissidents, which in turn discourages others from voicing dissent at all.

Erosion of Trust and Confidentiality in Mental Healthcare

The potential for surveillance and manipulation significantly undermines the fundamental principles of mental healthcare: trust and confidentiality.

The Chilling Effect of Surveillance on Seeking Help

The knowledge that AI therapy sessions might be monitored can deter individuals from seeking the mental health support they need, exacerbating existing issues and widening the gap in access to care.

- Examples: Individuals may avoid disclosing sensitive information for fear of repercussions, leading to incomplete or inaccurate diagnoses and ineffective treatment.

- Consequences: Increased stigmatization of mental health issues, decreased access to care, and a reluctance to seek help, ultimately harming individuals and society.

Lack of Therapeutic Neutrality

The potential for manipulation also compromises therapeutic neutrality. Whether the therapist is an AI or a human guided by an algorithm, there is no guarantee that the guidance offered is neutral or unbiased.

- Examples: AI algorithms programmed with biases, human therapists pressured to align with government agendas, and therapeutic interventions manipulated to reinforce desired beliefs.

- Consequences: Damaged therapeutic alliance, ineffective treatment, and a further erosion of trust in mental health professionals.

Conclusion

The deployment of AI therapy in authoritarian regimes presents significant risks: surveillance, manipulation, and the erosion of trust in mental health services. The absence of robust data protection laws and ethical guidelines creates fertile ground for abuse. We must advocate for stricter regulations and ethical frameworks that prevent the misuse of AI therapy and protect vulnerable populations, and further research is needed to understand its implications and ensure its responsible development and deployment. Above all, human rights and individual freedoms must take priority in how this powerful technology is built and applied.

Featured Posts

-

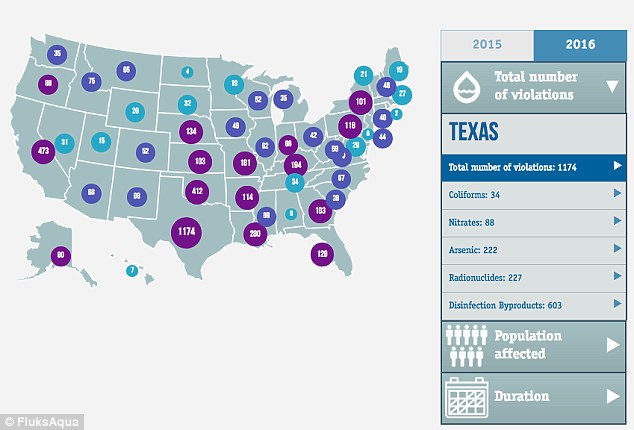

Contaminated Drinking Water Report Reveals Millions Affected In The Us

May 15, 2025

Contaminated Drinking Water Report Reveals Millions Affected In The Us

May 15, 2025 -

Star Wars Andor Planned Book Project Abandoned Amidst Ai Worries

May 15, 2025

Star Wars Andor Planned Book Project Abandoned Amidst Ai Worries

May 15, 2025 -

Hamer Bruins En Npo Toezichthouder Moeten Over Leeflang Praten

May 15, 2025

Hamer Bruins En Npo Toezichthouder Moeten Over Leeflang Praten

May 15, 2025 -

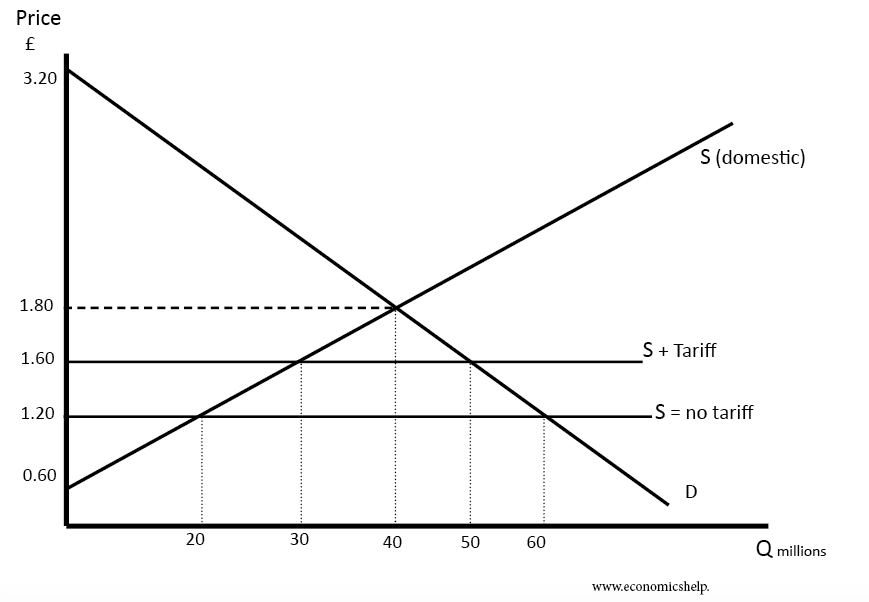

Indias Economy The Ripple Effects Of Reciprocal Tariffs

May 15, 2025

Indias Economy The Ripple Effects Of Reciprocal Tariffs

May 15, 2025 -

10 Run Inning Doesnt Dim Padres Bullpens Positive Outlook Tom Krasovic San Diego Union Tribune

May 15, 2025

10 Run Inning Doesnt Dim Padres Bullpens Positive Outlook Tom Krasovic San Diego Union Tribune

May 15, 2025