Building Voice Assistants Made Easy: OpenAI's Latest Development Tools


OpenAI's Powerful APIs for Voice Assistant Development

OpenAI offers a set of APIs that cover the core building blocks of a voice assistant. They handle the heavy lifting of speech processing and natural language understanding, so developers can focus on the higher-level aspects of their applications. Key components include speech-to-text and text-to-speech, which together remove much of the complexity of building a functional voice interface.

- Whisper API for accurate speech-to-text: OpenAI's Whisper model, offered through the API's audio transcription endpoint, converts spoken audio into text. It is multilingual and was trained to be robust to diverse accents and background noise. That removes a significant hurdle in voice assistant development: your assistant can understand a wider range of users and speaking conditions.

- Natural-sounding text-to-speech: Converting replies back into speech is crucial for a natural, engaging user experience. OpenAI's text-to-speech endpoint produces high-quality, natural-sounding audio and offers several built-in voices, so you can choose one that fits your brand or your application's personality.

- Easy API integration: OpenAI's APIs are HTTP endpoints with official SDKs (including one for Python), so they slot into existing applications or serve as the foundation for new projects. Developers can use them without building complex speech-processing pipelines from scratch, which can substantially reduce development time and effort.

Example: with the official `openai` Python package, transcription is a single API call:

```python
from pathlib import Path
from openai import OpenAI  # official SDK, v1.x; reads OPENAI_API_KEY from the environment
response = OpenAI().audio.transcriptions.create(model="whisper-1", file=Path("audio.wav"))
```

This one call handles the entire speech-to-text step and returns the transcription in `response.text`, ready for further processing. (Note: exact code varies with the SDK version and implementation.)
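Chaining the three building blocks above gives one conversational turn: transcribe the user's audio, generate a reply, and speak it. The sketch below assumes the v1.x `openai` Python SDK; the names `VoiceTurn`, `run_turn` and `openai_backends`, the system prompt, and the choice of `gpt-4o-mini`, `tts-1` and the `alloy` voice are illustrative assumptions rather than recommendations. Each stage is passed in as a plain function, so the pipeline can also run offline with stubs.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable


@dataclass
class VoiceTurn:
    """Hypothetical helper: one user utterance in, one spoken reply out."""
    transcribe: Callable[[Path], str]   # speech-to-text stage
    respond: Callable[[str], str]       # language-model stage
    synthesize: Callable[[str], bytes]  # text-to-speech stage

    def run_turn(self, audio_path: Path) -> tuple[str, str, bytes]:
        """Run all three stages and return (user text, reply text, reply audio)."""
        user_text = self.transcribe(audio_path)
        reply_text = self.respond(user_text)
        return user_text, reply_text, self.synthesize(reply_text)


def openai_backends(model: str = "gpt-4o-mini") -> VoiceTurn:
    """Wire the stages to OpenAI's API (needs `pip install openai` and OPENAI_API_KEY)."""
    from openai import OpenAI  # imported lazily so stubbed pipelines run without the SDK

    client = OpenAI()

    def transcribe(path: Path) -> str:
        # Whisper transcription; the SDK accepts a path-like object for file uploads.
        return client.audio.transcriptions.create(model="whisper-1", file=path).text

    def respond(text: str) -> str:
        completion = client.chat.completions.create(
            model=model,
            messages=[
                {"role": "system", "content": "You are a concise voice assistant."},
                {"role": "user", "content": text},
            ],
        )
        return completion.choices[0].message.content or ""

    def synthesize(text: str) -> bytes:
        # Returns the encoded audio bytes (MP3 by default).
        return client.audio.speech.create(model="tts-1", voice="alloy", input=text).content

    return VoiceTurn(transcribe, respond, synthesize)
```

For example, `VoiceTurn(lambda p: "hello", str.upper, str.encode).run_turn(Path("hi.wav"))` returns `("hello", "HELLO", b"HELLO")` without touching the network; swapping in `openai_backends()` sends the same turn through the real API. Keeping the stages as plain functions also makes it easy to replace one stage (say, a different TTS voice) without touching the others.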

Streamlining the Development Process with Pre-trained Models

OpenAI's pre-trained models change the economics of voice assistant development. Because they have already been trained on massive datasets, you rarely need to train a model from scratch, which means faster development cycles and lower resource requirements.

- Pre-trained models for core tasks: OpenAI's general-purpose language models can be prompted to perform critical voice assistant functions, including intent recognition (understanding what the user wants) and dialogue management (handling the conversational flow). Used this way, they give you a solid foundation without training a separate model for each task.

- Reduced development time and resources: Building on pre-trained models lets developers skip the time-consuming, computationally expensive process of training from scratch. This accelerates development and lowers the barrier to entry for sophisticated voice assistants.

- Fine-tuning for customization: Several OpenAI models can be fine-tuned on your own examples to improve performance for a specific use case, so the assistant better understands the language and requests typical of your users.

- Personalized voice assistants: Fine-tuning helps you build assistants tailored to your users' preferences and behavior, which makes the experience more engaging and effective.
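OpenAI's fine-tuning for chat models takes training data as a JSON Lines file in which each line holds one example conversation under a `messages` key. A minimal sketch, assuming an intent-classification setup: the hypothetical `to_finetune_jsonl` helper, the system prompt, and the intent labels are made up for illustration. Check OpenAI's fine-tuning guide for which models currently support fine-tuning and how many examples are required.

```python
import json
from typing import Iterable

# Assumed system prompt; it should match the prompt used at inference time.
SYSTEM_PROMPT = "Classify the user's request into a single intent label."


def to_finetune_jsonl(examples: Iterable[tuple[str, str]]) -> str:
    """Convert (utterance, intent) pairs into chat-format JSONL, one example per line."""
    lines = []
    for utterance, intent in examples:
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": utterance},
                {"role": "assistant", "content": intent},  # the label the model should learn
            ]
        }
        # ensure_ascii=False keeps non-English utterances human-readable in the file.
        lines.append(json.dumps(record, ensure_ascii=False))
    return "".join(line + "\n" for line in lines)


if __name__ == "__main__":
    print(to_finetune_jsonl([
        ("wake me up at seven", "set_alarm"),
        ("put on some jazz", "play_music"),
    ]), end="")
```

The resulting file would then be uploaded with `client.files.create(..., purpose="fine-tune")` and referenced in `client.fine_tuning.jobs.create(training_file=..., model=...)`.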

Simplifying Natural Language Understanding (NLU)

Natural Language Understanding (NLU) is a critical component of any effective voice assistant. OpenAI's tools hide much of NLU's complexity, making it easier to build voice assistants that reliably understand user intent.

- Intent recognition and entity extraction: OpenAI's language models can identify the user's intent (e.g., setting an alarm, playing music) and extract the relevant entities (e.g., the time, the song title), for instance by returning them as structured function-call arguments. This is essential for responding accurately to user requests.

- Simplified training process: With OpenAI's tools, much of the NLU work needs little or no custom training. Developers can focus on supplying relevant examples, either in the prompt or as fine-tuning data, rather than wrestling with complex model architectures and training procedures.

- Natural conversational flows: Better NLU leads to more natural, engaging conversations. The assistant can pick up nuances in phrasing and respond appropriately, making the interaction feel more human-like.
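One common way to get intents and entities out of an OpenAI chat model is function calling: you describe each intent as a tool whose JSON Schema lists the entities to extract, and the model replies with the chosen tool's name and JSON-encoded arguments. The sketch assumes the v1.x Python SDK; the `set_alarm` and `play_music` tools, their fields, the `Intent` type, the `"unknown"` convention, and the default model name are hypothetical.

```python
import json
from dataclasses import dataclass, field

# Each intent is a "tool"; its JSON Schema describes the entities to extract.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "set_alarm",
            "description": "Set an alarm for the user.",
            "parameters": {
                "type": "object",
                "properties": {"time": {"type": "string", "description": "Time as HH:MM, 24-hour"}},
                "required": ["time"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "play_music",
            "description": "Play a song, optionally by a given artist.",
            "parameters": {
                "type": "object",
                "properties": {"song_title": {"type": "string"}, "artist": {"type": "string"}},
                "required": ["song_title"],
            },
        },
    },
]


@dataclass
class Intent:
    name: str
    entities: dict = field(default_factory=dict)


def parse_tool_call(name: str, arguments: str) -> Intent:
    """Turn a tool call (name + JSON-encoded arguments) into an Intent."""
    known = {tool["function"]["name"] for tool in TOOLS}
    if name not in known:
        return Intent("unknown")
    try:
        entities = json.loads(arguments or "{}")
    except json.JSONDecodeError:
        return Intent("unknown")  # malformed arguments: treat as not understood
    if not isinstance(entities, dict):
        return Intent("unknown")  # arguments must be a JSON object
    return Intent(name, entities)


def recognize(client, text: str, model: str = "gpt-4o-mini") -> Intent:
    """Ask the model to pick a tool; `client` is an `openai.OpenAI` instance."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": text}],
        tools=TOOLS,
    )
    calls = response.choices[0].message.tool_calls
    if not calls:
        return Intent("unknown")  # the model answered in plain text instead
    return parse_tool_call(calls[0].function.name, calls[0].function.arguments)
```

In a live assistant, `recognize(OpenAI(), "wake me up at seven")` would typically yield something like `Intent("set_alarm", {"time": "07:00"})`, though the exact entity values depend on the model. Validating the tool name and arguments locally keeps a malformed model reply from reaching the rest of the assistant.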

Building Engaging and Personalized Voice User Interfaces (VUIs)

The Voice User Interface (VUI) is the crucial point of interaction between the user and the voice assistant. A well-designed VUI is key to a positive user experience.

- User experience (UX) best practices: However powerful OpenAI's tools are, they must be paired with thoughtful VUI design. Consider clear prompts, concise responses, error handling, and feedback that lets users know what the assistant heard and what it is doing.

- Engaging and intuitive interactions: OpenAI's tools make interactions more engaging and intuitive by generating natural-sounding speech and understanding complex user requests.

- Best practices for effective VUIs: Keep instructions concise and clear, confirm important actions, recover gracefully from errors, and design for different user contexts and situations.

- Learning from successful VUIs: Study established voice assistants (e.g., Alexa, Google Assistant) to understand effective design patterns.
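The error-handling and confirmation advice above can be made concrete with a small per-turn policy: confirm before acting, reprompt with feedback when nothing usable was heard, and fall back gracefully after repeated failures. This is a hypothetical sketch, not an OpenAI feature; the `next_step` function, the `NextStep` type, the wording of the prompts, the `max_retries` limit, and the convention that an intent of `"unknown"` means the NLU step could not classify the request are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class NextStep:
    action: str  # "confirm", "reprompt", or "fallback"
    speech: str  # short text to hand to text-to-speech


def next_step(transcript: str, intent: str, prior_failures: int, max_retries: int = 2) -> NextStep:
    """Decide the assistant's next move for one turn.

    `prior_failures` counts the consecutive earlier turns that were not understood.
    """
    heard = transcript.strip()
    if heard and intent != "unknown":
        # Understood: confirm before acting, keeping the prompt short.
        return NextStep("confirm", f"{intent.replace('_', ' ').capitalize()}, right?")
    if prior_failures < max_retries:
        # Not understood yet: tell the user what went wrong, then reprompt.
        if not heard:
            speech = "Sorry, I didn't catch that."
        else:
            speech = f"Sorry, I'm not sure how to help with '{heard}'."
        return NextStep("reprompt", speech + " Could you say it another way?")
    # Too many failures in a row: stop looping and offer a way out.
    return NextStep("fallback", "I'm having trouble understanding. Say 'help' to hear what I can do.")
```

Capping retries matters in voice interfaces: without a screen, a user stuck in an endless "Sorry, I didn't catch that" loop has no other way to recover.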

Conclusion

OpenAI's development tools make voice assistants considerably easier to build. By providing speech-to-text and text-to-speech APIs, pre-trained models, and simplified NLU, OpenAI reduces the complexity and time required to build effective, engaging voice interfaces. This opens conversational AI to a wider range of developers, fostering innovation and accelerating the adoption of voice technology across many applications. Ready to build your own voice assistant? Explore OpenAI's development tools today.

Featured Posts

-

Eric Andre Rejected Kieran Culkin For A Real Pain Role

May 23, 2025

Eric Andre Rejected Kieran Culkin For A Real Pain Role

May 23, 2025 -

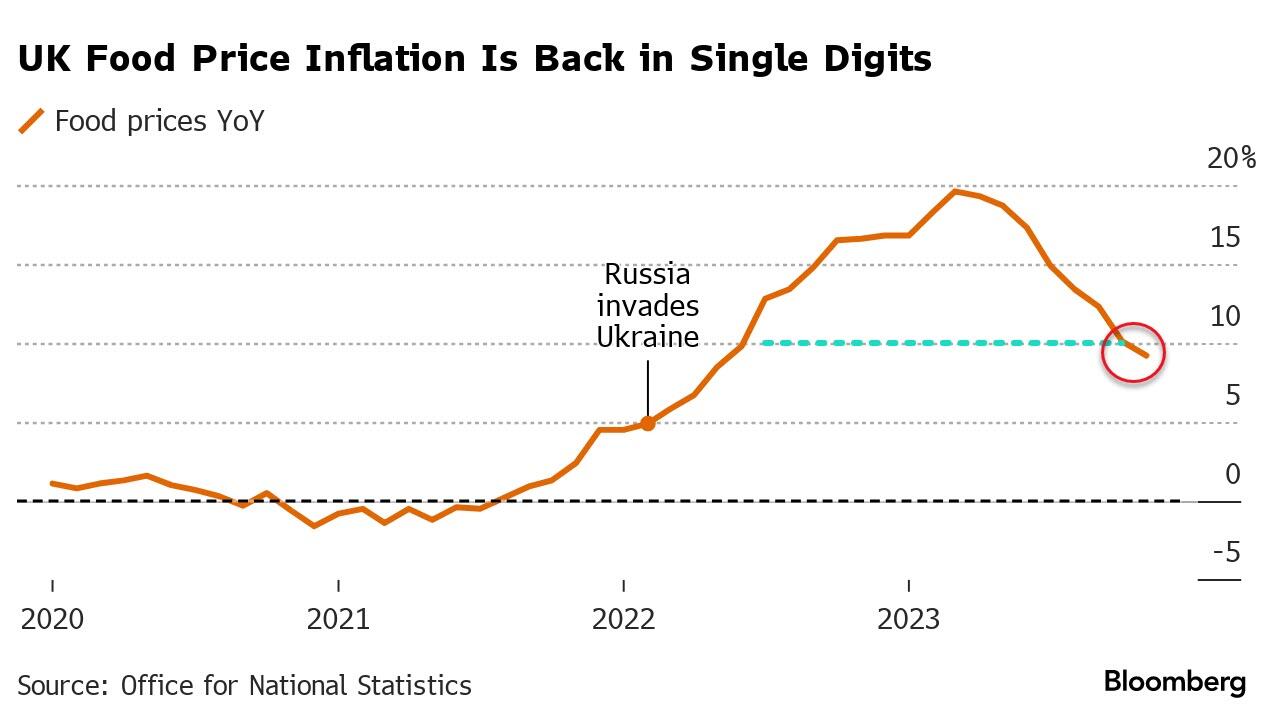

Lower Uk Inflation Eases Boe Rate Cut Pressure Pound Value Increases

May 23, 2025

Lower Uk Inflation Eases Boe Rate Cut Pressure Pound Value Increases

May 23, 2025 -

Four Year Deal Bbc Secures Continued Ecb Cricket Coverage

May 23, 2025

Four Year Deal Bbc Secures Continued Ecb Cricket Coverage

May 23, 2025 -

Grand Ole Oprys Historic London Show Royal Albert Hall Broadcast Details

May 23, 2025

Grand Ole Oprys Historic London Show Royal Albert Hall Broadcast Details

May 23, 2025 -

New Post From Dylan Dreyer Featuring Brian Fichera Public Response

May 23, 2025

New Post From Dylan Dreyer Featuring Brian Fichera Public Response

May 23, 2025

Latest Posts

-

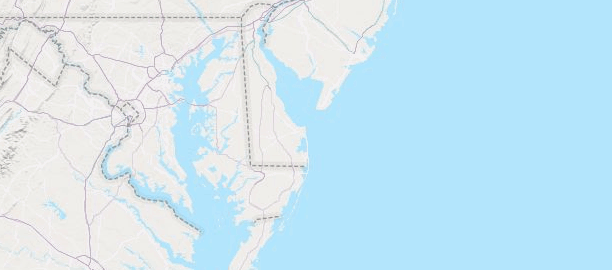

2025 Memorial Day Weekend Beach Forecast Ocean City Rehoboth Sandy Point

May 23, 2025

2025 Memorial Day Weekend Beach Forecast Ocean City Rehoboth Sandy Point

May 23, 2025 -

Memorial Day Weekend 2025 Beach Forecast Ocean City Rehoboth Sandy Point

May 23, 2025

Memorial Day Weekend 2025 Beach Forecast Ocean City Rehoboth Sandy Point

May 23, 2025 -

Memorial Day Weekend 2025 Ocean City Rehoboth And Sandy Point Beach Forecast

May 23, 2025

Memorial Day Weekend 2025 Ocean City Rehoboth And Sandy Point Beach Forecast

May 23, 2025 -

2025 Umd Graduation Kermit The Frog To Deliver Commencement Address

May 23, 2025

2025 Umd Graduation Kermit The Frog To Deliver Commencement Address

May 23, 2025 -

University Of Maryland Graduation A Notable Amphibians Inspiring Speech

May 23, 2025

University Of Maryland Graduation A Notable Amphibians Inspiring Speech

May 23, 2025