Court Case Highlights Free Speech Concerns Regarding Character AI Chatbots

The Case Itself: Facts and Legal Arguments

The case of Smith v. NovelAI Corp. (a fictionalized example for illustrative purposes), filed in the US District Court for the Northern District of California, centered on allegations of free speech violations related to the content generated by NovelAI's Character AI chatbot. The plaintiff, a user, argued that the platform's content moderation policies, specifically the removal of certain chatbot interactions, constituted censorship and violated their First Amendment rights.

- Plaintiff's Arguments: The plaintiff claimed that the removal of their chatbot interactions, which involved fictional scenarios exploring controversial topics, was arbitrary and unfairly restricted their freedom of expression. They argued that the chatbot's output, while potentially offensive to some, represented a form of protected speech.

- Defendant's Defense: NovelAI Corp. countered that it had a right to moderate content on its platform to prevent the spread of illegal or harmful material, including hate speech and incitement to violence. It argued that it is not responsible for every utterance generated by its AI, and that its moderation policies were reasonably designed to balance free expression with the need to maintain a safe online environment.

- Legal Precedents: The parties cited several key precedents concerning online content moderation and Section 230 of the Communications Decency Act, which generally shields online platforms from liability for content created by their users. The court also considered case law on the definition of "speech" in a digital context and the limits of free speech protections.

- Problematic Chatbot Outputs: Examples of allegedly problematic chatbot outputs included sexually suggestive content and scenarios depicting violence, which the plaintiff argued were removed unfairly. The defendant countered that these outputs violated the platform's terms of service and community guidelines.

Character AI Chatbots and the First Amendment

The applicability of free speech protections to AI-generated content is a novel and complex legal issue. The Smith v. NovelAI Corp. case forces a deeper examination of this question.

- Legal Definition of "AI Speech": The court grappled with defining "speech" when it's generated by an AI. Is the speech that of the developer, the user, or the AI itself? This is a crucial question with significant implications for accountability and liability.

- Role of the Chatbot Developer: The developer's role in shaping the AI's responses through training data and algorithms was central to the legal arguments. The court needed to determine the extent to which the developer is responsible for the AI's outputs.

- Misinformation and Harmful Content: The potential for Character AI chatbots to spread misinformation, propaganda, or incite violence is a major concern. The case highlighted the need for effective content moderation strategies to mitigate these risks.

- Challenges of Content Moderation: Moderating AI-generated content presents unique challenges. The sheer volume of output and the dynamic nature of AI responses make it difficult to implement comprehensive and effective moderation policies without infringing on free speech rights.

The Question of Accountability

Determining who should be held responsible for harmful or illegal content generated by Character AI chatbots is a pivotal challenge.

- Accountability Models: The case explored several potential accountability models: holding developers responsible for inadequately designed AI, holding users responsible for their prompts and interactions, or, more speculatively, treating the AI itself as a bearer of liability, a concept that remains legally complex.

- Increased Regulation: The case may lead to increased regulation of Character AI chatbot development and deployment, with stricter requirements for content moderation and safety measures.

- Assigning Liability: Assigning liability for AI-generated content is a significant hurdle. The unpredictable nature of AI and the complexities of tracing the source of harmful outputs present substantial challenges for legal frameworks.

Implications for the Future of AI Development

The Smith v. NovelAI Corp. case has significant long-term implications for the AI industry.

- Changes in AI Development: This case may drive changes in AI development practices, with increased focus on safety measures, bias mitigation, and the incorporation of ethical considerations into the design process.

- Government Regulation: The case may influence government regulation of AI, potentially leading to new laws and guidelines that address free speech concerns and the responsible development and deployment of Character AI chatbots.

- Impact on Innovation: The need to balance free speech with safety and accountability may slow innovation but could ultimately lead to more responsible and ethical AI development.

The Public's Role in Shaping AI Ethics

Public awareness and engagement are crucial in shaping the ethical considerations surrounding Character AI chatbots.

- Responsible AI Use: Educating the public on responsible AI use and development helps promote ethical practices.

- Public Discourse: Encouraging public discourse on AI ethics will help in establishing widely accepted standards and guidelines.

- AI Literacy: Initiatives promoting AI literacy can equip individuals with the understanding needed to navigate the complex ethical considerations surrounding AI technologies.

Conclusion

The Smith v. NovelAI Corp. case (fictional example) highlights the critical need for a balanced approach that protects free speech while mitigating the risks associated with Character AI chatbots. The legal and ethical considerations surrounding accountability, content moderation, and the future of AI development are paramount. This case underscores the evolving nature of free speech in the digital age and the urgent need for thoughtful consideration of how to balance these rights with the potential for misuse of powerful AI technologies.

Call to Action: Stay informed about developments in this evolving legal landscape. Understanding the free speech implications of Character AI chatbots and other AI technologies is crucial for shaping a future where AI benefits society while respecting fundamental rights. Engage in discussions and advocate for responsible innovation, so that Character AI chatbots are developed and used in ways that uphold free speech while mitigating potential harms.

Featured Posts

-

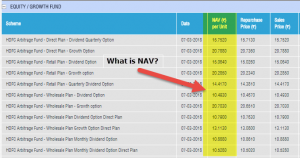

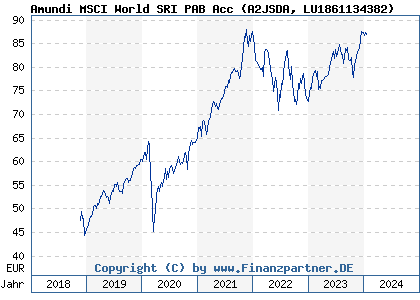

Tracking The Net Asset Value Nav Of The Amundi Dow Jones Industrial Average Ucits Etf

May 24, 2025

Tracking The Net Asset Value Nav Of The Amundi Dow Jones Industrial Average Ucits Etf

May 24, 2025 -

Will A Us Market Upswing Counteract The Daxs Positive Momentum

May 24, 2025

Will A Us Market Upswing Counteract The Daxs Positive Momentum

May 24, 2025 -

Aex Index Wint Terrein Terwijl Amerikaanse Beurs Daalt

May 24, 2025

Aex Index Wint Terrein Terwijl Amerikaanse Beurs Daalt

May 24, 2025 -

Amundi Msci World Ex Us Ucits Etf Acc Understanding Net Asset Value Nav

May 24, 2025

Amundi Msci World Ex Us Ucits Etf Acc Understanding Net Asset Value Nav

May 24, 2025 -

Bbc Radio 1 Big Weekend 2025 A Complete Guide To Ticket Purchasing

May 24, 2025

Bbc Radio 1 Big Weekend 2025 A Complete Guide To Ticket Purchasing

May 24, 2025

Latest Posts

-

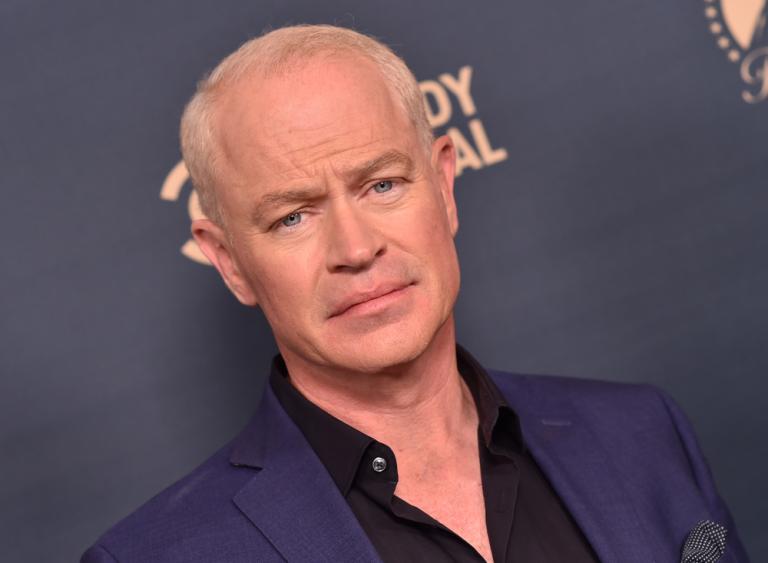

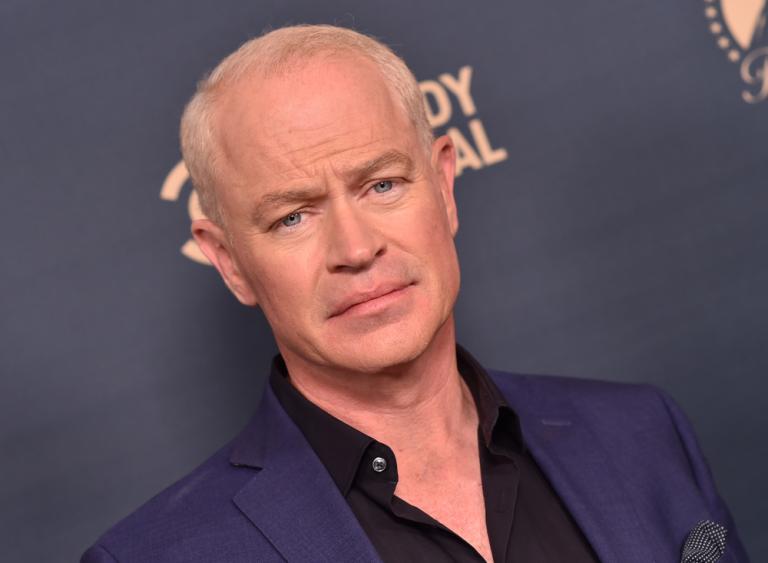

The Last Rodeo Exploring Neal Mc Donoughs Roles Faith And His Portrayal Of The Pope

May 24, 2025

The Last Rodeo Exploring Neal Mc Donoughs Roles Faith And His Portrayal Of The Pope

May 24, 2025 -

Gas Prices Plunge Memorial Day Weekend Travel Could Be Cheaper

May 24, 2025

Gas Prices Plunge Memorial Day Weekend Travel Could Be Cheaper

May 24, 2025 -

Neal Mc Donough Discusses Faith And Film In The Last Rodeo

May 24, 2025

Neal Mc Donough Discusses Faith And Film In The Last Rodeo

May 24, 2025 -

Whats Open On Memorial Day 2025 In Michigan Your Guide To The Federal Holiday

May 24, 2025

Whats Open On Memorial Day 2025 In Michigan Your Guide To The Federal Holiday

May 24, 2025 -

The Last Rodeo An Interview With Neal Mc Donough About Faith Film And Bull Riding

May 24, 2025

The Last Rodeo An Interview With Neal Mc Donough About Faith Film And Bull Riding

May 24, 2025