Develop Voice Assistants Effortlessly With OpenAI's New Tools

Understanding OpenAI's Contribution to Voice Assistant Development

OpenAI has significantly simplified voice assistant creation by providing readily available, high-performing APIs and pre-trained models. You no longer need massive datasets and years of development to get started; OpenAI's contribution lies in broadening access to these technologies. The tools most relevant to voice assistant development are Whisper for speech-to-text conversion and GPT models for natural language understanding (NLU).

- Improved accuracy in speech recognition: Whisper delivers strong transcription accuracy, even with background noise, converting spoken words into text reliably. This is vital for a smooth user experience in your voice assistant.

- Enhanced natural language processing capabilities: OpenAI's GPT models excel at understanding the nuances of human language, allowing your voice assistant to interpret complex commands and requests accurately. This leads to more natural and intuitive interactions.

- Simplified integration with existing platforms: OpenAI APIs are designed for easy integration with popular development frameworks and platforms, reducing the complexity of deployment and saving valuable development time.

- Reduced development time and costs: By leveraging pre-trained models and streamlined APIs, you can drastically cut down on the time and resources required to build a functional voice assistant, making it a viable project for individuals and smaller teams.

Step-by-Step Guide: Building a Basic Voice Assistant with OpenAI Tools

Let's outline a basic approach to building a voice assistant using OpenAI's tools. This guide focuses on utilizing OpenAI's APIs and pre-trained models.

- Setting up your development environment: Begin by installing the necessary libraries and setting up an OpenAI API key. Popular choices for development include Python with libraries like openai and speech_recognition.

- Choosing the right OpenAI model for your needs: Select an appropriate GPT model based on your requirements. For basic tasks, a smaller model might suffice; for complex interactions, a larger model offers greater accuracy and contextual understanding.

- Integrating speech-to-text functionality (using Whisper API): Use the Whisper API to transcribe audio input from a microphone or audio file into text. This forms the foundation of your voice assistant's input mechanism.

- Implementing natural language understanding (using GPT models): Utilize a GPT model to interpret the transcribed text, understanding the user's intent and extracting relevant information.

- Adding text-to-speech capabilities: Integrate a text-to-speech (TTS) engine to convert the voice assistant's responses back into spoken words. Several TTS APIs and libraries are readily available.

- Deploying your voice assistant: Once developed, you can deploy your voice assistant on various platforms, such as a local machine or a cloud server (AWS, Google Cloud, Azure), or integrate it into a smart home device.
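The steps above can be sketched as a single conversational turn: transcribe the audio, send the text to a chat model, and pass the reply to a TTS callback. This is a minimal sketch, not a definitive implementation. It assumes the official openai Python package (v1-style client) and the model names whisper-1 and gpt-4o-mini; the function names, the system prompt, and the question.wav file name are all hypothetical. The client is passed in as a parameter so the flow can be exercised without network access.

```python
def transcribe(client, audio_file, model="whisper-1"):
    """Speech-to-text: send a binary audio file object for transcription."""
    # Assumes an openai v1-style client; the model name is an assumption.
    result = client.audio.transcriptions.create(model=model, file=audio_file)
    return result.text


def interpret(client, user_text, model="gpt-4o-mini"):
    """Language understanding: ask a chat model to respond to the request."""
    response = client.chat.completions.create(
        model=model,
        messages=[
            # Hypothetical system prompt; adjust to your assistant.
            {"role": "system", "content": "You are a concise voice assistant."},
            {"role": "user", "content": user_text},
        ],
    )
    return response.choices[0].message.content


def handle_utterance(client, audio_file, speak=print):
    """Run one turn: audio in, reply out, then hand the reply to `speak`."""
    text = transcribe(client, audio_file)
    reply = interpret(client, text)
    speak(reply)  # swap `print` for a call into the TTS engine you choose
    return text, reply


# Real usage (assumes `pip install openai` and OPENAI_API_KEY is set):
#   from openai import OpenAI
#   with open("question.wav", "rb") as f:   # hypothetical file name
#       handle_utterance(OpenAI(), f)
```

Keeping the TTS step behind a plain callback means any TTS library or API can be plugged in later without touching the rest of the turn logic.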

Advanced Features and Customization with OpenAI's APIs

OpenAI's APIs allow for significant customization and the addition of advanced features to elevate your voice assistant beyond the basics.

- Customizing personality and tone of voice: Shape the GPT model's responses, for example with a system prompt or fine-tuning, to reflect a specific personality or tone, creating a unique user experience.

- Integrating with external services (e.g., calendars, weather APIs): Connect your voice assistant to external APIs to provide access to real-time information and services, expanding its functionality.

- Adding context awareness and memory: Implement mechanisms to allow your voice assistant to remember previous interactions and maintain context during a conversation, making the experience more natural and fluid.

- Building a personalized knowledge base: Create a custom knowledge base tailored to your specific needs, enabling your voice assistant to answer questions and provide information within a defined domain.

- Training custom models for specific tasks or domains: For highly specialized tasks, you can fine-tune existing models or train new ones using your own data, improving performance in specific areas.
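Two of the ideas above, a consistent personality and short-term memory, can be illustrated with a small helper that builds the message list sent to a chat model. This is a sketch under stated assumptions: the class name ConversationMemory, its methods, and the five-turn default are hypothetical, and the role/content message layout is the chat-style format the GPT chat endpoints accept. A fixed-size window is only one simple way to bound context; summarizing older turns is another.

```python
from collections import deque


class ConversationMemory:
    """Keeps a persona prompt plus the most recent turns of a conversation."""

    def __init__(self, persona, max_turns=5):
        self.persona = persona
        # Each entry is a (user, assistant) pair; the deque discards the
        # oldest pair automatically once max_turns is exceeded.
        self.turns = deque(maxlen=max_turns)

    def remember(self, user_text, assistant_text):
        """Store one completed exchange."""
        self.turns.append((user_text, assistant_text))

    def build_messages(self, new_user_text):
        """Return persona, remembered turns, then the new request, in order."""
        messages = [{"role": "system", "content": self.persona}]
        for user_text, assistant_text in self.turns:
            messages.append({"role": "user", "content": user_text})
            messages.append({"role": "assistant", "content": assistant_text})
        messages.append({"role": "user", "content": new_user_text})
        return messages


memory = ConversationMemory("You are a cheerful, brief assistant.", max_turns=2)
memory.remember("Set a timer for ten minutes.", "Timer set for ten minutes.")
messages = memory.build_messages("Make it fifteen instead.")
```

Because the earlier exchange is included in the message list, the model has what it needs to resolve "it" in the follow-up request.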

Overcoming Challenges and Best Practices

While OpenAI's tools simplify development, certain challenges may arise.

- Handling noisy audio input: Implement noise reduction techniques to improve the accuracy of speech-to-text conversion, particularly in noisy environments.

- Managing ambiguous user requests: Design your NLU to detect ambiguous or unclear requests and ask a clarifying question rather than guess at the user's intent.

- Ensuring privacy and security: Implement appropriate security measures to protect user data and comply with privacy regulations.

- Optimizing for performance and scalability: Optimize your code and choose appropriate infrastructure to ensure your voice assistant performs efficiently and can scale to handle increasing user demand.

- Iterative development and testing: Embrace an iterative development process, regularly testing and refining your voice assistant to ensure optimal performance and user satisfaction.
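To make the point about ambiguous requests concrete, here is a minimal sketch of a router that acts only when exactly one intent matches and otherwise asks the user to clarify. It uses plain keyword matching as a stand-in for real NLU, which in practice would more likely come from a GPT model. The intent names, keyword sets, and function names are all hypothetical.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_KEYWORDS = {
    "weather": {"weather", "forecast", "rain"},
    "calendar": {"calendar", "meeting", "schedule"},
    "timer": {"timer", "alarm", "remind"},
}


def match_intents(transcript):
    """Return the sorted names of all intents whose keywords appear."""
    words = set(re.findall(r"[a-z']+", transcript.lower()))
    return sorted(
        name for name, keys in INTENT_KEYWORDS.items() if words & keys
    )


def route(transcript):
    """Decide whether to act on a request or ask the user to clarify."""
    intents = match_intents(transcript)
    if len(intents) == 1:
        return ("handle", intents[0])
    if not intents:
        return ("clarify", "Sorry, I didn't catch that. Could you rephrase?")
    # More than one plausible intent: ask instead of guessing.
    return ("clarify", "Did you mean " + " or ".join(intents) + "?")
```

The same structure also covers empty or garbled transcripts from noisy audio, since they match no intent and fall through to the rephrase prompt.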

Conclusion

Using OpenAI's tools for voice assistant development offers significant advantages: ease of use, reduced development time, and enhanced capabilities. The combination of Whisper for speech-to-text and powerful GPT models for natural language understanding provides a robust foundation for creating innovative voice-driven applications.

Ready to start developing your own voice assistant? Explore OpenAI's tools and resources for building voice-driven applications, and start building today.

Featured Posts

-

Dsp Signals Caution Indian Stock Market Concerns Prompt Cash Increase

Apr 29, 2025

Dsp Signals Caution Indian Stock Market Concerns Prompt Cash Increase

Apr 29, 2025 -

Louisville Eateries Face Challenges Due To River Road Roadwork

Apr 29, 2025

Louisville Eateries Face Challenges Due To River Road Roadwork

Apr 29, 2025 -

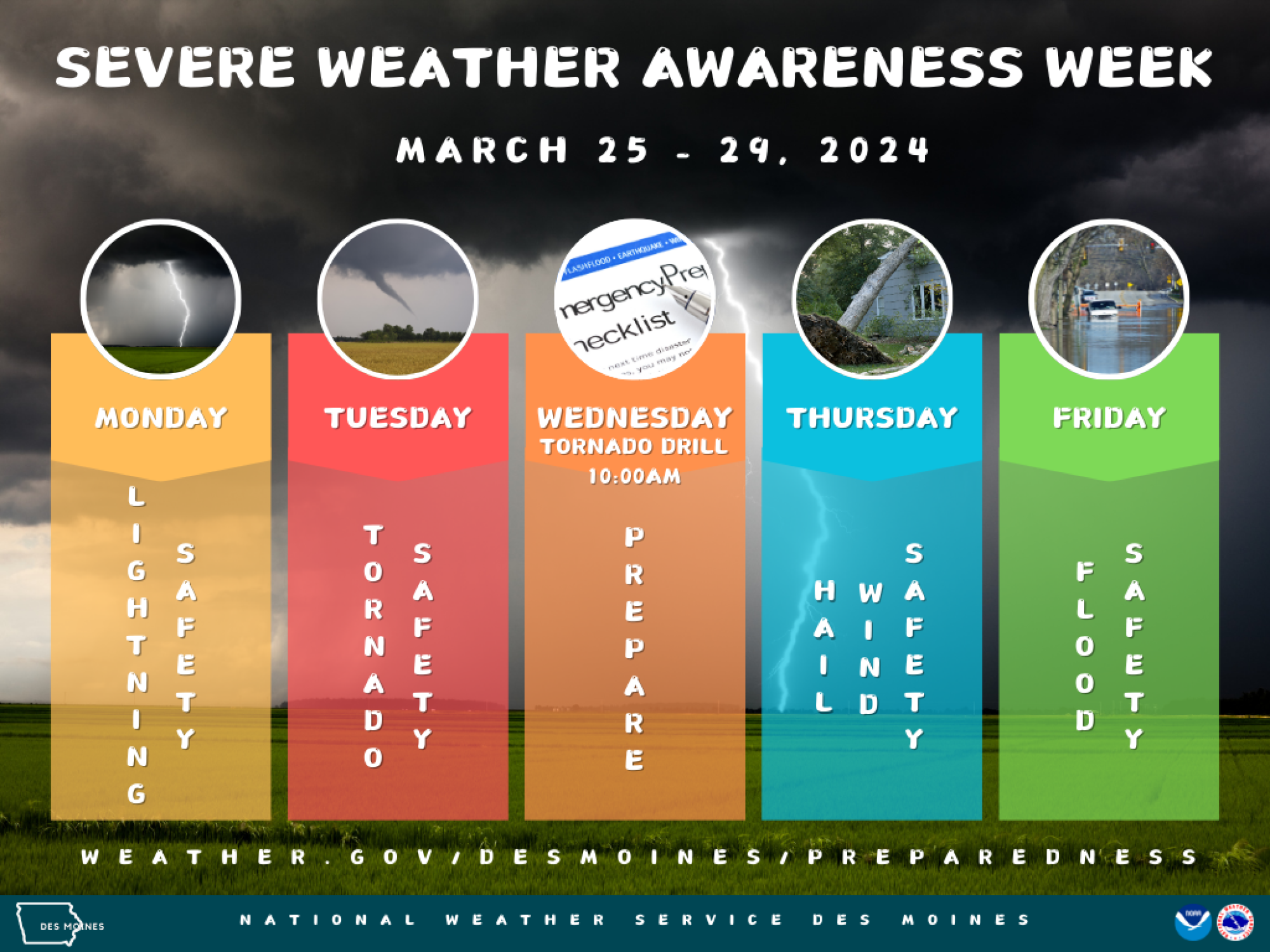

Nws Kentucky Important Updates For Severe Weather Awareness Week

Apr 29, 2025

Nws Kentucky Important Updates For Severe Weather Awareness Week

Apr 29, 2025 -

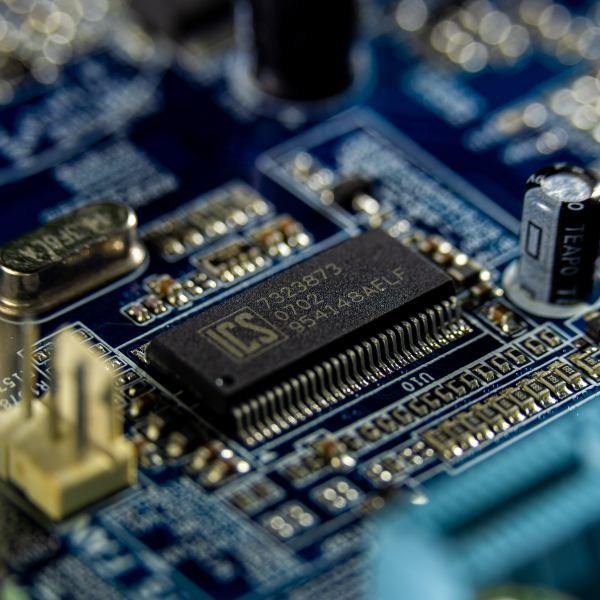

Long Lasting Power Evaluating Kuxius Solid State Power Bank Technology

Apr 29, 2025

Long Lasting Power Evaluating Kuxius Solid State Power Bank Technology

Apr 29, 2025 -

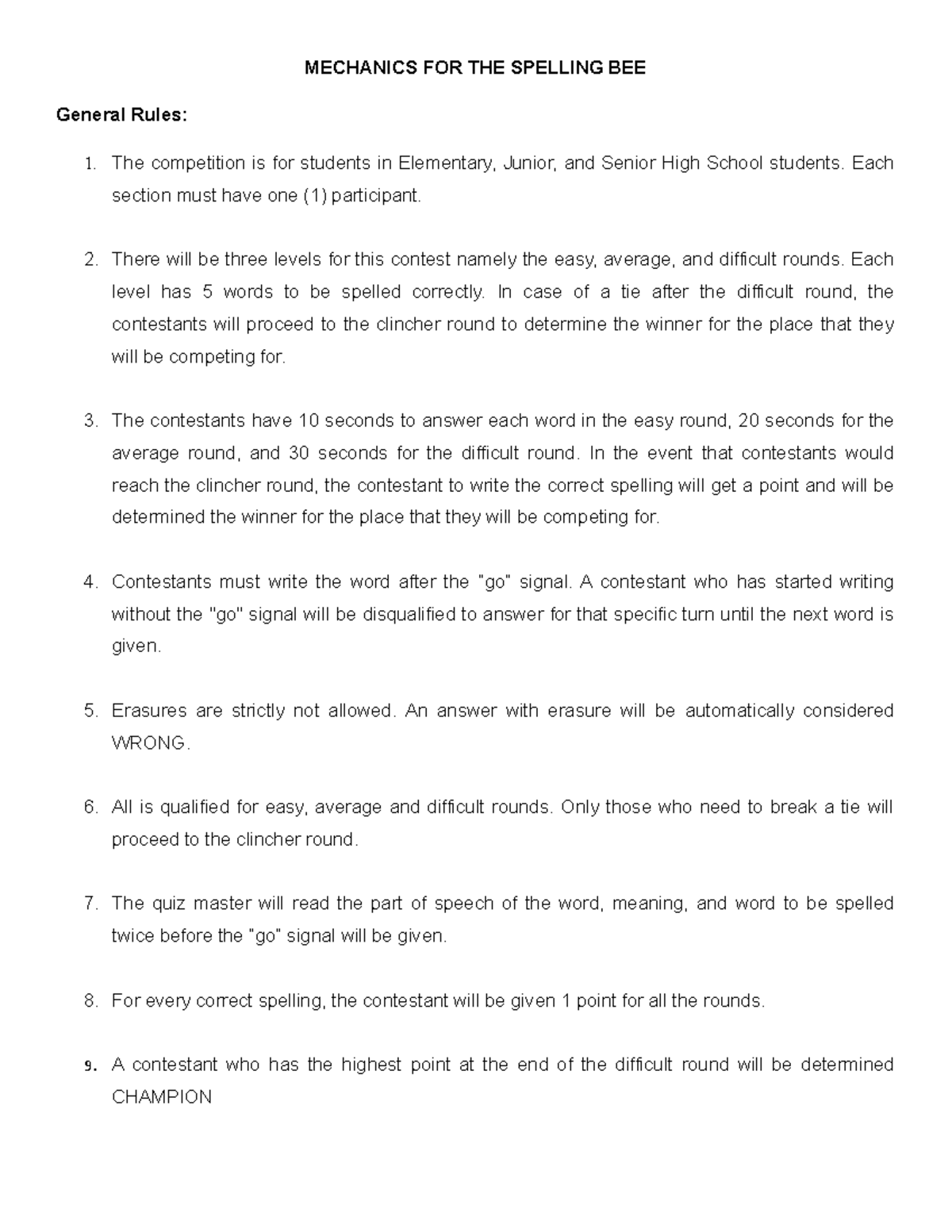

Nyt Spelling Bee April 3 2025 Full Solutions And Tips

Apr 29, 2025

Nyt Spelling Bee April 3 2025 Full Solutions And Tips

Apr 29, 2025

Latest Posts

-

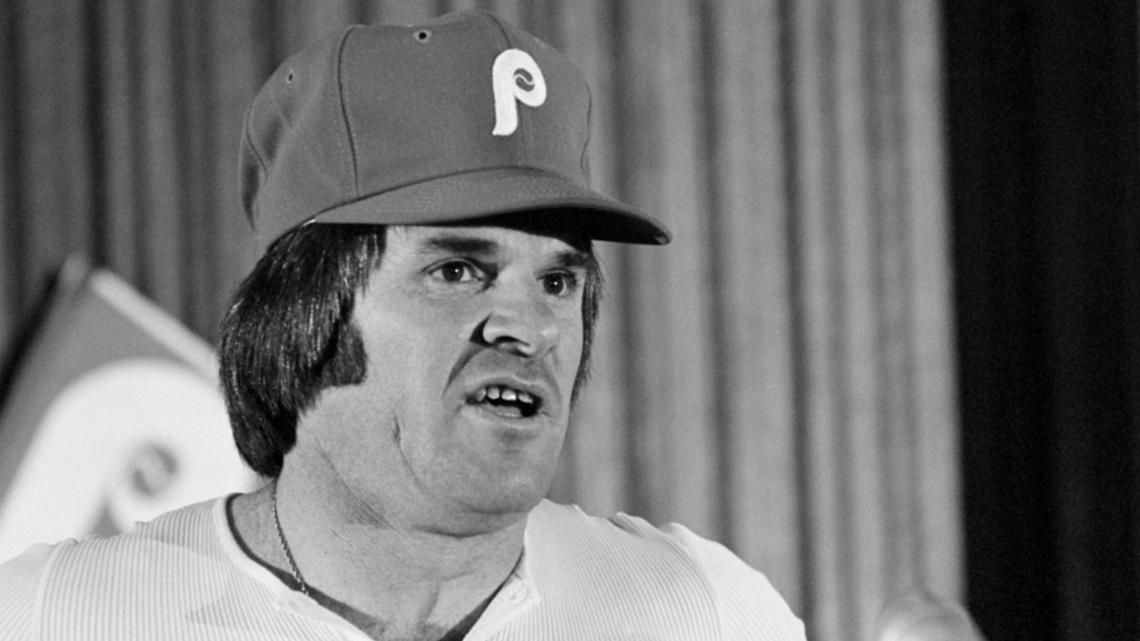

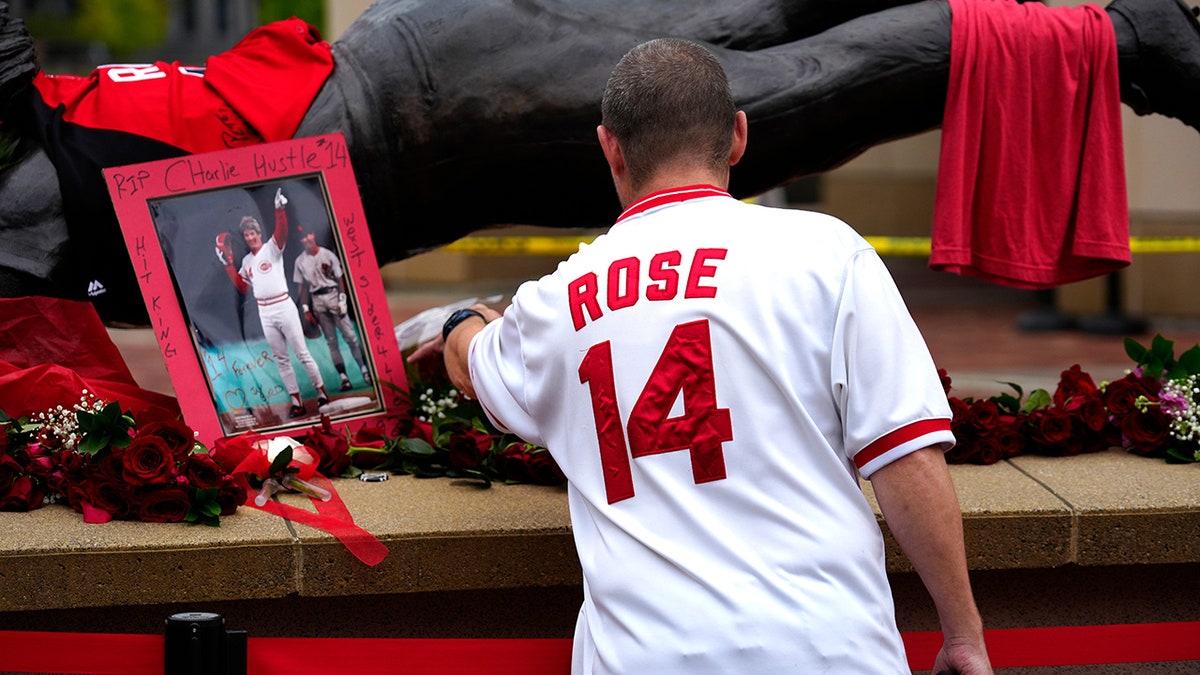

Trump To Pardon Pete Rose Posthumously Details And Reactions

Apr 29, 2025

Trump To Pardon Pete Rose Posthumously Details And Reactions

Apr 29, 2025 -

Pete Rose Pardon Trumps Statement And Its Implications

Apr 29, 2025

Pete Rose Pardon Trumps Statement And Its Implications

Apr 29, 2025 -

Trump To Pardon Pete Rose After His Death Examining The Announcement

Apr 29, 2025

Trump To Pardon Pete Rose After His Death Examining The Announcement

Apr 29, 2025 -

Will Pete Rose Receive A Posthumous Pardon From Trump Analysis And Reactions

Apr 29, 2025

Will Pete Rose Receive A Posthumous Pardon From Trump Analysis And Reactions

Apr 29, 2025 -

Pete Rose Pardon Trumps Post Presidency Plans

Apr 29, 2025

Pete Rose Pardon Trumps Post Presidency Plans

Apr 29, 2025