OpenAI Under FTC Scrutiny: A Deep Dive Into The ChatGPT Probe

The FTC's Investigation: What We Know So Far

The FTC's investigation into OpenAI is a landmark event, potentially setting precedents for how the government will regulate the rapidly evolving field of AI.

Allegations of Unfair and Deceptive Practices

The FTC's investigation centers on allegations that OpenAI engaged in unfair or deceptive business practices. While the specifics remain largely under wraps, leaked documents and news reports suggest several key areas of concern:

- Data Privacy Violations: The massive datasets used to train ChatGPT may include sensitive personal information collected without proper consent. This raises questions about compliance with data privacy laws such as the EU's GDPR and California's CCPA.

- Bias in ChatGPT's Outputs: Critics point to instances where ChatGPT has generated biased or discriminatory content, reflecting biases present in the training data. This raises serious ethical concerns about the fairness and equity of AI systems.

- Misleading Statements about ChatGPT's Capabilities: Some argue that OpenAI has overstated ChatGPT's capabilities, potentially misleading users about its limitations and risks. This could constitute a deceptive practice under Section 5 of the FTC Act, which prohibits unfair or deceptive acts or practices.

The Scope of the Investigation

The breadth of the FTC's inquiry remains unclear, but it likely encompasses multiple facets of OpenAI's operations:

- Data Security: The investigation is likely examining OpenAI's practices for securing the vast amounts of data used to train its models. This includes assessing their vulnerability to data breaches and unauthorized access.

- User Privacy: The FTC is likely scrutinizing OpenAI's data collection and usage practices to determine whether they comply with existing privacy laws and regulations.

- Algorithmic Bias: A key area of concern is the potential for algorithmic bias in ChatGPT and how OpenAI is addressing this critical issue. The investigation will likely examine OpenAI's efforts to mitigate bias in its models.

The outcome of this investigation could set an important precedent for future AI development, particularly regarding the responsibilities of companies that create and deploy powerful AI models.

Potential Penalties and Outcomes

If the FTC finds that OpenAI violated the law, the consequences could be significant:

- Substantial Fines: OpenAI could face significant monetary penalties. The amount would depend on the severity of the violations and the company's cooperation with the investigation.

- Changes to Business Practices: The FTC could mandate significant changes to OpenAI's data handling practices, model training processes, and disclosure statements.

- Restrictions on Data Usage: The FTC might impose restrictions on the types of data OpenAI can collect and use for training its models.

- Reputational Damage: Even without formal penalties, the investigation could severely damage OpenAI's reputation, impacting its ability to attract investors and partners.

The Ethical Concerns Surrounding ChatGPT and Large Language Models (LLMs)

The OpenAI investigation highlights broader ethical concerns surrounding ChatGPT and other LLMs.

Data Privacy and Security

The massive datasets used to train LLMs like ChatGPT raise serious concerns about data privacy and security:

- Data Breaches: The sheer volume of data stored and processed by OpenAI makes it a prime target for cyberattacks, raising the risk of data breaches and unauthorized access to sensitive information.

- Unauthorized Data Collection: Concerns exist about the potential for unauthorized data collection and the lack of transparency in data usage practices. Users may not fully understand how their data is being used to train these powerful models.

- Lack of Transparency: The lack of transparency regarding data sources and processing methods makes it difficult for users to assess the potential risks associated with the use of their data.
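
To make the data privacy concern concrete, one safeguard a training pipeline might apply is stripping obvious personal identifiers from text before it is used. The sketch below is purely illustrative: the `redact_pii` function and its two patterns are assumptions made for this example, not a description of OpenAI's actual practices, and real pipelines would need far broader coverage than email addresses and US-style phone numbers.

```python
import re

# Hypothetical patterns for illustration only; they catch simple cases
# and will miss many real-world formats.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and US-style phone numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = US_PHONE_RE.sub("[PHONE]", text)
    return text


if __name__ == "__main__":
    print(redact_pii("Contact jane.doe@example.com or 555-123-4567."))
```

Pattern matching of this kind only addresses identifiers with a predictable shape. Names, addresses, and contextual details that identify a person are much harder to detect, which is part of why transparency about data sources matters.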

Algorithmic Bias and Fairness

AI models like ChatGPT are not immune to the biases present in the data they are trained on:

- Bias in ChatGPT's Output: Examples of biased or discriminatory outputs from ChatGPT have been widely documented, highlighting the challenge of mitigating bias in AI systems.

- Challenges in Mitigating Bias: Developing effective strategies to identify and mitigate bias in large language models is an ongoing challenge, requiring significant research and development efforts.

- Ethical Implications of Unfair Outcomes: The deployment of biased algorithms can lead to unfair or discriminatory outcomes, perpetuating existing societal inequalities.
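
One simple way to quantify an unfair outcome is to compare how often each group receives a favorable result, sometimes called a demographic parity gap. The sketch below assumes a classifier-style setting with a binary outcome per person; the function name and the toy data are assumptions made for illustration. Measuring bias in free-form LLM text is considerably harder and usually requires additional evaluation methods.

```python
from collections import defaultdict


def demographic_parity_gap(groups, outcomes):
    """Return (gap, rates): the spread in favorable-outcome rates across groups.

    groups   -- iterable of group labels, one per individual
    outcomes -- iterable of 0/1 values, where 1 means a favorable outcome
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in zip(groups, outcomes):
        totals[group] += 1
        positives[group] += int(outcome)
    rates = {group: positives[group] / totals[group] for group in totals}
    gap = max(rates.values()) - min(rates.values())
    return gap, rates


if __name__ == "__main__":
    # Toy data: group "a" gets a favorable outcome 3 of 4 times, group "b" 1 of 4.
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    outcomes = [1, 1, 1, 0, 1, 0, 0, 0]
    gap, rates = demographic_parity_gap(groups, outcomes)
    print(rates, gap)
```

A gap of zero means every group receives favorable outcomes at the same rate. A large gap is a signal to investigate, not proof of discrimination on its own, since it says nothing about why the rates differ.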

Misinformation and Manipulation

The ability of LLMs to generate human-quality text raises concerns about their potential for misuse:

- Examples of Misuse: ChatGPT and similar LLMs can be used to create realistic-sounding fake news articles, propaganda, and other forms of misinformation.

- Need for Safeguards: Safeguards are needed to prevent the misuse of these technologies for malicious purposes, including the development of detection methods and ethical guidelines for their use.

- Role of Regulation: Regulation plays a crucial role in preventing the spread of misinformation and the manipulation of public opinion through AI-generated content.

The Future of AI Regulation in Light of the OpenAI Probe

The OpenAI investigation underscores the urgent need for a comprehensive regulatory framework for AI.

The Need for Comprehensive AI Governance

The rapid development of AI necessitates the creation of clear guidelines and regulations:

- Proposed Regulations: Various proposals for AI regulation are being debated, including regulations focusing on data privacy, algorithmic transparency, and liability for AI-related harms.

- International Cooperation: International cooperation is crucial to ensure that AI regulation is effective and consistent across different jurisdictions.

- Role of Independent Oversight Bodies: Independent oversight bodies are needed to monitor the development and deployment of AI systems and ensure compliance with regulations.

Balancing Innovation and Safety

Regulating AI requires a delicate balance between fostering innovation and ensuring safety:

- Responsible AI Development: Encouraging responsible AI development practices, including ethical considerations and rigorous testing, is crucial for mitigating risks.

- Ethical Considerations: Ethical considerations should be at the forefront of AI development and deployment, ensuring that AI systems are used for beneficial purposes and do not cause harm.

- Risk-Based Approach to Regulation: A risk-based approach to regulation, focusing on the potential harms associated with specific AI applications, is essential.

The Impact on the AI Industry

The OpenAI investigation will likely have a significant impact on the AI industry:

- Changes in Development Practices: Companies developing AI systems will likely need to adapt their practices to ensure compliance with stricter regulations.

- Increased Scrutiny: The AI industry will face increased scrutiny from regulators and the public, leading to greater transparency and accountability.

- Potential for Slower Innovation: Greater regulatory oversight could potentially slow down the pace of innovation, particularly in areas with higher risks.

Conclusion

The FTC's investigation into OpenAI highlights the critical need for responsible AI development and the urgent necessity for a comprehensive regulatory framework. The allegations of unfair and deceptive practices, alongside the broader ethical concerns surrounding ChatGPT and LLMs, underscore the potential risks associated with powerful AI technologies. The investigation's outcome will significantly shape the future of AI regulation and the AI industry's approach to ethical considerations. Key takeaways include the need for stronger data privacy protections, strategies to mitigate algorithmic bias, and safeguards against misinformation. Stay informed about the evolving landscape of AI regulation and the continued scrutiny of OpenAI by following the latest news on the FTC investigation and exploring resources dedicated to responsible AI development.

Featured Posts

-

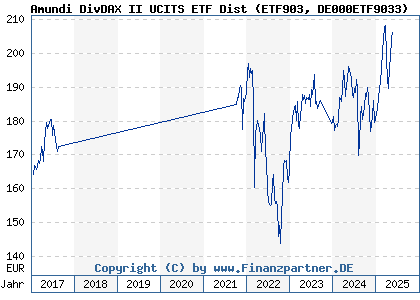

Amundi Msci World Ii Ucits Etf Usd Hedged Dist Nav Performance And Analysis

May 24, 2025

Amundi Msci World Ii Ucits Etf Usd Hedged Dist Nav Performance And Analysis

May 24, 2025 -

Mia Farrows Comeback Is Ronan Farrow The Key

May 24, 2025

Mia Farrows Comeback Is Ronan Farrow The Key

May 24, 2025 -

Mwshr Daks Alalmany Awl Mwshr Awrwby Ytkhta Dhrwt Mars

May 24, 2025

Mwshr Daks Alalmany Awl Mwshr Awrwby Ytkhta Dhrwt Mars

May 24, 2025 -

Atp Indian Wells Drapers Maiden Masters 1000 Championship

May 24, 2025

Atp Indian Wells Drapers Maiden Masters 1000 Championship

May 24, 2025 -

England Airpark And Alexandria International Airports New Ae Xplore Campaign Fly Local Explore The World

May 24, 2025

England Airpark And Alexandria International Airports New Ae Xplore Campaign Fly Local Explore The World

May 24, 2025

Latest Posts

-

Zekanin Sirri Burclarda Mi En Akilli Burclar Listesi

May 24, 2025

Zekanin Sirri Burclarda Mi En Akilli Burclar Listesi

May 24, 2025 -

Burclar Ve Zeka En Yetenekli Burclar Hangileri

May 24, 2025

Burclar Ve Zeka En Yetenekli Burclar Hangileri

May 24, 2025 -

Horoscope Predictions For March 20 2025 5 Powerful Signs

May 24, 2025

Horoscope Predictions For March 20 2025 5 Powerful Signs

May 24, 2025 -

En Zeki Burclar Akil Zeka Ve Basarida Oende Olanlar

May 24, 2025

En Zeki Burclar Akil Zeka Ve Basarida Oende Olanlar

May 24, 2025 -

En Zeki Burclar Dahilik Genleri Ve Akil Yetenekleri

May 24, 2025

En Zeki Burclar Dahilik Genleri Ve Akil Yetenekleri

May 24, 2025