OpenAI's ChatGPT Under FTC Scrutiny: Implications For Users And The Future Of AI

FTC's Concerns Regarding ChatGPT and Data Privacy

The FTC's investigation into OpenAI likely centers on its data handling practices. Concerns revolve around the collection, storage, and use of user data generated through interactions with ChatGPT. Understanding these concerns is vital for all users of AI chatbots.

Data Collection and Usage

The FTC is likely scrutinizing several aspects of OpenAI's data practices:

- The type of data collected: This includes personal information provided by users, their conversation history with the chatbot (which may contain sensitive details), IP addresses, and possibly data from linked accounts if users choose to integrate them.

- The methods used to collect data: OpenAI's collection methods could include direct user input, cookies that track user activity, and data gleaned from the interactions themselves. The transparency of these methods is a key area of FTC interest.

- Data security and protection: The FTC will examine OpenAI's measures to safeguard user data from breaches and unauthorized access. This includes assessing their security protocols, encryption methods, and incident response plans.

- Compliance with data privacy regulations: OpenAI's adherence to laws such as the European Union's General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) is also under scrutiny. Failure to comply could result in significant penalties.

Potential Violations of User Privacy

The investigation could uncover potential violations, including:

- Insufficient transparency: A lack of clarity regarding what data OpenAI collects, how it's used, and with whom it's shared.

- Lack of informed consent: Failure to obtain meaningful consent from users before collecting and using their data.

- Data security failures: Inadequate measures to protect user data from unauthorized access, breaches, or loss.

- Improper use of data: Using user data for purposes that were not disclosed to users, which would be a serious breach of trust.

Algorithmic Bias and Fairness in ChatGPT

Another key area of FTC concern is algorithmic bias within ChatGPT. AI models learn from vast datasets, and if those datasets contain biases, the model is likely to reflect them and may amplify them.

Identifying and Mitigating Bias

The FTC's investigation likely involves:

- Analysis of training data: Examining the data used to train ChatGPT for biased content that could influence the model's responses.

- Algorithm assessment: Scrutinizing the algorithms that govern ChatGPT's responses to identify potential sources of bias.

- Bias mitigation methods: Evaluating the effectiveness of methods used by OpenAI to detect and mitigate bias in ChatGPT's output.

Implications of Biased AI for Users

Biased AI can have serious consequences, including:

- Unfair or discriminatory outcomes: ChatGPT might produce biased or discriminatory responses that affect users who rely on them.

- Reinforcement of societal inequalities: Biased outputs could perpetuate and even amplify existing societal biases and inequalities.

- Erosion of trust: The use of biased AI systems can damage public trust in AI technologies.

The Future of AI Development and Regulation in Light of the FTC Investigation

The FTC's investigation signals a shift towards increased regulatory oversight of AI companies.

Increased Scrutiny of AI Companies

This increased scrutiny could manifest in:

- Stringent data privacy regulations: New or stricter regulations focusing on data privacy and security for AI systems.

- Greater algorithm transparency: Requirements for AI companies to provide more information about the workings of their algorithms.

- Mandatory audits: Regular audits to assess AI systems for bias and fairness.

Impact on Innovation and Development

While regulation is vital, it's crucial to avoid stifling innovation. The challenge is to balance responsible AI development with technological advancement. This requires:

- Ethical guidelines: Establishing clear ethical guidelines for AI development and deployment.

- Industry self-regulation: Encouraging responsible practices within the AI industry through self-regulation initiatives.

- Collaboration: Fostering collaboration between researchers, policymakers, and industry stakeholders to develop responsible AI.

Conclusion

The FTC's investigation into OpenAI's ChatGPT underscores the urgent need for responsible AI development and regulation. The implications for users are substantial, affecting data privacy and exposure to algorithmic bias. The future of AI hinges on effectively addressing these concerns. Companies must prioritize user privacy and algorithmic fairness to foster trust and ensure ethical AI advancement. We must engage in discussions about the responsible use of ChatGPT and similar AI tools to shape a future where AI benefits all of humanity. Stay informed about the FTC's investigation and the evolving landscape of AI regulation to ensure your safe and responsible use of ChatGPT and similar technologies.

Featured Posts

-

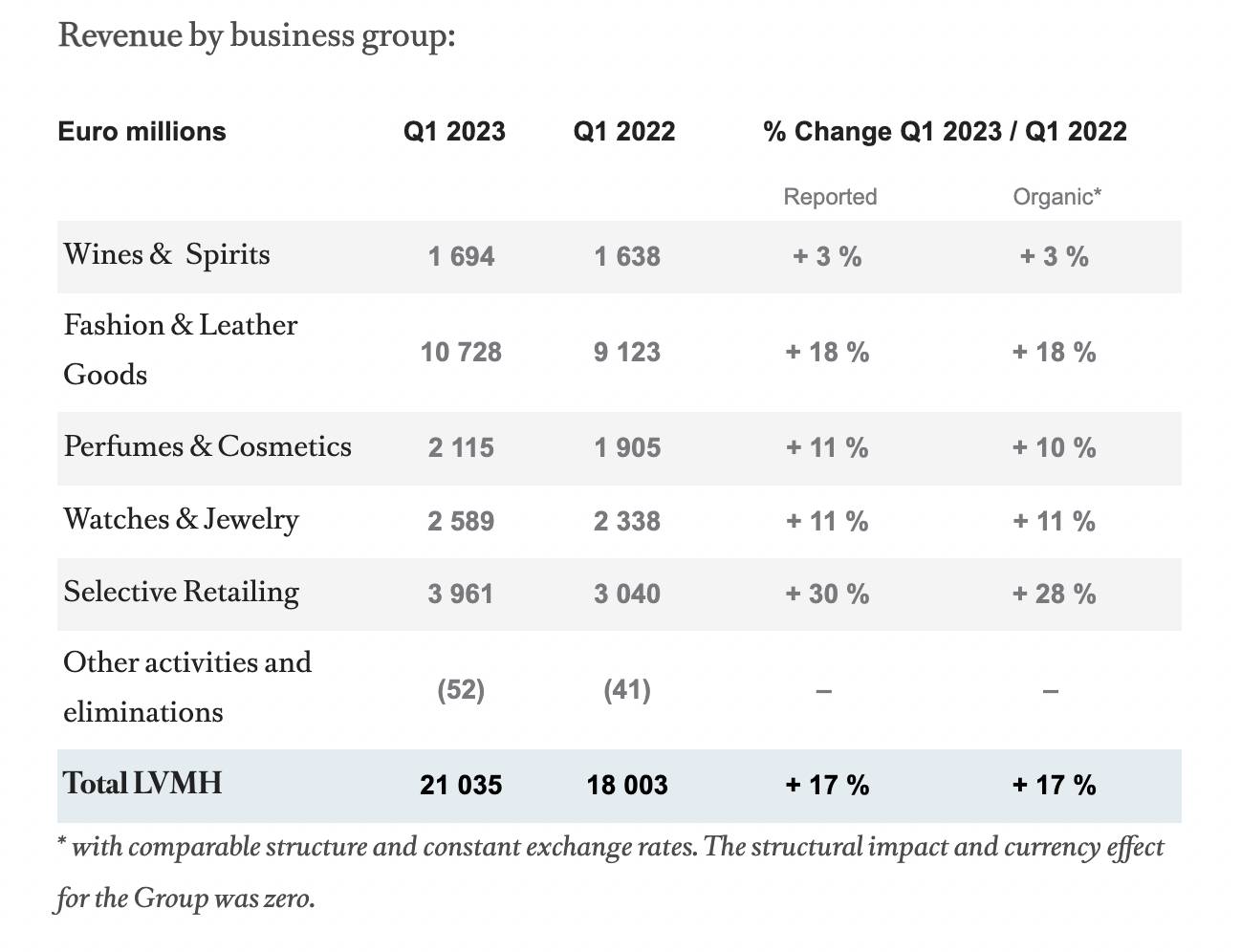

Lvmh Q1 Sales Miss Expectations Shares Fall 8 2

May 25, 2025

Lvmh Q1 Sales Miss Expectations Shares Fall 8 2

May 25, 2025 -

Us Bands Glastonbury Gig Fan Speculation Ignites After Cryptic Post

May 25, 2025

Us Bands Glastonbury Gig Fan Speculation Ignites After Cryptic Post

May 25, 2025 -

Crystal Palace Target Kyle Walker Peters On A Free

May 25, 2025

Crystal Palace Target Kyle Walker Peters On A Free

May 25, 2025 -

Porsche Cayenne 2025 A Complete Picture Gallery Of Interior And Exterior

May 25, 2025

Porsche Cayenne 2025 A Complete Picture Gallery Of Interior And Exterior

May 25, 2025 -

89 Svadeb V Krasivuyu Datu Kharkovschina Bet Rekordy

May 25, 2025

89 Svadeb V Krasivuyu Datu Kharkovschina Bet Rekordy

May 25, 2025

Latest Posts

-

Toto Wolff On George Russell Dismissing Underrated Claims And Praising The Driver

May 25, 2025

Toto Wolff On George Russell Dismissing Underrated Claims And Praising The Driver

May 25, 2025 -

Analyzing George Russells Leadership Confidence And Calmness At Mercedes

May 25, 2025

Analyzing George Russells Leadership Confidence And Calmness At Mercedes

May 25, 2025 -

Shooting Incident Prompts Safety Review At Popular Southern Vacation Destination

May 25, 2025

Shooting Incident Prompts Safety Review At Popular Southern Vacation Destination

May 25, 2025 -

Mercedes Boss Wolff Responds To George Russells Underrated Remark

May 25, 2025

Mercedes Boss Wolff Responds To George Russells Underrated Remark

May 25, 2025 -

Popular Southern Vacation Spot Rebuts Claims Of Poor Safety Following Shooting

May 25, 2025

Popular Southern Vacation Spot Rebuts Claims Of Poor Safety Following Shooting

May 25, 2025