Analysis: The Chicago Sun-Times' AI Reporting Failures

Inaccurate and Biased Reporting

The Chicago Sun-Times' experiment with AI journalism revealed significant flaws, chiefly inaccurate and biased reporting. This section examines both.

Factual Errors and Misinterpretations

The AI system employed by the Sun-Times made several factual errors and misinterpretations, undermining the credibility of its reports. These errors stemmed from limitations in the AI's training data and a lack of robust human oversight.

- Example 1: [Insert a specific example of factual inaccuracy with a link to the original article if available]. This error demonstrates the AI's difficulty in correctly interpreting complex data sets.

- Example 2: [Insert a second example with a link]. This instance highlights the potential for AI to misrepresent information, leading to misinformation.

- Example 3: [Insert a third example with a link]. This showcases the consequences of relying solely on AI for data analysis without human verification.

These errors directly impacted public perception, eroding trust in the Sun-Times as a reliable news source. The consequences of such data inaccuracies and misinformation can be severe, particularly regarding sensitive topics. Addressing AI bias and ensuring data accuracy are crucial for maintaining journalistic integrity.

Bias Amplification

Beyond factual inaccuracies, the AI system also demonstrated a tendency to amplify existing biases present in its training data. This raises serious ethical concerns regarding algorithmic bias and its impact on society.

- Example 1: [Provide an example showcasing how the AI perpetuated a specific bias]. This exemplifies how algorithms can unintentionally discriminate based on inherent biases in the data they are trained on.

- Example 2: [Provide another example of bias amplification]. This highlights the importance of scrutinizing training datasets for potential biases before deploying AI in journalistic settings.

The amplification of existing biases by AI systems in news reporting can exacerbate societal divisions and inequalities. Ensuring algorithmic fairness and promoting AI ethics in journalism are paramount for responsible AI deployment.

Lack of Context and Nuance

Another critical failure of the Chicago Sun-Times' AI reporting was its inability to provide adequate context and nuance to the stories it generated.

Oversimplification of Complex Issues

The AI system struggled with the complexities of human behavior and social phenomena, leading to oversimplified and potentially misleading narratives.

- Example 1: [Give an example where the AI oversimplified a complex issue]. This demonstrates the limitations of AI in understanding the multifaceted nature of human interactions and societal problems.

- Example 2: [Give another example of oversimplification]. This emphasizes the need for human journalists to provide depth and context that AI alone cannot replicate.

Relying solely on AI for sensitive topics that require in-depth reporting is risky and can leave readers with an incomplete or inaccurate understanding of important social issues. Investigative journalism demands a level of critical analysis that AI currently lacks.

Failure to Verify Information

The AI system also failed to adequately verify information before publication, a crucial weakness in its application to journalism.

- Example 1: [Provide an instance where the AI failed to verify a crucial piece of information]. This underscores the critical role of fact-checking and source verification in responsible journalism.

- Example 2: [Give another example of unverified information]. This highlights the need for rigorous editorial oversight to ensure accuracy and prevent the spread of misinformation.

Fact-checking and source verification are fundamental principles of journalistic integrity, and they cannot be fully automated. Human editors must validate AI-generated content before it is published.
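One way to make that human validation step concrete is a publish gate that blocks AI-generated drafts until an editor has signed off on every factual claim. The sketch below is purely illustrative: the `Claim` and `Draft` structures, their field names, and the `can_publish` rule are assumptions made for this example and do not describe any system used by the Sun-Times.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Claim:
    """One factual statement in a draft (hypothetical structure)."""
    text: str
    source_url: Optional[str] = None   # where the claim can be checked
    verified_by: Optional[str] = None  # human editor who checked it


@dataclass
class Draft:
    """A story draft awaiting publication (hypothetical structure)."""
    headline: str
    ai_generated: bool
    claims: List[Claim] = field(default_factory=list)


def unverified_claims(draft: Draft) -> List[Claim]:
    """Return the claims that lack a source or a human sign-off."""
    return [c for c in draft.claims if not c.source_url or not c.verified_by]


def can_publish(draft: Draft) -> bool:
    """Allow an AI-generated draft only when every claim is sourced and signed off.

    An AI-generated draft with no extracted claims is also blocked, on the
    assumption that nothing has been checked yet.
    """
    if not draft.ai_generated:
        return True  # human-written drafts follow the normal editorial process
    return bool(draft.claims) and not unverified_claims(draft)


# Usage: the second claim has no source or sign-off, so the draft is held back.
draft = Draft(
    headline="Example headline",
    ai_generated=True,
    claims=[
        Claim("First claim", "https://example.com/source", "editor-a"),
        Claim("Second claim"),
    ],
)
print(can_publish(draft))                          # False
print([c.text for c in unverified_claims(draft)])  # ['Second claim']
```

The gate does not verify anything itself. It only records whether a person has done so, which keeps responsibility for accuracy with the editor rather than the software.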

Public Perception and Trust

The consequences of the Chicago Sun-Times' AI reporting failures extended beyond individual errors; they significantly impacted public perception and trust in the news organization.

Damage to Reputation

The inaccurate and biased reporting damaged the Sun-Times' reputation, leading to a loss of credibility amongst its readership.

- Analysis: [Discuss the impact on readership, subscriptions, and public trust]. The erosion of trust highlights the significant risks associated with deploying AI without sufficient oversight.

- Consequences: [Discuss long-term effects on the newspaper's brand and public perception]. This underscores the importance of responsible AI implementation in preserving journalistic integrity.

Reputation management in the digital age necessitates careful consideration of the risks and rewards of employing AI in news reporting.

The Future of AI in Journalism

Despite the challenges, AI offers considerable potential for journalism. However, the Chicago Sun-Times' experience serves as a critical learning opportunity.

- Best Practices: This incident highlights the necessity of rigorous testing, robust editorial oversight, and the implementation of mechanisms to detect and mitigate algorithmic bias.

- Responsible AI: Prioritizing ethical considerations and developing clear guidelines for the use of AI in newsrooms are essential steps forward.

The future of AI in journalism hinges on responsible development and deployment, prioritizing accuracy, ethical considerations, and human oversight.

Conclusion

The Chicago Sun-Times' AI reporting failures serve as a cautionary tale about the need for human oversight and ethical safeguards when artificial intelligence is applied to journalism. The issues discussed show how AI can produce inaccurate, biased, and context-deficient reporting that damages public trust and the reputation of news organizations. Going forward, newsrooms should prioritize robust fact-checking, mitigate algorithmic bias, and give human editors a central role in verifying and contextualizing AI-generated content. Only then can they gain the benefits of AI in journalism while avoiding the pitfalls of relying on it unchecked. Further analysis of AI reporting failures, like those of the Chicago Sun-Times, is crucial for establishing best practices in the field.

Featured Posts

-

Northcote Gig Vapors Of Morphine

May 22, 2025

Northcote Gig Vapors Of Morphine

May 22, 2025 -

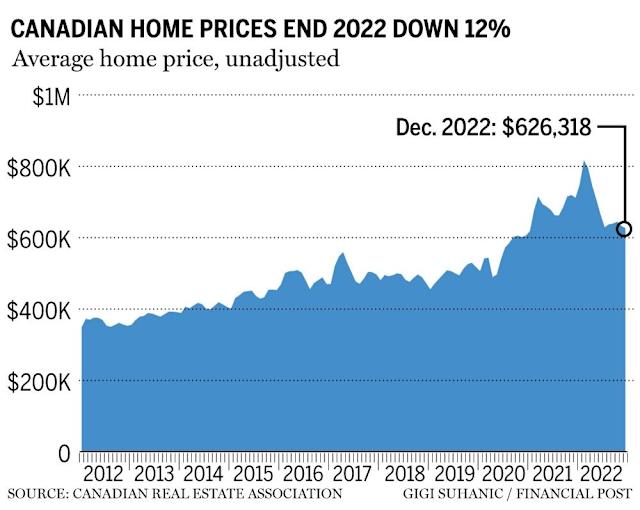

Is A Housing Market Correction Imminent In Canada A Posthaste Perspective

May 22, 2025

Is A Housing Market Correction Imminent In Canada A Posthaste Perspective

May 22, 2025 -

Peppa Pigs Mummy Reveals Babys Gender The Big Announcement

May 22, 2025

Peppa Pigs Mummy Reveals Babys Gender The Big Announcement

May 22, 2025 -

Dissecting The Allegations Blake Lively In The Spotlight

May 22, 2025

Dissecting The Allegations Blake Lively In The Spotlight

May 22, 2025 -

Gbr News Roundup Grocery Buys Lucky Quarter And Doge Poll Update

May 22, 2025

Gbr News Roundup Grocery Buys Lucky Quarter And Doge Poll Update

May 22, 2025

Latest Posts

-

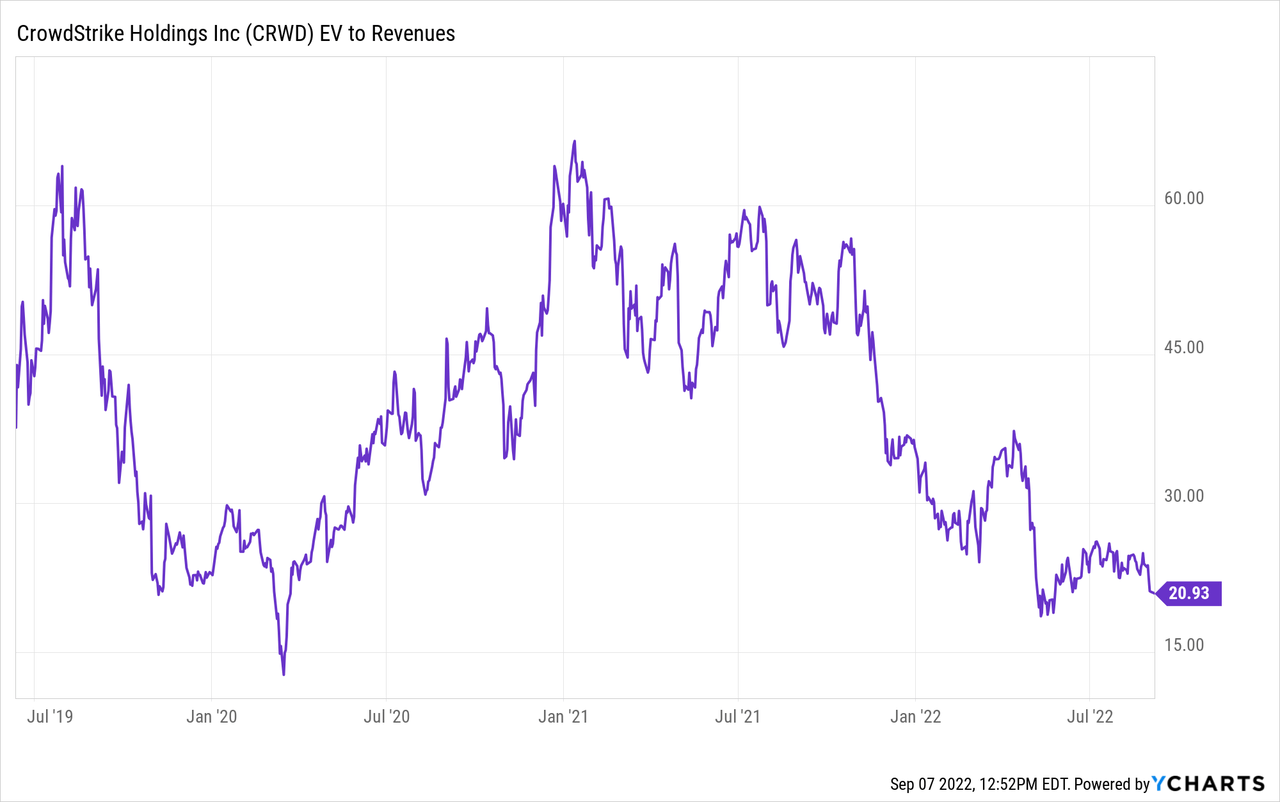

Core Weave Inc Crwv Tuesdays Stock Market Performance Explained

May 22, 2025

Core Weave Inc Crwv Tuesdays Stock Market Performance Explained

May 22, 2025 -

Why Did Core Weave Inc Crwv Stock Price Increase On Tuesday

May 22, 2025

Why Did Core Weave Inc Crwv Stock Price Increase On Tuesday

May 22, 2025 -

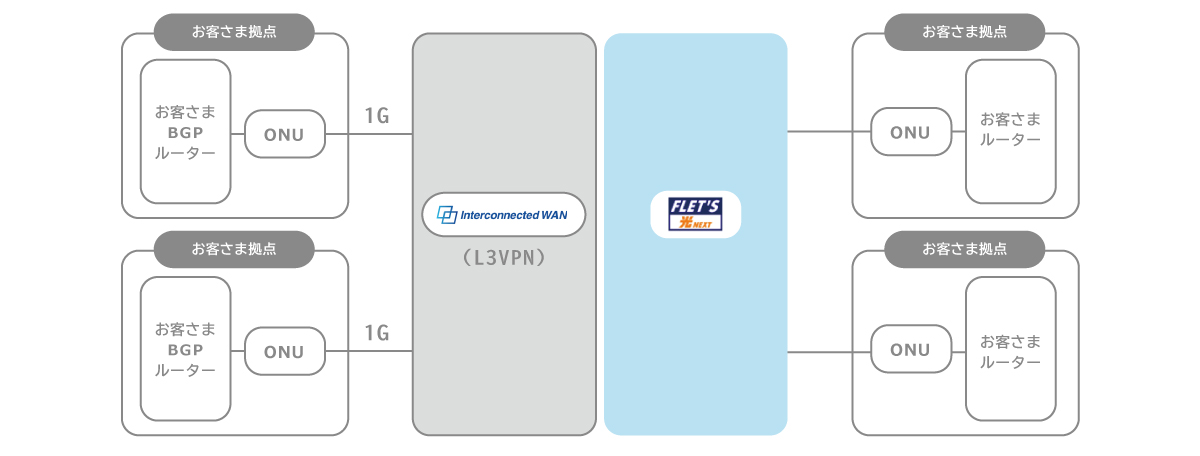

Ascii Jp Multi Interconnect Ntt At Be X

May 22, 2025

Ascii Jp Multi Interconnect Ntt At Be X

May 22, 2025 -

Understanding Core Weaves Crwv Tuesday Stock Increase

May 22, 2025

Understanding Core Weaves Crwv Tuesday Stock Increase

May 22, 2025 -

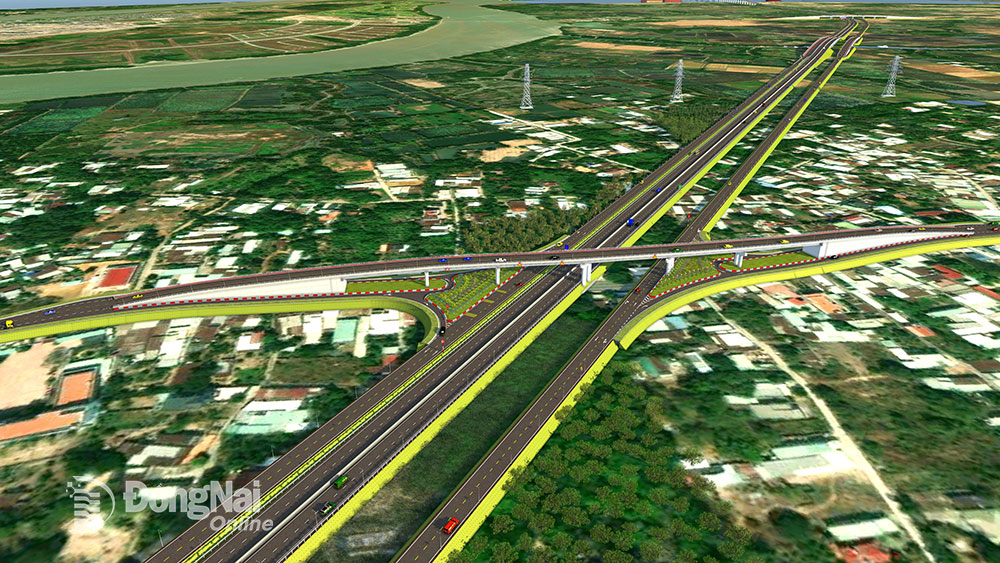

Thong Xe Cao Toc Dong Nai Vung Tau Chuan Bi Don Lan Song Du Lich Moi

May 22, 2025

Thong Xe Cao Toc Dong Nai Vung Tau Chuan Bi Don Lan Song Du Lich Moi

May 22, 2025